Deirdre Mulligan

Organizational Governance of Emerging Technologies: AI Adoption in Healthcare

May 10, 2023

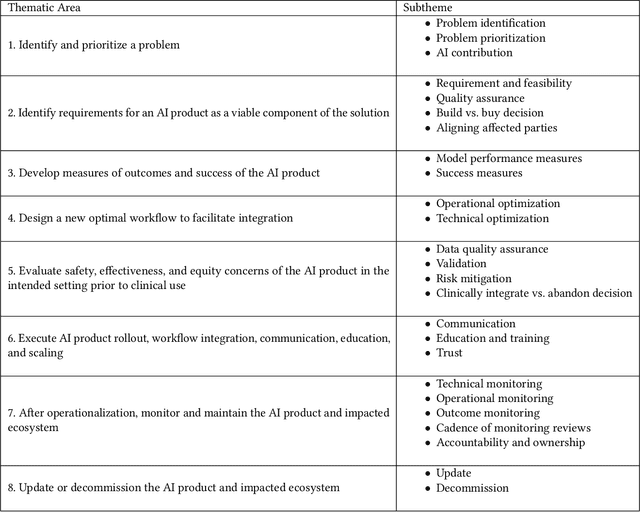

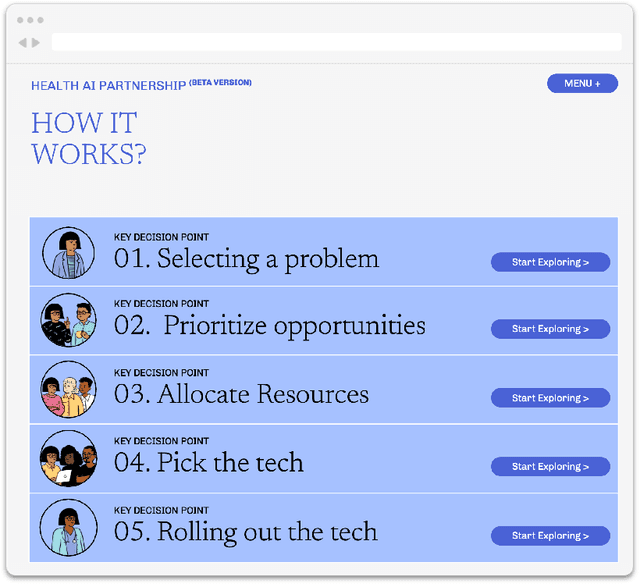

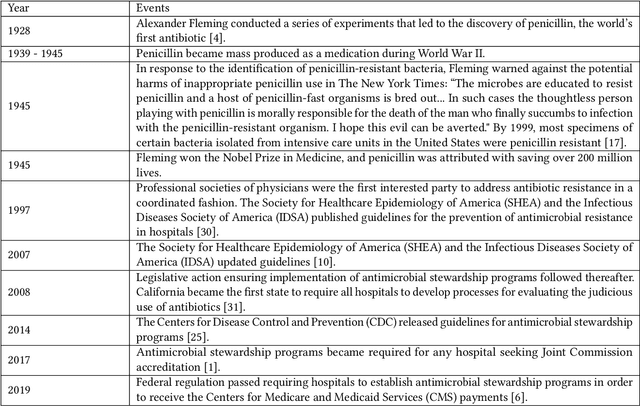

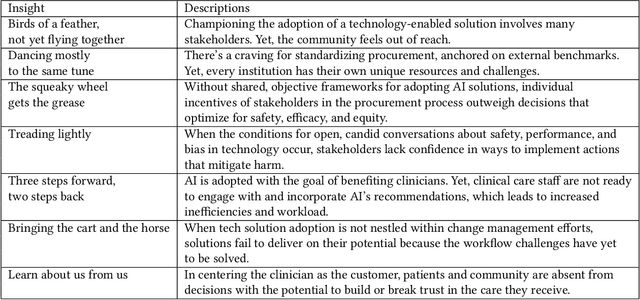

Abstract: Private and public sector structures and norms refine how emerging technology is used in practice. In healthcare, despite a proliferation of AI adoption, the organizational governance surrounding its use and integration is often poorly understood. In this research, the Health AI Partnership (HAIP) aims to better define the requirements for adequate organizational governance of AI systems in healthcare settings and to support health system leaders in making more informed decisions around AI adoption. To work towards this understanding, we first identify how the standards for AI adoption in healthcare may be designed to be used easily and efficiently. Then, we map out the precise decision points involved in the practical institutional adoption of AI technology within specific health systems. Practically, we achieve this through a multi-organizational collaboration with leaders from major health systems across the United States and key informants from related fields. Working with the consultancy IDEO.org, we conducted usability-testing sessions with healthcare and AI ethics professionals. Usability analysis revealed a prototype structured around mock key decision points that align with how organizational leaders approach technology adoption. Concurrently, we conducted semi-structured interviews with 89 professionals in healthcare and other relevant fields. Using a modified grounded theory approach, we identified 8 key decision points and comprehensive procedures throughout the AI adoption lifecycle. This is one of the most detailed qualitative analyses to date of the current governance structures and processes involved in AI adoption by health systems in the United States. We hope these findings can inform future efforts to build capabilities to promote the safe, effective, and responsible adoption of emerging technologies in healthcare.

Designing Commercial Therapeutic Robots for Privacy Preserving Systems and Ethical Research Practices within the Home

Jun 29, 2016

Abstract: The migration of robots from the laboratory into sensitive home settings as commercially available therapeutic agents represents a significant transition for information privacy and ethical imperatives. We present new privacy paradigms and apply the Fair Information Practices (FIPs) to investigate concerns unique to the placement of therapeutic robots in private home contexts. We then explore the importance and utility of research ethics as operationalized by existing human subjects research frameworks to guide the consideration of therapeutic robotic users -- a step vital to the continued research and development of these platforms. Together, privacy and research ethics frameworks provide two complementary approaches to protect users and ensure responsible yet robust information sharing for technology development. We make recommendations for the implementation of these principles -- paying particular attention to specific principles that apply to vulnerable individuals (i.e., children, disabled, or elderly persons) -- to promote the adoption and continued improvement of long-term, responsible, and research-enabled robotics in private settings.
