Dawit Mureja

Automatic Spine Segmentation using Convolutional Neural Network via Redundant Generation of Class Labels for 3D Spine Modeling

Nov 29, 2017

Abstract: The number of people suffering from spine problems increased significantly from 2010 to 2016. Automatic segmentation of the spine from computed tomography (CT) images is important for diagnosing spine conditions and for performing surgery with computer-assisted surgery systems. The spine has a complex anatomy that consists of 33 vertebrae, 23 intervertebral disks, the spinal cord, and connecting ribs. As a result, the spinal surgeon needs a robust algorithm to segment the spine and create a model of it. In this study, we developed a fully automatic approach for spine segmentation from CT based on a hybrid method, and we compared our segmentation results with reference segmentations obtained by experts. The method combines a convolutional neural network (CNN) and a fully convolutional network (FCN), and utilizes class redundancy as a soft constraint to greatly improve the segmentation results. The proposed method was found to significantly improve both the accuracy of the segmentation results and the system processing time. Our comparison was based on 12 measurements: the Dice coefficient (94%), Jaccard index (93%), volumetric similarity (96%), sensitivity (97%), specificity (99%), precision (over-segmentation: 8.3; under-segmentation: 2.6), accuracy (99%), Matthews correlation coefficient (0.93), mean surface distance (0.16 mm), Hausdorff distance (7.4 mm), and global consistency error (0.02). We experimented with CT images from 32 patients, and the experimental results demonstrated the efficiency of the proposed method.
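As an illustration of how several of the overlap measures listed above are defined, the following minimal sketch computes the Dice coefficient, Jaccard index, sensitivity, and specificity for two binary masks. It is not the authors' evaluation code: the function name `overlap_metrics` and the flattened-list mask representation are assumptions made for this example.

```python
def overlap_metrics(pred, ref):
    """Overlap metrics for two equal-length binary masks (flattened voxels).

    `pred` is the automatic segmentation and `ref` the expert reference;
    both names are hypothetical and chosen only for this sketch.
    """
    if len(pred) != len(ref):
        raise ValueError("masks must have the same number of voxels")

    # Confusion-matrix counts over all voxels.
    tp = sum(1 for p, r in zip(pred, ref) if p and r)
    fp = sum(1 for p, r in zip(pred, ref) if p and not r)
    fn = sum(1 for p, r in zip(pred, ref) if not p and r)
    tn = sum(1 for p, r in zip(pred, ref) if not p and not r)

    def ratio(num, den):
        # Return NaN instead of raising when a metric is undefined.
        return num / den if den else float("nan")

    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "jaccard": ratio(tp, tp + fp + fn),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


if __name__ == "__main__":
    predicted = [1, 1, 0, 0, 1, 0]
    reference = [1, 0, 0, 0, 1, 1]
    print(overlap_metrics(predicted, reference))
```

In practice the masks would be 3D volumes, and the surface-based measures (mean surface distance, Hausdorff distance) would require voxel spacing information that this sketch does not model.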

Meta-Learning via Feature-Label Memory Network

Oct 19, 2017

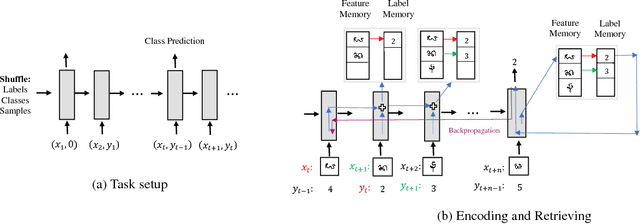

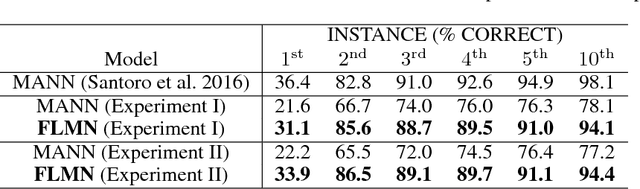

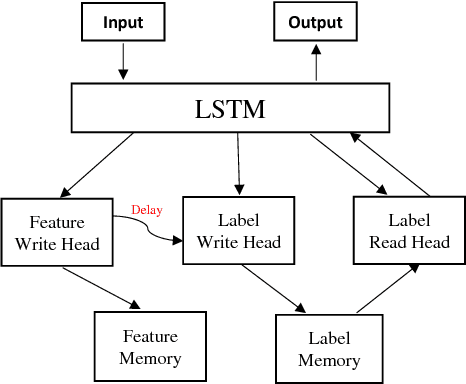

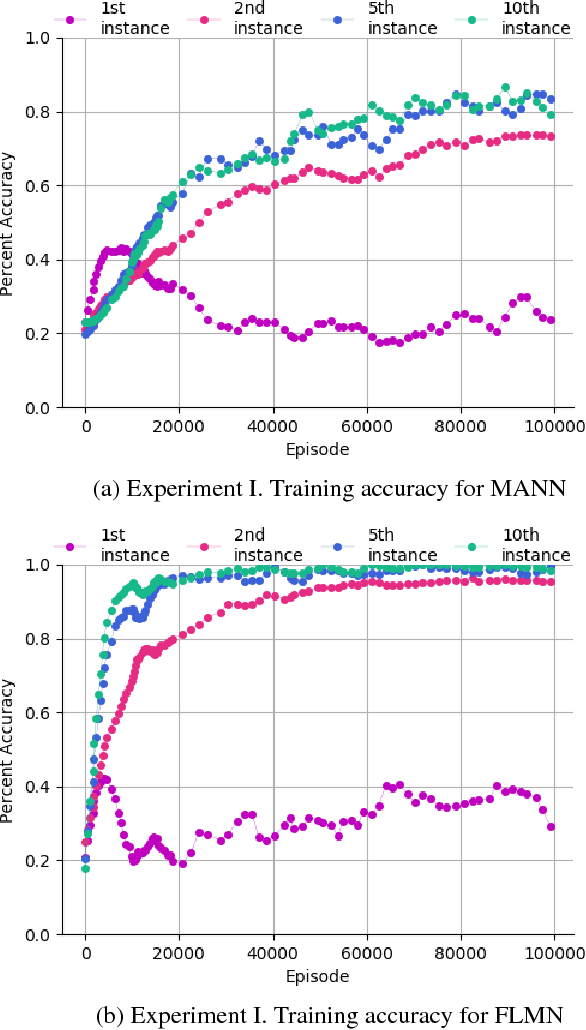

Abstract: Deep learning typically requires training a very capable architecture using large datasets. However, many important learning problems demand an ability to draw valid inferences from small datasets, and such problems pose a particular challenge for deep learning. In this regard, research on "meta-learning" is being actively conducted. Recent work has suggested a Memory Augmented Neural Network (MANN) for meta-learning. MANN is an implementation of a Neural Turing Machine (NTM) with the ability to rapidly assimilate new data in its memory and use this data to make accurate predictions. In models such as MANN, the input data samples and their appropriate labels from the previous step are bound together in the same memory locations. This often leads to memory interference when performing a task, as these models have to retrieve a feature of an input from a certain memory location and read only the label information bound to that location. In this paper, we address this issue by presenting a more robust MANN. We revisit the idea of meta-learning and propose a new memory augmented neural network that explicitly splits the external memory into feature and label memories. The feature memory stores the features of input data samples, and the label memory stores their labels. Hence, when predicting the label of a given input, our model uses its feature memory unit as a reference to extract the stored feature of the input, and based on that feature, it retrieves the label information of the input from the label memory unit. In order for the network to function in this framework, we design a new memory-writing module that encodes label information into the label memory in accordance with the meta-learning task structure. We demonstrate that our model outperforms MANN by a large margin in supervised one-shot classification tasks using the Omniglot and MNIST datasets.
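The read path described above (match the input against the feature memory, then fetch the label from the label memory) can be sketched roughly as follows. This is a simplified illustration, not the paper's model: the abstract does not specify the addressing scheme, so cosine similarity with a softmax is assumed here, and the names `read_label` and `sharpness` are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def read_label(query, feature_memory, label_memory, sharpness=10.0):
    """Address by feature, read by label.

    Slot i of `feature_memory` holds a stored feature vector and slot i of
    `label_memory` holds the matching label vector. `sharpness` is an
    assumed scaling factor that makes the read weights more peaked.
    """
    # Read weights come only from the feature memory.
    weights = softmax([sharpness * cosine(query, f) for f in feature_memory])
    # The same weights are then applied to the separate label memory.
    n_classes = len(label_memory[0])
    return [
        sum(w * row[c] for w, row in zip(weights, label_memory))
        for c in range(n_classes)
    ]


if __name__ == "__main__":
    features = [[1.0, 0.0], [0.0, 1.0]]   # two stored samples
    labels = [[1.0, 0.0], [0.0, 1.0]]     # their one-hot labels
    print(read_label([0.9, 0.1], features, labels))
```

Keeping the two memories separate means the similarity computation never touches label content, which is the interference the abstract attributes to storing features and labels in the same locations.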
