Davide Zagami

Categorizing Wireheading in Partially Embedded Agents

Jun 21, 2019

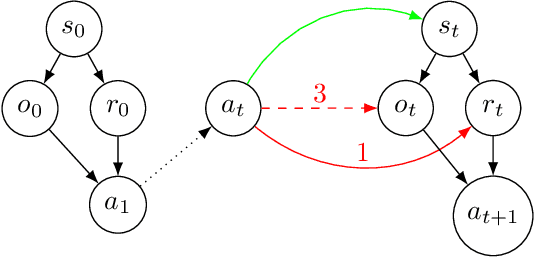

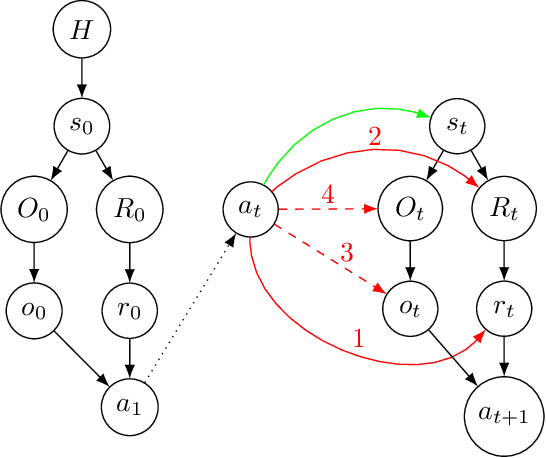

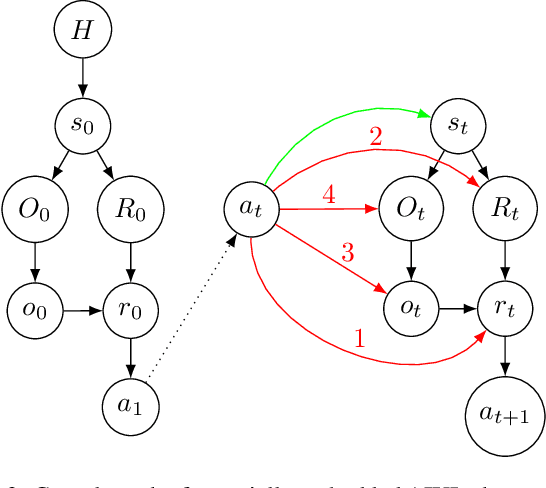

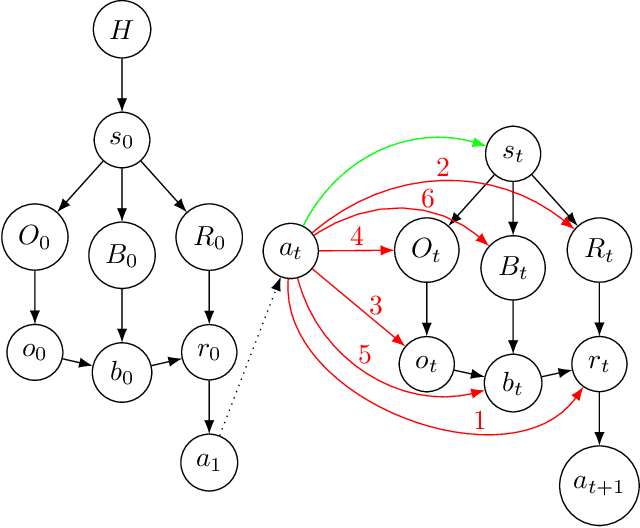

Abstract:$\textit{Embedded agents}$ are not explicitly separated from their environment, lacking clear I/O channels. Such agents can reason about and modify their internal parts, which they are incentivized to shortcut or $\textit{wirehead}$ in order to achieve the maximal reward. In this paper, we provide a taxonomy of ways by which wireheading can occur, followed by a definition of wirehead-vulnerable agents. Starting from the fully dualistic universal agent AIXI, we introduce a spectrum of partially embedded agents and identify wireheading opportunities that such agents can exploit, experimentally demonstrating the results with the GRL simulation platform AIXIjs. We contextualize wireheading in the broader class of all misalignment problems - where the goals of the agent conflict with the goals of the human designer - and conjecture that the only other possible type of misalignment is specification gaming. Motivated by this taxonomy, we define wirehead-vulnerable agents as embedded agents that choose to behave differently from fully dualistic agents lacking access to their internal parts.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge