David Vorchheimer

Deciphering Heartbeat Signatures: A Vision Transformer Approach to Explainable Atrial Fibrillation Detection from ECG Signals

Feb 12, 2024

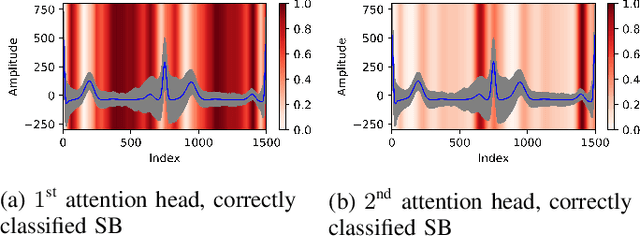

Abstract: Remote patient monitoring based on wearable single-lead electrocardiogram (ECG) devices has significant potential for enabling the early detection of heart disease, especially in combination with artificial intelligence (AI) approaches for automated heart disease detection. There have been prior studies applying AI approaches based on deep learning for heart disease detection. However, these models are yet to be widely accepted as a reliable aid for clinical diagnostics, in part due to the current black-box perception surrounding many AI algorithms. In particular, there is a need to identify the key features of the ECG signal that contribute toward making an accurate diagnosis, thereby enhancing the interpretability of the model. In the present study, we develop a vision transformer approach to identify atrial fibrillation based on single-lead ECG data. A residual network (ResNet) approach is also developed for comparison with the vision transformer approach. These models are applied to the Chapman-Shaoxing dataset to classify atrial fibrillation, as well as another common arrhythmia, sinus bradycardia, and normal sinus rhythm heartbeats. The models enable the identification of the key regions of the heartbeat that determine the resulting classification, and highlight the importance of P-waves and T-waves, as well as heartbeat duration and signal amplitude, in distinguishing normal sinus rhythm from atrial fibrillation and sinus bradycardia.
