David Atiena

Mathematical model of parameters relevance in adaptive level-crossing sampling for electrocardiogram signals

Jan 18, 2025

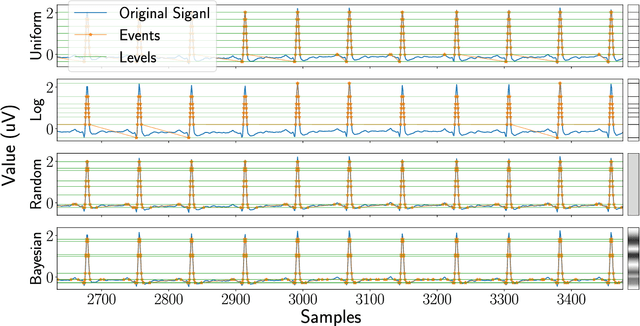

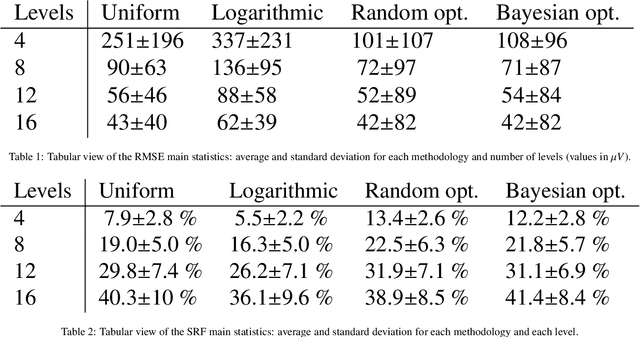

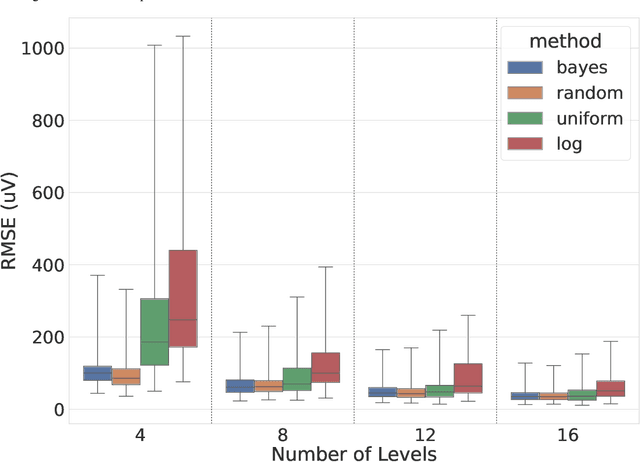

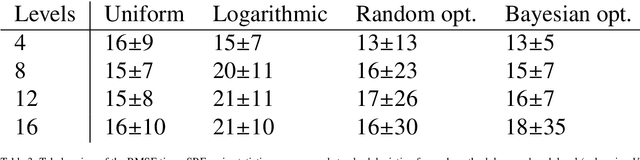

Abstract: Digital acquisition of bio-signals has been largely dominated by uniform time sampling following the Nyquist theorem. In recent years, however, new approaches have emerged that sample a signal only when certain events occur. Currently, the most prominent of these is level-crossing (LC) sampling. Conventional level-crossing analog-to-digital converters (LC-ADCs) are often designed with statically defined, uniformly spaced levels. However, a different positioning of the levels, optimized for bio-signal monitoring, can potentially lead to better-performing solutions. In this work, we compare multiple LC-level definitions, including statically defined configurations (uniform and logarithmic) and optimization-driven designs (randomized and Bayesian optimization), assessing their ability to maintain signal fidelity while minimizing the sampling rate. We evaluate these methodologies using the root mean square error (RMSE), the sampling reduction factor (SRF), a metric of the sampling compression ratio, and error-per-event metrics to gauge the trade-offs between signal fidelity and data compression. Our findings reveal that optimization-driven LC sampling, particularly with Bayesian methods, achieves a lower RMSE than static configurations without substantially affecting the error per event, but at the cost of an increased sampling rate.
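To make the quantities in the abstract concrete, the following is a minimal Python sketch of level-crossing sampling with uniformly spaced levels, together with RMSE and SRF. It is an illustration under stated assumptions, not the authors' implementation: crossings are detected on an already uniformly sampled toy sine wave (a real LC-ADC detects them in continuous time), the reconstruction uses linear interpolation, the first and last samples are kept as anchors, and SRF is assumed to be the ratio of original samples to retained events. All function names are hypothetical.

```python
import math
from bisect import bisect_right

def uniform_levels(lo, hi, n):
    """Return n evenly spaced levels spanning [lo, hi]."""
    step = (hi - lo) / (n - 1)
    return [lo + i * step for i in range(n)]

def lc_sample(signal, levels):
    """Return (index, value) events where the signal enters a new level band.

    Assumption: `signal` is a dense uniform recording, so a crossing is
    detected when consecutive samples fall into different bands.
    """
    events = [(0, signal[0])]  # opening anchor (assumption)
    band = bisect_right(levels, signal[0])
    for i in range(1, len(signal)):
        new_band = bisect_right(levels, signal[i])
        if new_band != band:
            events.append((i, signal[i]))
            band = new_band
    if events[-1][0] != len(signal) - 1:
        events.append((len(signal) - 1, signal[-1]))  # closing anchor
    return events

def reconstruct(events, n):
    """Rebuild an n-sample signal by linear interpolation between events."""
    out = [0.0] * n
    out[events[0][0]] = events[0][1]
    for (i0, v0), (i1, v1) in zip(events, events[1:]):
        for i in range(i0, i1 + 1):
            t = (i - i0) / (i1 - i0)
            out[i] = v0 + t * (v1 - v0)
    return out

def rmse(a, b):
    """Root mean square error between two equal-length sequences."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))

def srf(n_original, n_events):
    """Sampling reduction factor, assumed here to be original / retained."""
    return n_original / n_events

# Toy stand-in for a bio-signal: one period of a sine wave, 200 samples.
signal = [math.sin(2 * math.pi * i / 200) for i in range(200)]
levels = uniform_levels(-1.0, 1.0, 9)

events = lc_sample(signal, levels)
approx = reconstruct(events, len(signal))
print("events:", len(events))
print("SRF:", srf(len(signal), len(events)))
print("RMSE:", rmse(signal, approx))
```

Swapping `uniform_levels` for a different level placement (for example logarithmic spacing, or levels proposed by an optimizer) changes only the `levels` list, which is what makes the fidelity versus sampling-rate trade-off easy to compare across configurations in a sketch like this.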
