Dario Stein

iHub, Radboud University Nijmegen

Overdrawing Urns using Categories of Signed Probabilities

Dec 14, 2023

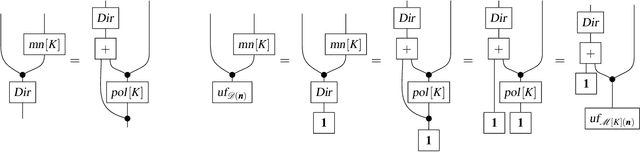

Abstract: A basic experiment in probability theory is drawing without replacement from an urn filled with multiple balls of different colours. Clearly, it is physically impossible to overdraw, that is, to draw more balls from the urn than it contains. This paper demonstrates that overdrawing does make sense mathematically, once we allow signed distributions with negative probabilities. A new (conservative) extension of the familiar hypergeometric ('draw-and-delete') distribution is introduced that allows draws of arbitrary sizes, including overdraws. The underlying theory makes use of the dual basis functions of the Bernstein polynomials, which play a prominent role in computer graphics. Negative probabilities are treated systematically in the framework of categorical probability, and the central role of data structures such as multisets and monads is emphasised.

* In Proceedings ACT 2023, arXiv:2312.08138
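
For orientation, the classical hypergeometric ('draw-and-delete') distribution mentioned in the abstract can be sketched as follows. This is only the familiar distribution for draws that fit in the urn; it does not reproduce the paper's signed extension to overdraws. The function name `hypergeometric` and the dictionary encoding of urns are assumptions made for this illustration.

```python
from fractions import Fraction
from itertools import product
from math import comb


def hypergeometric(urn, k):
    """Classical distribution over draws of size k without replacement.

    `urn` maps each colour to its number of balls. Returns the sorted colour
    list and a dict mapping count tuples (one count per colour) to exact
    probabilities. Overdraws are rejected here: the classical formula does
    not cover them.
    """
    total = sum(urn.values())
    if k > total:
        raise ValueError("overdraw: classical formula undefined for k > urn size")
    colours = sorted(urn)
    dist = {}
    # Enumerate every way of splitting the k drawn balls across the colours.
    for counts in product(*(range(urn[c] + 1) for c in colours)):
        if sum(counts) != k:
            continue
        ways = 1
        for colour, n in zip(colours, counts):
            ways *= comb(urn[colour], n)
        dist[counts] = Fraction(ways, comb(total, k))
    return colours, dist


colours, dist = hypergeometric({"red": 2, "blue": 1}, 2)
```

Exact fractions are used so that the probabilities can be checked to sum to one without rounding error.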

Pearl's and Jeffrey's Update as Modes of Learning in Probabilistic Programming

Sep 13, 2023

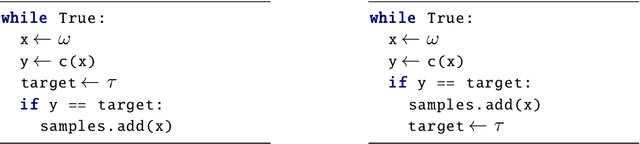

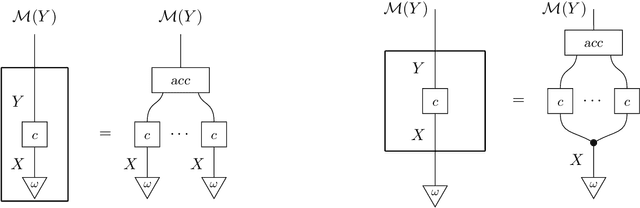

Abstract: The concept of updating a probability distribution in the light of new evidence lies at the heart of statistics and machine learning. Pearl's and Jeffrey's rules are two natural update mechanisms which lead to different outcomes, yet the similarities and differences remain mysterious. This paper clarifies their relationship in several ways: via separate descriptions of the two update mechanisms in terms of probabilistic programs and sampling semantics, and via different notions of likelihood (for Pearl and for Jeffrey). Moreover, it is shown that Jeffrey's update rule arises via variational inference. In terms of categorical probability theory, this amounts to an analysis of the situation in terms of the behaviour of the multiset functor, extended to the Kleisli category of the distribution monad.
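
To make the contrast concrete, here is a minimal sketch of the two update rules on finite distributions, using their standard textbook formulations rather than the probabilistic-program descriptions developed in the paper. The names `pearl_update` and `jeffrey_update`, the dictionary encoding, and the disease/test numbers are all assumptions chosen for illustration.

```python
from fractions import Fraction as F


def pearl_update(prior, channel, likelihood):
    """Pearl: reweight each state x by the expected likelihood of the
    evidence under channel[x], then normalise."""
    weights = {
        x: p * sum(channel[x][y] * likelihood[y] for y in likelihood)
        for x, p in prior.items()
    }
    total = sum(weights.values())
    return {x: w / total for x, w in weights.items()}


def jeffrey_update(prior, channel, target):
    """Jeffrey: mix the posteriors for each point observation y,
    weighted by the target distribution on observations."""
    result = {x: F(0) for x in prior}
    for y, t in target.items():
        if t == 0:
            continue  # observations with zero target weight contribute nothing
        point = {z: F(int(z == y)) for z in target}
        posterior = pearl_update(prior, channel, point)
        for x in prior:
            result[x] += t * posterior[x]
    return result


# Hypothetical example: a disease prior and a noisy test.
prior = {"disease": F(1, 10), "healthy": F(9, 10)}
channel = {
    "disease": {"pos": F(9, 10), "neg": F(1, 10)},
    "healthy": {"pos": F(1, 10), "neg": F(9, 10)},
}
evidence = {"pos": F(4, 5), "neg": F(1, 5)}  # "80% sure the test was positive"

pearl = pearl_update(prior, channel, evidence)
jeffrey = jeffrey_update(prior, channel, evidence)
```

The same evidence is read as a likelihood by `pearl_update` and as a target distribution by `jeffrey_update`, which is why the two posteriors differ.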

Compositional Semantics for Probabilistic Programs with Exact Conditioning

Jan 27, 2021

Abstract: We define a probabilistic programming language for Gaussian random variables with a first-class exact conditioning construct. We give operational, denotational and equational semantics for this language, establishing convenient properties like exchangeability of conditions. Conditioning on equality of continuous random variables is nontrivial, as the exact observation may have probability zero; this is Borel's paradox. Using categorical formulations of conditional probability, we show that the good properties of our language are not particular to Gaussians, but can be derived from universal properties, thus generalizing to wider settings. We define the Cond construction, which internalizes conditioning as a morphism, providing general compositional semantics for probabilistic programming with exact conditioning.
