Danny Tsang

FedFA: Federated Learning with Feature Anchors to Align Feature and Classifier for Heterogeneous Data

Nov 17, 2022

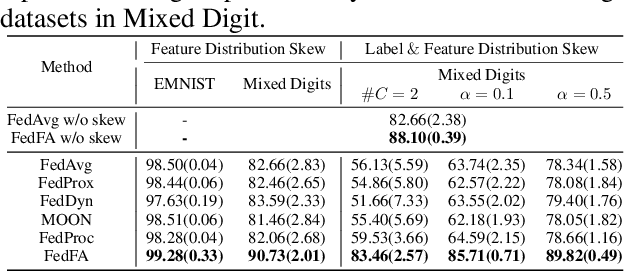

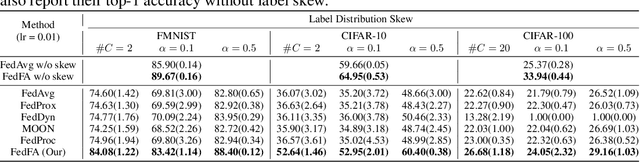

Abstract: Federated learning allows multiple clients to collaboratively train a model without exchanging their data, thus preserving data privacy. Unfortunately, it suffers significant performance degradation when data are heterogeneous across clients. Common solutions in local training involve designing a specific auxiliary loss to regularize weight divergence or feature inconsistency. However, we discover that these approaches fall short of the expected performance because they ignore a vicious cycle between classifier divergence and feature mapping inconsistency across clients, in which client models are updated in inconsistent feature spaces with diverged classifiers. We then propose a simple yet effective framework named Federated learning with Feature Anchors (FedFA) to align the feature mappings and calibrate the classifiers across clients during local training, so that client models are updated in a shared feature space with consistent classifiers. We demonstrate that this modification yields similar classifiers and a virtuous cycle between feature consistency and classifier similarity across clients. Extensive experiments show that FedFA significantly outperforms state-of-the-art federated learning algorithms on various image classification datasets under label and feature distribution skews.
