Daniel Recoskie

Gradient-based Filter Design for the Dual-tree Wavelet Transform

Jun 04, 2018

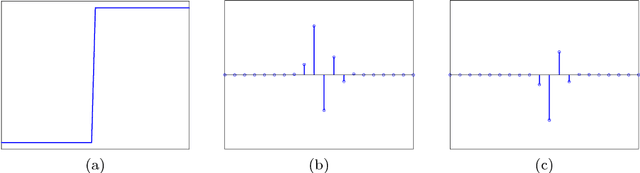

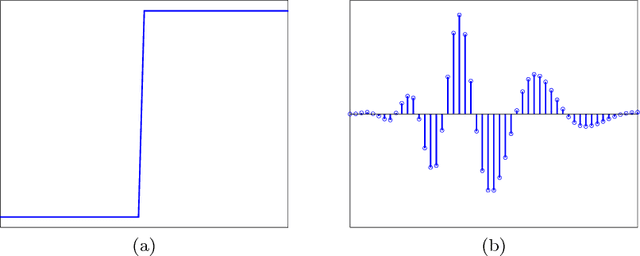

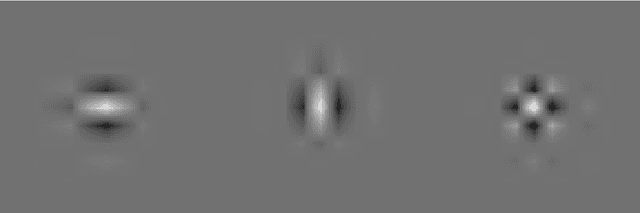

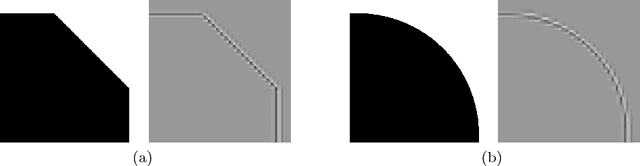

Abstract: The wavelet transform has seen success when incorporated into neural network architectures, such as wavelet scattering networks. More recently, it has been shown that the dual-tree complex wavelet transform can provide better representations than the standard transform. With this in mind, we extend our previous method for learning filters for the 1D and 2D wavelet transforms into the dual-tree domain. We show that with few modifications to our original model, we can learn directional filters that leverage the properties of the dual-tree wavelet transform.
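The abstract does not spell out the dual-tree construction, so here is a minimal, dependency-free sketch of its first level, assuming Kingsbury's arrangement as we understand it: two real DWT trees whose level-1 filters are offset by one sample, with tree a read as the real part and tree b as the imaginary part of a complex coefficient. The Haar filter is only a placeholder for learned filters, deeper levels (which need approximately half-sample-delay filters) are not modeled, and all function names are hypothetical. The sketch shows one commonly cited benefit: for any filter, the total detail energy of the two trees is unchanged by a one-sample circular shift of the input, while the energy of a single tree is not.

```python
def highpass(h):
    # Quadrature-mirror relation g[n] = (-1)^n * h[L-1-n] (assumed orthogonal design).
    L = len(h)
    return [(-1) ** n * h[L - 1 - n] for n in range(L)]


def detail_coeffs(x, h, offset=0):
    # Stride-2 highpass correlation with periodic extension, starting at `offset`.
    g = highpass(h)
    N = len(x)
    return [sum(g[n] * x[(2 * k + offset + n) % N] for n in range(len(g)))
            for k in range(N // 2)]


def dual_tree_level1(x, h):
    # Tree a starts at even samples, tree b is delayed by one sample;
    # the pair is combined into one complex coefficient per position.
    real = detail_coeffs(x, h, offset=0)
    imag = detail_coeffs(x, h, offset=1)
    return [complex(r, i) for r, i in zip(real, imag)]


def energy(coeffs):
    # Sum of squared magnitudes (works for real and complex coefficients).
    return sum(abs(c) ** 2 for c in coeffs)


x = [1.0, 1.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
x_shift = x[1:] + x[:1]      # circular one-sample shift
h = [2 ** -0.5, 2 ** -0.5]   # Haar lowpass, a placeholder for learned filters
print(energy(detail_coeffs(x, h)), energy(detail_coeffs(x_shift, h)))
print(energy(dual_tree_level1(x, h)), energy(dual_tree_level1(x_shift, h)))
```

For this input the single-tree detail energy moves from 0 to about 1 under the shift, while the dual-tree energy stays at about 1 in both cases: shifting by one sample turns tree a's coefficients into tree b's, and tree b's into tree a's shifted by one position.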

Learning Sparse Wavelet Representations

Feb 08, 2018

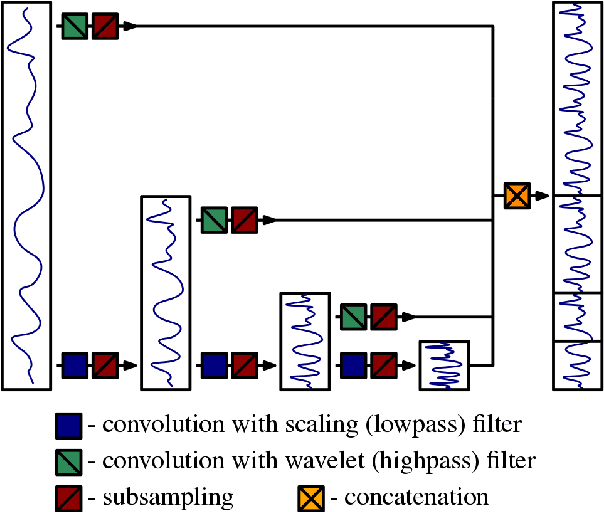

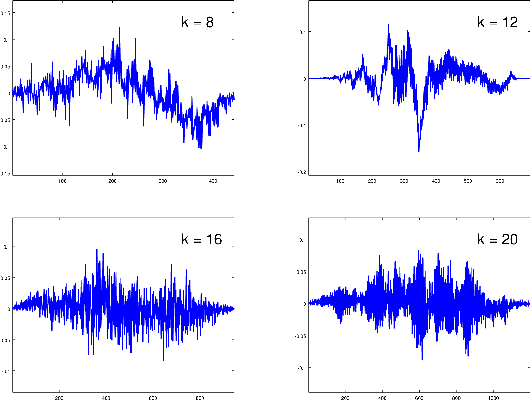

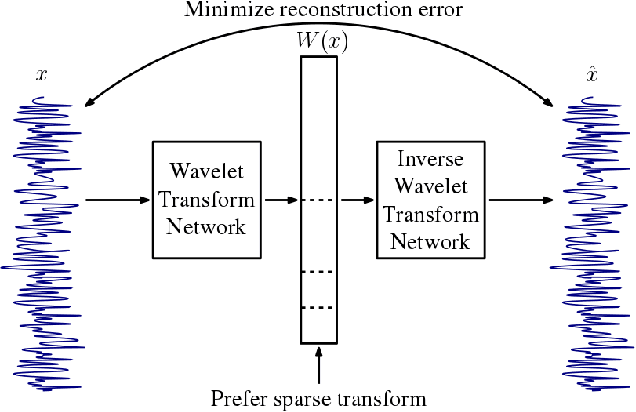

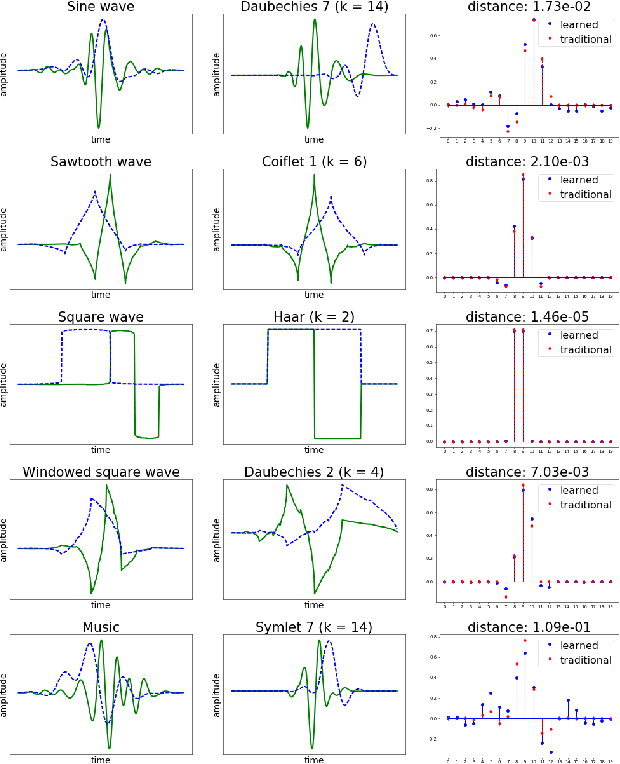

Abstract: In this work we propose a method for learning wavelet filters directly from data. We accomplish this by framing the discrete wavelet transform as a modified convolutional neural network. We introduce an autoencoder wavelet transform network that is trained using gradient descent. We show that the model is capable of learning structured wavelet filters from synthetic and real data. The learned wavelets are shown to be similar to traditional wavelets that are derived using Fourier methods. Our method is simple to implement and easily incorporated into neural network architectures. A major advantage of our model is that it can learn from raw audio data.
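The idea of a DWT as a convolutional autoencoder can be illustrated with a minimal, dependency-free Python sketch. It rests on assumptions not stated in the abstract: a single decomposition level, periodic boundary handling, a highpass filter tied to the learned lowpass filter by the quadrature-mirror relation, a loss of reconstruction error plus an L1 sparsity penalty on the detail coefficients (the 0.1 weight is arbitrary), and central finite differences standing in for automatic differentiation. All names are hypothetical. Analysis is a stride-2 correlation (a strided convolution layer), and synthesis is its exact transpose (a transposed convolution), which makes the pair an encoder and decoder.

```python
import random


def highpass_from_lowpass(h):
    # Quadrature-mirror relation g[n] = (-1)^n * h[L-1-n] (assumed orthogonal design).
    L = len(h)
    return [(-1) ** n * h[L - 1 - n] for n in range(L)]


def analysis(x, h):
    # Encoder: one DWT level as stride-2 correlation with periodic extension.
    g = highpass_from_lowpass(h)
    N = len(x)
    approx = [sum(h[n] * x[(2 * k + n) % N] for n in range(len(h))) for k in range(N // 2)]
    detail = [sum(g[n] * x[(2 * k + n) % N] for n in range(len(g))) for k in range(N // 2)]
    return approx, detail


def synthesis(approx, detail, h):
    # Decoder: transpose of `analysis` (upsample by 2, accumulate the same filters).
    g = highpass_from_lowpass(h)
    N = 2 * len(approx)
    y = [0.0] * N
    for k in range(len(approx)):
        for n in range(len(h)):
            y[(2 * k + n) % N] += approx[k] * h[n] + detail[k] * g[n]
    return y


def loss(h, signals, sparsity=0.1):
    # Mean reconstruction error plus an L1 penalty on the detail coefficients.
    total = 0.0
    for x in signals:
        a, d = analysis(x, h)
        y = synthesis(a, d, h)
        total += sum((xi - yi) ** 2 for xi, yi in zip(x, y)) / len(x)
        total += sparsity * sum(abs(di) for di in d) / len(d)
    return total / len(signals)


def train(h, signals, lr=0.02, steps=200, eps=1e-6):
    # Plain gradient descent on the lowpass taps, using central finite differences.
    h = list(h)
    for _ in range(steps):
        grad = []
        for i in range(len(h)):
            hp, hm = h[:], h[:]
            hp[i] += eps
            hm[i] -= eps
            grad.append((loss(hp, signals) - loss(hm, signals)) / (2 * eps))
        h = [hi - lr * gi for hi, gi in zip(h, grad)]
    return h


def piecewise_constant(rng, n=16, seg=4):
    # Synthetic training signal: constant segments of length `seg`.
    levels = [rng.uniform(-1.0, 1.0) for _ in range(n // seg)]
    return [levels[i // seg] for i in range(n)]


rng = random.Random(0)
signals = [piecewise_constant(rng) for _ in range(6)]
h_init = [0.3, 0.9, -0.2, 0.1]
h_learned = train(h_init, signals)
print("loss before:", loss(h_init, signals))
print("loss after: ", loss(h_learned, signals))
print("learned lowpass:", [round(v, 3) for v in h_learned])
```

Tying the highpass filter to the lowpass filter halves the number of free parameters and, when the learned filter is orthonormal, makes the decoder an exact inverse of the encoder. In a real network, a framework's automatic differentiation would replace the finite differences.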
