Daniel Panangian

PolyRoof: Precision Roof Polygonization in Urban Residential Building with Graph Neural Networks

Mar 13, 2025

Abstract: The growing demand for detailed building roof data has driven the development of automated extraction methods to overcome the inefficiencies of traditional approaches, particularly in handling complex variations in building geometries. Re:PolyWorld, which integrates point detection with graph neural networks, presents a promising solution for reconstructing high-detail building roof vector data. This study enhances Re:PolyWorld's performance on complex urban residential structures by incorporating attention-based backbones and additional area segmentation loss. Despite dataset limitations, our experiments demonstrated improvements in point position accuracy (1.33 pixels) and line distance accuracy (14.39 pixels), along with a notable increase in the reconstruction score to 91.99%. These findings highlight the potential of advanced neural network architectures in addressing the challenges of complex urban residential geometries.

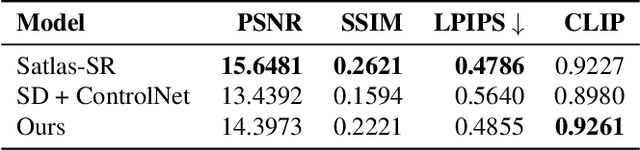

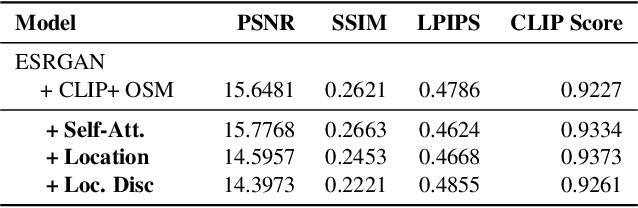

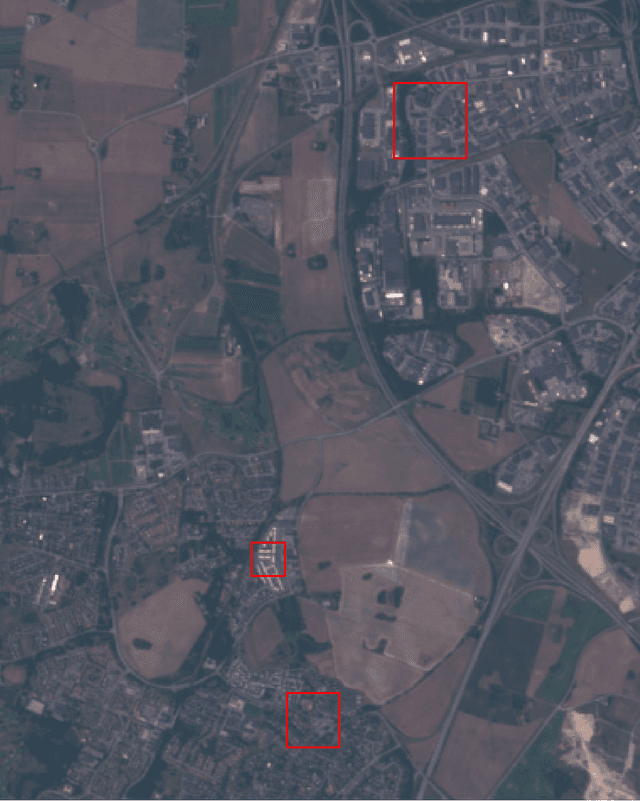

Can Location Embeddings Enhance Super-Resolution of Satellite Imagery?

Jan 27, 2025

Abstract: Publicly available satellite imagery, such as Sentinel-2, often lacks the spatial resolution required for accurate analysis of remote sensing tasks including urban planning and disaster response. Current super-resolution techniques are typically trained on limited datasets, leading to poor generalization across diverse geographic regions. In this work, we propose a novel super-resolution framework that enhances generalization by incorporating geographic context through location embeddings. Our framework employs Generative Adversarial Networks (GANs) and incorporates techniques from diffusion models to enhance image quality. Furthermore, we address tiling artifacts by integrating information from neighboring images, enabling the generation of seamless, high-resolution outputs. We demonstrate the effectiveness of our method on the building segmentation task, showing significant improvements over state-of-the-art methods and highlighting its potential for real-world applications.

Dfilled: Repurposing Edge-Enhancing Diffusion for Guided DSM Void Filling

Jan 26, 2025

Abstract: Digital Surface Models (DSMs) are essential for accurately representing Earth's topography in geospatial analyses. DSMs capture detailed elevations of natural and manmade features, crucial for applications like urban planning, vegetation studies, and 3D reconstruction. However, DSMs derived from stereo satellite imagery often contain voids or missing data due to occlusions, shadows, and low-signal areas. Previous studies have primarily focused on void filling for Digital Elevation Models (DEMs) and Digital Terrain Models (DTMs), employing methods such as inverse distance weighting (IDW), kriging, and spline interpolation. While effective for simpler terrains, these approaches often fail to handle the intricate structures present in DSMs. To overcome these limitations, we introduce Dfilled, a guided DSM void filling method that leverages optical remote sensing images through edge-enhancing diffusion. Dfilled repurposes deep anisotropic diffusion models, which were originally designed for super-resolution tasks, to inpaint DSMs. Additionally, we utilize Perlin noise to create inpainting masks that mimic natural void patterns in DSMs. Experimental evaluations demonstrate that Dfilled surpasses traditional interpolation methods and deep learning approaches in DSM void filling tasks. Both quantitative and qualitative assessments highlight the method's ability to manage complex features and deliver accurate, visually coherent results.

SyntStereo2Real: Edge-Aware GAN for Remote Sensing Image-to-Image Translation while Maintaining Stereo Constraint

Apr 14, 2024

Abstract: In the field of remote sensing, the scarcity of stereo-matched data, and particularly the lack of accurate ground truth data, often hinders the training of deep neural networks. Using synthetically generated images as an alternative alleviates this problem but suffers from poor domain generalization. Unifying the capabilities of image-to-image translation and stereo-matching presents an effective way to address this issue. Current methods combine two networks, an unpaired image-to-image translation network and a stereo-matching network, and optimize them jointly. We propose an edge-aware GAN-based network that effectively tackles both tasks simultaneously. We obtain edge maps of the input images with the Sobel operator and use them as an additional input to the encoder in the generator to enforce geometric consistency during translation. We additionally include a warping loss calculated from the translated images to maintain stereo consistency. We demonstrate that our model produces results that are qualitatively and quantitatively superior to those of existing models, and that its applicability extends to diverse domains, including autonomous driving.

Real-GDSR: Real-World Guided DSM Super-Resolution via Edge-Enhancing Residual Network

Apr 05, 2024

Abstract: A low-resolution digital surface model (DSM) has distinctive attributes shaped by noise, sensor limitations, and data acquisition conditions, which cannot be replicated using simple interpolation methods like bicubic. As a result, super-resolution models trained on synthetic data do not perform effectively on real data. Training a model on real low- and high-resolution DSM pairs is also a challenge because of the lack of information. On the other hand, other imaging modalities of the same scene can be used to enrich the information needed for large-scale super-resolution. In this work, we introduce a novel methodology to address the intricacies of real-world DSM super-resolution, named REAL-GDSR, breaking down this ill-posed problem into two steps. The first step uses a residual local refinement network. This approach departs from conventional methods, which are trained to directly predict height values instead of the differences (residuals) and which utilize large receptive fields in their networks. The second step introduces a diffusion-based technique that enhances the results on a global scale, with a primary focus on smoothing and edge preservation. Our experiments underscore the effectiveness of the proposed method. We conduct a comprehensive evaluation, comparing it to recent state-of-the-art techniques in the domain of real-world DSM super-resolution (SR). Our approach consistently outperforms these existing methods, as evidenced through qualitative and quantitative assessments.
