Craig Rieger

RX-ADS: Interpretable Anomaly Detection using Adversarial ML for Electric Vehicle CAN data

Sep 05, 2022

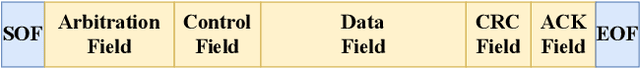

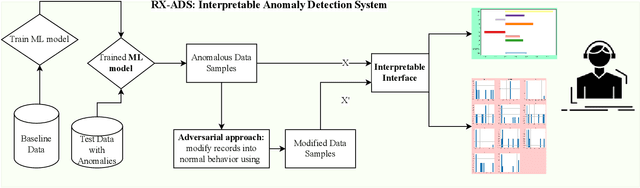

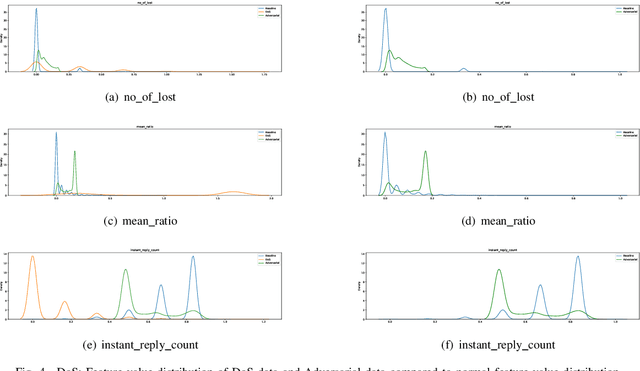

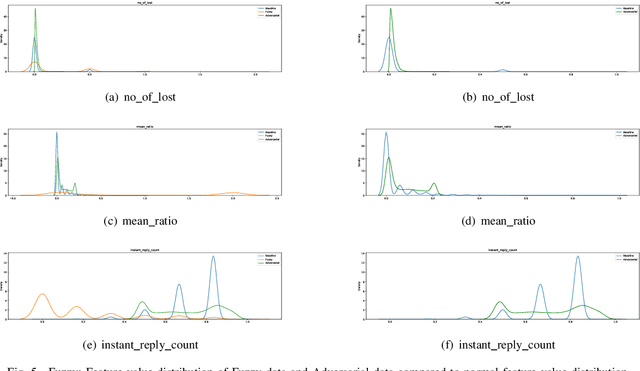

Abstract: Recent years have brought considerable advancements in Electric Vehicles (EVs) and associated infrastructures/communications. Intrusion Detection Systems (IDS) are widely deployed for anomaly detection in such critical infrastructures. This paper presents an Interpretable Anomaly Detection System (RX-ADS) for intrusion detection in CAN protocol communication in EVs. Contributions include: 1) a window-based feature extraction method; 2) a deep Autoencoder-based anomaly detection method; and 3) an adversarial machine learning-based explanation generation methodology. The presented approach was tested on two benchmark CAN datasets: OTIDS and Car Hacking. The anomaly detection performance of RX-ADS was compared against the state-of-the-art approaches on these datasets: HIDS and GIDS. RX-ADS achieved performance comparable to the HIDS approach on the OTIDS dataset and outperformed both the HIDS and GIDS approaches on the Car Hacking dataset. Further, the proposed approach was able to generate explanations for detected abnormal behaviors arising from various intrusions. These explanations were later validated against information used by domain experts to detect anomalies. Other advantages of RX-ADS include: 1) the method can be trained on unlabeled data; 2) explanations help experts understand anomalies and perform root cause analysis, and also help with AI model debugging and diagnostics, ultimately improving user trust in AI systems.
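The abstract does not say which features RX-ADS extracts from each window, so the following is only a minimal sketch of what window-based feature extraction over a CAN message stream might look like. The function name `window_features`, the `(timestamp, CAN ID)` message layout, the non-overlapping windows, and the three summary features are all assumptions made for illustration and are not taken from the paper.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Assumed message layout: (timestamp in seconds, CAN arbitration ID).
Message = Tuple[float, int]


def window_features(messages: List[Message], window_size: int) -> List[Dict[str, float]]:
    """Split a message stream into non-overlapping windows and summarize each one.

    The features are illustrative only; they are not the RX-ADS feature set.
    """
    features = []
    # Trailing messages that do not fill a whole window are dropped.
    for start in range(0, len(messages) - window_size + 1, window_size):
        window = messages[start:start + window_size]
        timestamps = [t for t, _ in window]
        counts = Counter(can_id for _, can_id in window)
        gaps = [b - a for a, b in zip(timestamps, timestamps[1:])]
        features.append({
            # Number of different CAN IDs seen in the window.
            "distinct_ids": float(len(counts)),
            # Share of the window taken by the most frequent CAN ID.
            "max_id_share": max(counts.values()) / window_size,
            # Mean time between consecutive messages.
            "mean_gap": sum(gaps) / len(gaps) if gaps else 0.0,
        })
    return features
```

Feature vectors of this kind could then be fed to an anomaly detector such as an autoencoder trained on normal traffic, where windows with unusually high reconstruction error are flagged. A window dominated by a single ID, for example, would show a high `max_id_share`.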

Self-Supervised and Interpretable Anomaly Detection using Network Transformers

Feb 25, 2022

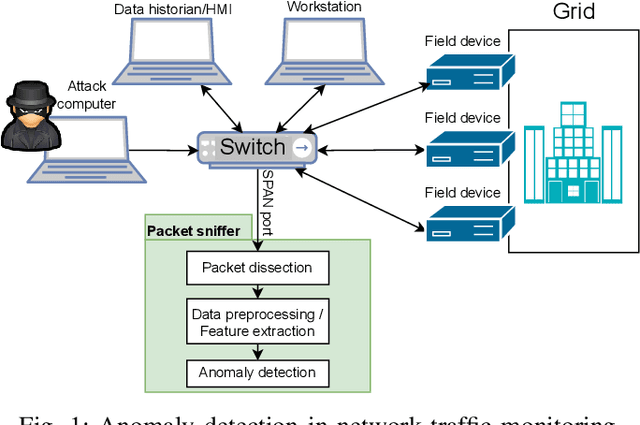

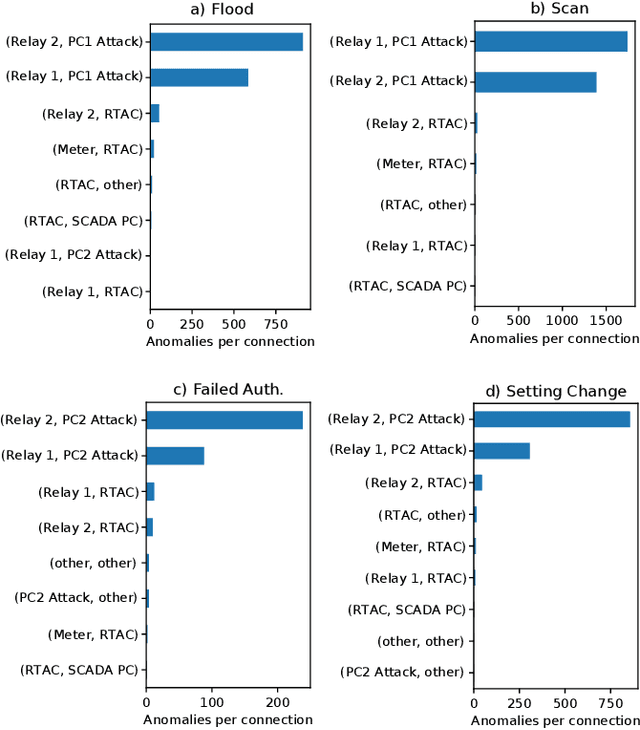

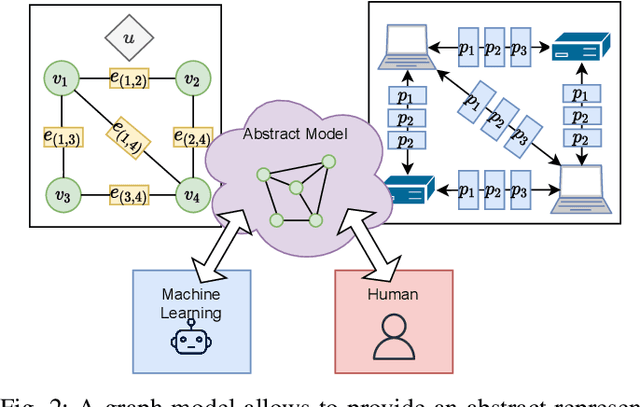

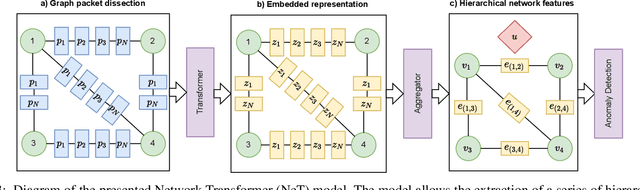

Abstract: Monitoring traffic in computer networks is one of the core approaches for defending critical infrastructure against cyber attacks. Machine Learning (ML) and Deep Neural Networks (DNNs) have been proposed in the past as tools to identify anomalies in computer networks. Although detecting these anomalies provides an indication of an attack, detection alone does not give a user enough information to understand the anomaly. The black-box nature of off-the-shelf ML models prevents extracting the information needed to isolate the source of the fault/attack and take corrective measures. In this paper, we introduce the Network Transformer (NeT), a DNN model for anomaly detection that incorporates the graph structure of the communication network in order to improve interpretability. The presented approach has the following advantages: 1) enhanced interpretability through incorporating the graph structure of computer networks; 2) a hierarchical set of features that enables analysis at different levels of granularity; 3) self-supervised training that does not require labeled data. The presented approach was tested by evaluating the successful detection of anomalies in an Industrial Control System (ICS). The presented approach successfully identified anomalies, the devices affected, and the specific connections causing the anomalies, providing a data-driven hierarchical approach to analyze the behavior of a cyber network.
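To make the idea of analysis at different levels of granularity concrete, here is a small sketch that rolls per-connection anomaly scores up to device level and then to network level. It is not the NeT model: the function name `drill_down`, the score dictionary layout, and the choice of `max` as the aggregation rule are assumptions made purely for illustration.

```python
from typing import Dict, Tuple

# Assumed input: an anomaly score per directed connection (source, destination).
ConnectionScores = Dict[Tuple[str, str], float]


def drill_down(connection_scores: ConnectionScores) -> Tuple[float, Dict[str, float]]:
    """Aggregate connection-level scores to device level and network level.

    A device's score is the highest score among the connections it takes part in,
    and the network score is the highest device score. Both rules are assumptions.
    """
    device_scores: Dict[str, float] = {}
    for (src, dst), score in connection_scores.items():
        for device in (src, dst):
            device_scores[device] = max(device_scores.get(device, 0.0), score)
    network_score = max(device_scores.values(), default=0.0)
    return network_score, device_scores
```

With scores organized this way, an analyst could start from the network-level value, look up which devices carry the highest scores, and then inspect the individual connections of those devices.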
