Cosmin G. Petra

Convergence Rates of Constrained Expected Improvement

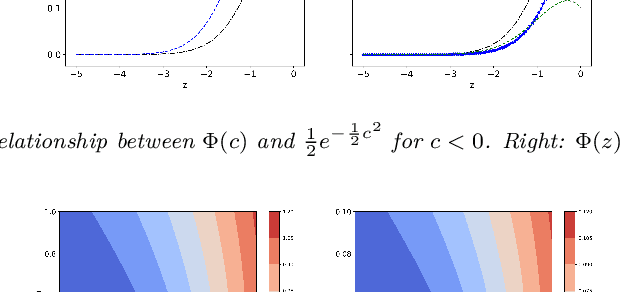

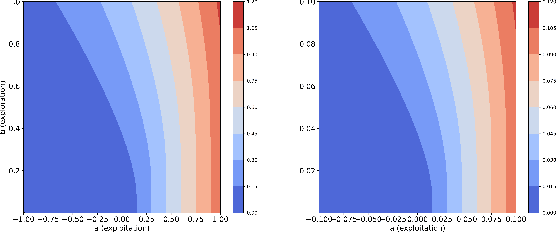

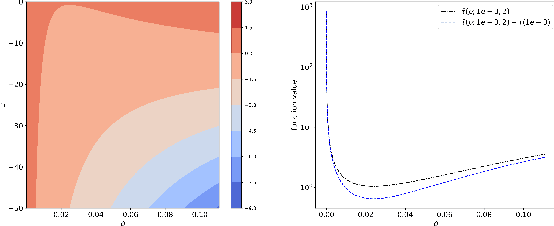

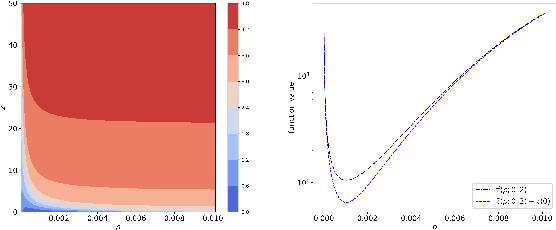

May 16, 2025

Abstract: Constrained Bayesian optimization (CBO) methods have seen significant success in black-box optimization with constraints, and one of the most commonly used CBO methods is the constrained expected improvement (CEI) algorithm. CEI is a natural extension of expected improvement (EI) to problems with constraints. However, the theoretical convergence rate of CEI has not been established. In this work, we study the convergence rate of CEI by analyzing its simple regret upper bound. First, we show that when the objective function $f$ and constraint function $c$ are each assumed to lie in a reproducing kernel Hilbert space (RKHS), CEI achieves convergence rates of $\mathcal{O}\left(t^{-\frac{1}{2}}\log^{\frac{d+1}{2}}(t)\right)$ and $\mathcal{O}\left(t^{\frac{-\nu}{2\nu+d}} \log^{\frac{\nu}{2\nu+d}}(t)\right)$ for the commonly used squared exponential and Matérn kernels, respectively. Second, we show that when $f$ and $c$ are assumed to be sampled from Gaussian processes (GPs), CEI achieves the same convergence rates with high probability. Numerical experiments are performed to validate the theoretical analysis.
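For readers unfamiliar with CEI, the following is a minimal sketch of one common textbook form of the acquisition function: the closed-form EI under a Gaussian posterior, multiplied by the posterior probability that the constraint is satisfied. It assumes minimization of $f$, a single constraint written as $c(x) \le 0$, and independent GP posteriors for $f$ and $c$; these conventions and all function names are illustrative assumptions and are not taken from the paper, which may define CEI differently.

```python
import math


def norm_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mu, sigma, best):
    """Closed-form EI for minimization at a point with posterior N(mu, sigma^2).

    `best` is the incumbent (smallest objective value observed so far).
    """
    if sigma <= 0.0:
        # No posterior uncertainty: the improvement is deterministic.
        return max(best - mu, 0.0)
    z = (best - mu) / sigma
    return (best - mu) * norm_cdf(z) + sigma * norm_pdf(z)


def prob_feasible(mu_c, sigma_c):
    """Posterior probability that c(x) <= 0 when c(x) ~ N(mu_c, sigma_c^2)."""
    if sigma_c <= 0.0:
        return 1.0 if mu_c <= 0.0 else 0.0
    return norm_cdf(-mu_c / sigma_c)


def constrained_ei(mu_f, sigma_f, best_feasible, mu_c, sigma_c):
    """Assumed CEI form: EI of the objective weighted by feasibility probability."""
    return expected_improvement(mu_f, sigma_f, best_feasible) * prob_feasible(mu_c, sigma_c)
```

In this sketch, a candidate whose constraint posterior is centered on the boundary (`mu_c = 0`) has its EI halved, while a candidate that is almost surely infeasible scores close to zero regardless of its predicted objective value.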

On the convergence of noisy Bayesian Optimization with Expected Improvement

Jan 16, 2025

Abstract: Expected improvement (EI) is one of the most widely used acquisition functions in Bayesian optimization (BO). Despite its proven success in applications for decades, important open questions remain on the theoretical convergence behavior and rates of EI. In this paper, we contribute to the convergence theory of EI in three novel and critical areas. First, we consider objective functions that are under the Gaussian process (GP) prior assumption, whereas existing works mostly focus on functions in the reproducing kernel Hilbert space (RKHS). Second, we establish the first asymptotic error bound and its corresponding rate for GP-EI with noisy observations under the GP prior assumption. Third, by investigating the exploration and exploitation of the non-convex EI function, we prove improved error bounds for both the noise-free and noisy cases. The improved noiseless bound is extended to the RKHS assumption as well.

On Improved Regret Bounds In Bayesian Optimization with Gaussian Noise

Dec 25, 2024

Abstract: Bayesian optimization (BO) with Gaussian process (GP) surrogate models is a powerful black-box optimization method. Acquisition functions are a critical part of a BO algorithm as they determine how new samples are selected. Some of the most widely used acquisition functions include upper confidence bound (UCB) and Thompson sampling (TS). The convergence analysis of BO algorithms has focused on the cumulative regret under both the Bayesian and frequentist settings for the objective. In this paper, we establish new pointwise bounds on the prediction error of GP under the frequentist setting with Gaussian noise. Consequently, we prove improved cumulative regret bounds for both GP-UCB and GP-TS. Of note, the new prediction error bound under Gaussian noise can be applied to general BO algorithms and convergence analysis, e.g., the asymptotic convergence of expected improvement (EI) with noise.
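To illustrate how an acquisition function selects the next sample, here is a minimal sketch of a UCB selection step over a finite candidate set. It assumes maximization and the usual score $\mu(x) + \sqrt{\beta}\,\sigma(x)$; the function names, the candidate tuple layout, and the fixed value of `beta` are hypothetical choices for illustration, not the schedule or notation analyzed in the paper.

```python
import math


def ucb_score(mu, sigma, beta):
    """Upper confidence bound: posterior mean plus a scaled posterior std."""
    return mu + math.sqrt(beta) * sigma


def select_next(candidates, beta):
    """Return the candidate point with the largest UCB score.

    `candidates` is a list of (x, mu, sigma) tuples, where mu and sigma are
    the GP posterior mean and standard deviation at x (assumed precomputed).
    """
    best = max(candidates, key=lambda c: ucb_score(c[1], c[2], beta))
    return best[0]
```

With `beta = 0` the rule is purely exploitative and picks the highest posterior mean; larger values of `beta` favor points where the posterior is more uncertain.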
