Get our free extension to see links to code for papers anywhere online!Free add-on: code for papers everywhere!Free add-on: See code for papers anywhere!

Corbin Rosset

Deep Canonically Correlated LSTMs

Jan 16, 2018Figures and Tables:

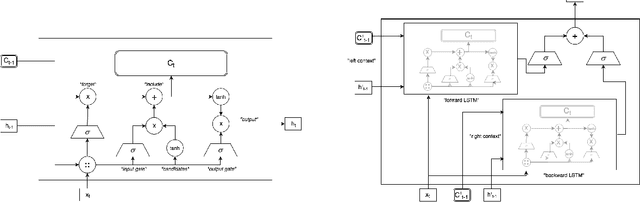

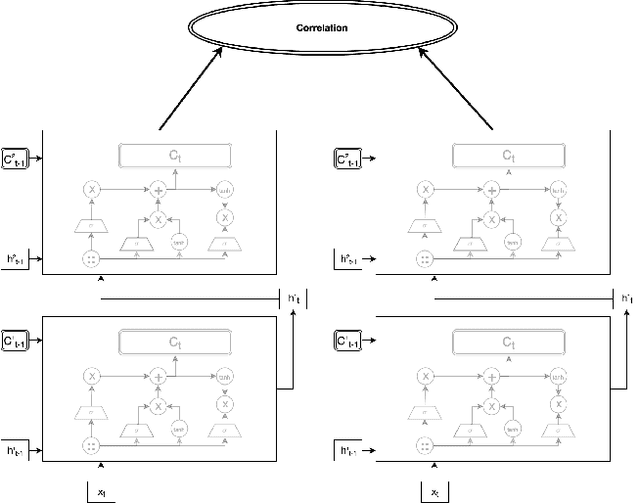

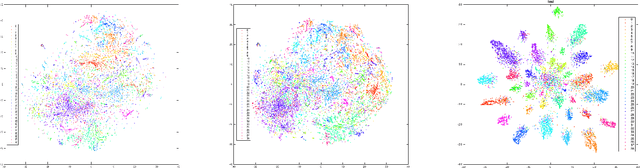

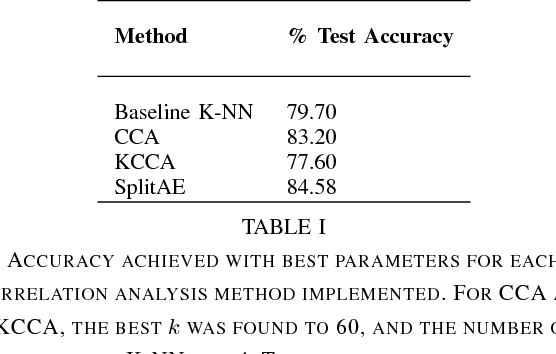

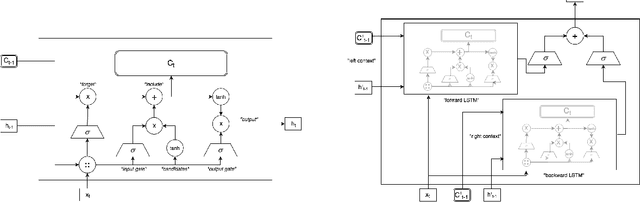

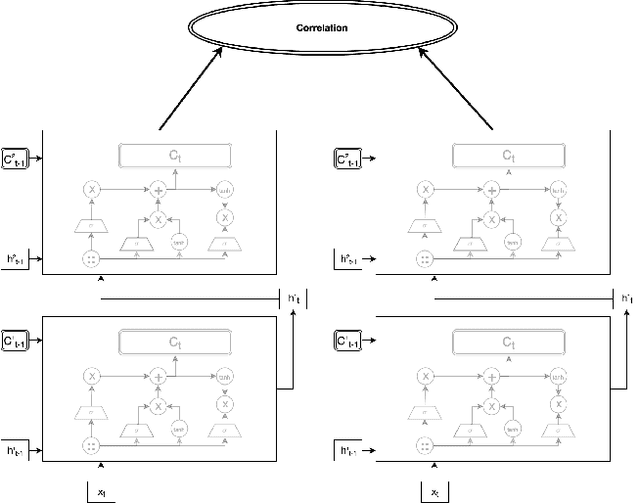

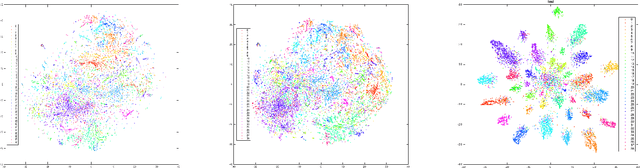

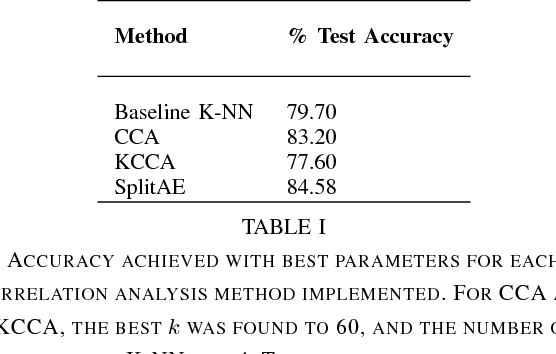

Abstract:We examine Deep Canonically Correlated LSTMs as a way to learn nonlinear transformations of variable length sequences and embed them into a correlated, fixed dimensional space. We use LSTMs to transform multi-view time-series data non-linearly while learning temporal relationships within the data. We then perform correlation analysis on the outputs of these neural networks to find a correlated subspace through which we get our final representation via projection. This work follows from previous work done on Deep Canonical Correlation (DCCA), in which deep feed-forward neural networks were used to learn nonlinear transformations of data while maximizing correlation.

* 8 pages, 3 figures, accepted as the undergraduate honors thesis for

Neil Mallinar by The Johns Hopkins University

Via

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge