Connor Brooks

Visualization of Intended Assistance for Acceptance of Shared Control

Aug 25, 2020

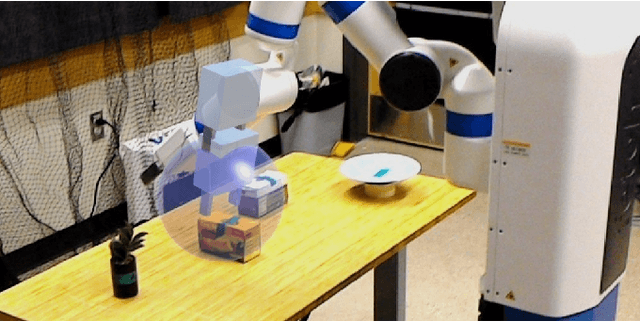

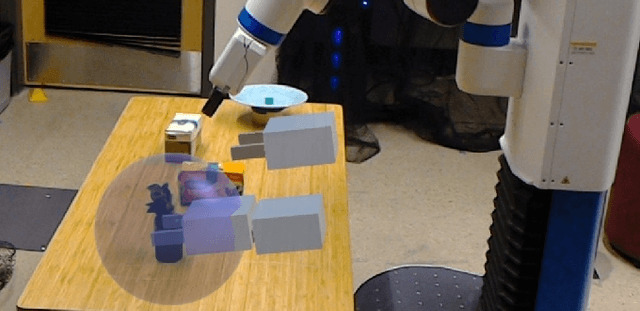

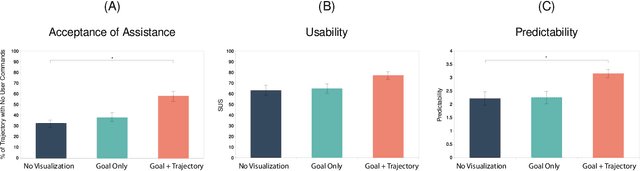

Abstract: In shared control, advances in autonomous robotics are applied to help empower a human user in operating a robotic system. While these systems have been shown to improve efficiency and operation success, users do not always accept the new control paradigm produced by working with an assistive controller. This mismatch between performance and acceptance can prevent users from taking advantage of the benefits of shared control systems for robotic operation. To address this mismatch, we develop multiple types of visualizations for improving both the legibility and perceived predictability of assistive controllers, then conduct a user study to evaluate the impact that these visualizations have on user acceptance of shared control systems. Our results demonstrate that shared control visualizations must be designed carefully to be effective, with users requiring visualizations that improve both legibility and predictability of the assistive controller in order to voluntarily relinquish control.
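
Shared control is commonly implemented by arbitrating between the user's command and the assistive controller's command. The abstract does not say which arbitration scheme the study used, so the sketch below assumes the widely used linear-blending form, u = (1 − α)·u_h + α·u_r, where α is the assistance level. The function name `blend_commands` and its parameters are hypothetical and chosen only for illustration.

```python
import numpy as np


def blend_commands(u_human, u_robot, alpha):
    """Linearly blend a human command with an assistive controller's command.

    Assumption: generic linear-blending arbitration, not necessarily the
    scheme evaluated in the paper. alpha = 0 leaves the human in full
    control; alpha = 1 hands control entirely to the assistive controller.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    u_human = np.asarray(u_human, dtype=float)
    u_robot = np.asarray(u_robot, dtype=float)
    return (1.0 - alpha) * u_human + alpha * u_robot
```

For example, `blend_commands([1.0, 0.0], [0.0, 1.0], 0.25)` returns `[0.75, 0.25]`: the user's command still dominates, but the output is pulled a quarter of the way toward the controller's. In this framing, "relinquishing control" corresponds to a user accepting a larger α.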

Building Second-Order Mental Models for Human-Robot Interaction

Sep 14, 2019

Abstract: The mental models that humans form of other agents (encapsulating human beliefs about agent goals, intentions, capabilities, and more) create an underlying basis for interaction. These mental models have the potential to affect both the human's decision making during the interaction and the human's subjective assessment of the interaction. In this paper, we surveyed existing methods for modeling how humans view robots, then identified a potential method for improving these estimates through inferring a human's model of a robot agent directly from their actions. Then, we conducted an online study to collect data in a grid-world environment involving humans moving an avatar past a virtual agent. Through our analysis, we demonstrated that participants' action choices leaked information about their mental models of a virtual agent. We conclude by discussing the implications of these findings and the potential for such a method to improve human-robot interactions.
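
Inferring a person's mental model of an agent from their actions can be illustrated with Bayesian inverse planning. The sketch below is not the paper's analysis; it assumes that each hypothesized mental model of the virtual agent (the names `agent_yields` and `agent_blocks`, the state `near_agent`, and all Q-values are hypothetical) induces Q-values over the participant's moves, and that participants choose moves Boltzmann-rationally with inverse temperature `beta`. It returns a posterior over the hypotheses given observed moves.

```python
import math


# Assumption: the participant picks actions Boltzmann-rationally with respect
# to the Q-values implied by whichever mental model they hold.
def action_likelihood(q_values, action, beta):
    """Probability of `action` under a softmax over Q-values (inverse temperature beta)."""
    best = max(q_values.values())  # subtract the max for numerical stability
    weights = {a: math.exp(beta * (q - best)) for a, q in q_values.items()}
    return weights[action] / sum(weights.values())


def infer_mental_model(observations, q_tables, prior, beta=1.0):
    """Posterior over hypothesized mental models given observed (state, action) pairs.

    q_tables maps model name -> state -> action -> Q-value (hypothetical values).
    prior maps model name -> prior probability (each must be > 0).
    """
    log_post = {}
    for model, p in prior.items():
        log_p = math.log(p)
        for state, action in observations:
            log_p += math.log(action_likelihood(q_tables[model][state], action, beta))
        log_post[model] = log_p
    # Normalize in log space to avoid underflow on long trajectories.
    top = max(log_post.values())
    unnorm = {m: math.exp(lp - top) for m, lp in log_post.items()}
    total = sum(unnorm.values())
    return {m: w / total for m, w in unnorm.items()}


# Hypothetical example: does the participant believe the agent will yield?
q_tables = {
    "agent_yields": {"near_agent": {"straight": 1.0, "detour": 0.0}},
    "agent_blocks": {"near_agent": {"straight": 0.0, "detour": 1.0}},
}
prior = {"agent_yields": 0.5, "agent_blocks": 0.5}
observed = [("near_agent", "detour"), ("near_agent", "detour")]

posterior = infer_mental_model(observed, q_tables, prior, beta=1.0)
print(posterior)  # agent_blocks ≈ 0.881, agent_yields ≈ 0.119
```

Two detours shift belief toward the "agent blocks" hypothesis by a likelihood ratio of e² under these made-up values, which is the sense in which action choices can "leak" information about a mental model.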
