Chun-Shu Wei

SSVEP-DAN: A Data Alignment Network for SSVEP-based Brain Computer Interfaces

Nov 21, 2023

Abstract: Steady-state visual-evoked potential (SSVEP)-based brain-computer interfaces (BCIs) offer a non-invasive means of communication through high-speed speller systems. However, their efficiency heavily relies on individual training data obtained during time-consuming calibration sessions. To address the challenge of data insufficiency in SSVEP-based BCIs, we present SSVEP-DAN, the first dedicated neural network model designed for aligning SSVEP data across different domains, which can encompass various sessions, subjects, or devices. Our experimental results across multiple cross-domain scenarios demonstrate SSVEP-DAN's capability to transform existing source SSVEP data into supplementary calibration data, significantly enhancing SSVEP decoding accuracy in scenarios with limited calibration data. We envision SSVEP-DAN as a catalyst for practical SSVEP-based BCI applications with minimal calibration. The source code for this work is available at: https://github.com/CECNL/SSVEP-DAN.

Enhancing Low-Density EEG-Based Brain-Computer Interfaces with Similarity-Keeping Knowledge Distillation

Dec 06, 2022

Abstract: Electroencephalography (EEG) has been one of the common neuromonitoring modalities for real-world brain-computer interfaces (BCIs) because of its non-invasiveness, low cost, and high temporal resolution. Recently, lightweight and portable EEG wearable devices based on low-density montages have increased the convenience and usability of BCI applications. However, loss of EEG decoding performance is often inevitable due to the reduced number of electrodes and scalp coverage of a low-density EEG montage. To address this issue, we introduce knowledge distillation (KD), a learning mechanism developed for transferring knowledge/information between neural network models, to enhance the performance of low-density EEG decoding. Our framework includes a newly proposed similarity-keeping (SK) teacher-student KD scheme that encourages a low-density EEG student model to acquire the same inter-sample similarity as a teacher model pre-trained on high-density EEG data. The experimental results validate that our SK-KD framework consistently improves motor-imagery EEG decoding accuracy as the number of electrodes in the input EEG data decreases. For both common low-density headphone-like and headband-like montages, our method outperforms state-of-the-art KD methods across various EEG decoding model architectures. As the first KD scheme developed for enhancing EEG decoding, we foresee that the proposed SK-KD framework will facilitate the practicality of low-density EEG-based BCI in real-world applications.
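
The idea of matching inter-sample similarity between a teacher and a student can be illustrated with a small NumPy sketch. It follows the generic similarity-preserving distillation formulation (row-normalized Gram matrices over a batch) and is not necessarily the SK-KD loss used in the paper; the function names, feature shapes, and random data are assumptions made for illustration.

```python
import numpy as np

def similarity_matrix(features):
    """Row-normalized batch similarity (Gram) matrix.

    features: array of shape (batch, ...); each sample is flattened first.
    """
    flat = features.reshape(features.shape[0], -1)
    gram = flat @ flat.T  # (batch, batch) inter-sample similarity
    norms = np.linalg.norm(gram, axis=1, keepdims=True)
    return gram / np.maximum(norms, 1e-12)

def similarity_loss(teacher_feats, student_feats):
    """Mean squared difference between teacher and student similarity matrices."""
    batch = teacher_feats.shape[0]
    diff = similarity_matrix(teacher_feats) - similarity_matrix(student_feats)
    return float(np.sum(diff ** 2) / batch ** 2)

# Hypothetical features: the teacher sees a high-density montage, the student a
# low-density one, so their feature sizes differ while the batch size matches.
rng = np.random.default_rng(0)
teacher_feats = rng.standard_normal((4, 16))
student_feats = rng.standard_normal((4, 5))

loss_same = similarity_loss(teacher_feats, teacher_feats)
loss_scaled = similarity_loss(teacher_feats, 2.0 * teacher_feats)
loss_diff = similarity_loss(teacher_feats, student_feats)
```

Because only the batch-by-batch similarity matrices are compared, the teacher and student features may have different dimensionality, which is what makes this kind of loss convenient when the two models receive different numbers of electrodes.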

CLEEGN: A Convolutional Neural Network for Plug-and-Play Automatic EEG Reconstruction

Oct 12, 2022

Abstract: Human electroencephalography (EEG) is a brain monitoring modality that senses cortical neuroelectrophysiological activity at high temporal resolution. One of the greatest challenges posed in applications of EEG is the unstable signal quality susceptible to inevitable artifacts during recordings. To date, most existing techniques for EEG artifact removal and reconstruction are applicable only to offline analysis, or require individualized training data to facilitate online reconstruction. We have proposed CLEEGN, a novel convolutional neural network for plug-and-play automatic EEG reconstruction. CLEEGN is based on a subject-independent pre-trained model using existing data and can operate on a new user without any further calibration. The performance of CLEEGN was validated using multiple evaluations including waveform observation, reconstruction error assessment, and decoding accuracy on well-studied labeled datasets. The results of simulated online validation suggest that, even without any calibration, CLEEGN can largely preserve inherent brain activity and outperforms leading online/offline artifact removal methods in the decoding accuracy of reconstructed EEG data. In addition, visualization of model parameters and latent features exhibits the model behavior and reveals explainable insights related to existing knowledge of neuroscience. We foresee pervasive applications of CLEEGN in prospective works of online plug-and-play EEG decoding and analysis.

MAtt: A Manifold Attention Network for EEG Decoding

Oct 05, 2022

Abstract: Recognition of electroencephalographic (EEG) signals strongly affects the efficiency of non-invasive brain-computer interfaces (BCIs). While recent advances in deep-learning (DL)-based EEG decoders offer improved performance, the development of geometric learning (GL) has attracted much attention for offering exceptional robustness in decoding noisy EEG data. However, there is a lack of studies on the merged use of deep neural networks (DNNs) and geometric learning for EEG decoding. We herein propose a manifold attention network (MAtt), a novel geometric deep learning (GDL)-based model, featuring a manifold attention mechanism that characterizes spatiotemporal representations of EEG data fully on a Riemannian symmetric positive definite (SPD) manifold. The evaluation of the proposed MAtt on both time-synchronous and -asynchronous EEG datasets suggests its superiority over other leading DL methods for general EEG decoding. Furthermore, analysis of model interpretation reveals the capability of MAtt in capturing informative EEG features and handling the non-stationarity of brain dynamics.
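
To make the SPD-manifold representation concrete, the sketch below turns EEG segments into covariance matrices, which are symmetric positive definite, and compares them with the Log-Euclidean distance, one common metric on that manifold. This is an illustration only, not the MAtt architecture: the function names, the small ridge term, and the random "EEG" data are assumptions.

```python
import numpy as np

def segment_covariance(segment, ridge=1e-6):
    """Channel covariance of one EEG segment of shape (channels, samples).

    A small ridge on the diagonal keeps the matrix strictly positive definite.
    """
    cov = np.cov(segment)
    return cov + ridge * np.eye(cov.shape[0])

def spd_log(mat):
    """Matrix logarithm of an SPD matrix via its eigendecomposition."""
    eigvals, eigvecs = np.linalg.eigh(mat)
    return (eigvecs * np.log(eigvals)) @ eigvecs.T

def log_euclidean_distance(a, b):
    """Frobenius distance between the matrix logarithms of two SPD matrices."""
    return float(np.linalg.norm(spd_log(a) - spd_log(b), ord="fro"))

# Two hypothetical 8-channel segments of 200 samples each.
rng = np.random.default_rng(0)
cov_a = segment_covariance(rng.standard_normal((8, 200)))
cov_b = segment_covariance(3.0 * rng.standard_normal((8, 200)))

dist_ab = log_euclidean_distance(cov_a, cov_b)
dist_ba = log_euclidean_distance(cov_b, cov_a)
dist_aa = log_euclidean_distance(cov_a, cov_a)
```

A distance of this kind could be used to score how similar two segments are when building attention weights, although how MAtt actually does this is not specified in the abstract.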

ExBrainable: An Open-Source GUI for CNN-based EEG Decoding and Model Interpretation

Jan 10, 2022

Abstract: We have developed a graphical user interface (GUI), ExBrainable, dedicated to convolutional neural network (CNN) model training and visualization in electroencephalography (EEG) decoding. Available functions include model training, evaluation, and parameter visualization in terms of temporal and spatial representations. We demonstrate these functions using a well-studied public dataset of motor-imagery EEG and compare the results with existing knowledge of neuroscience. The primary objective of ExBrainable is to provide a fast, simplified, and user-friendly solution for EEG decoding that lets investigators across disciplines leverage cutting-edge methods in brain/neuroscience research.

Boosting Template-based SSVEP Decoding by Cross-domain Transfer Learning

Feb 10, 2021

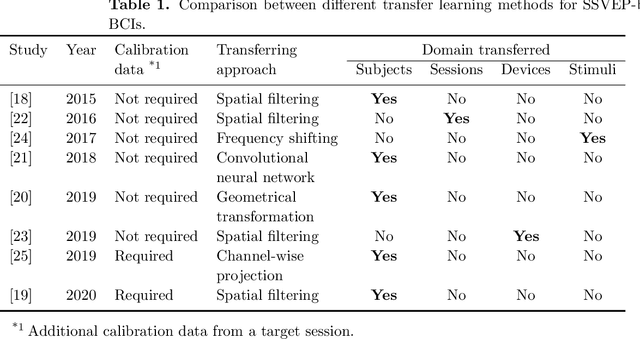

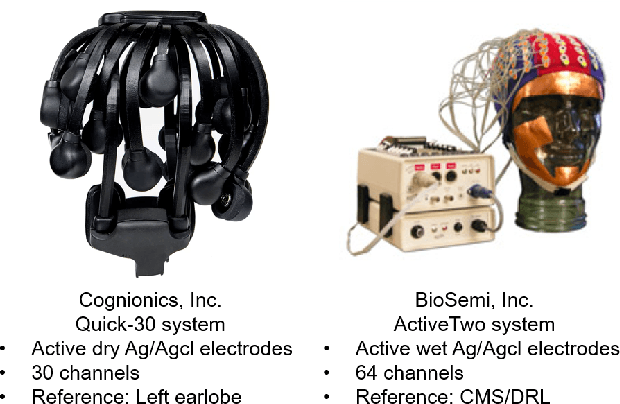

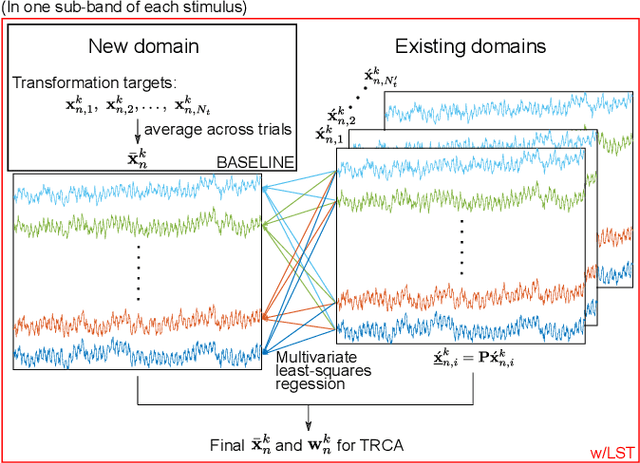

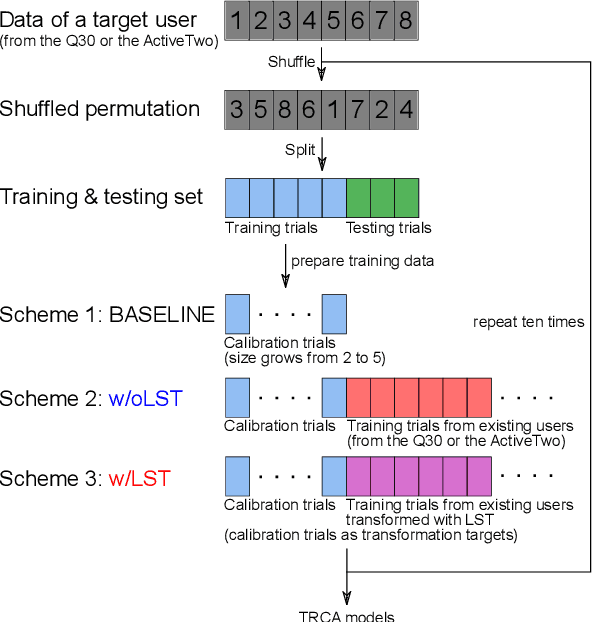

Abstract: Objective: This study aims to establish a generalized transfer-learning framework for boosting the performance of steady-state visual evoked potential (SSVEP)-based brain-computer interfaces (BCIs) by leveraging cross-domain data transfer. Approach: We enhanced the state-of-the-art template-based SSVEP decoding by incorporating least-squares transformation (LST)-based transfer learning to leverage calibration data across multiple domains (sessions, subjects, and EEG montages). Main results: Study results verified the efficacy of LST in obviating the variability of SSVEPs when transferring existing data across domains. Furthermore, the LST-based method achieved significantly higher SSVEP-decoding accuracy than the standard task-related component analysis (TRCA)-based method and the non-LST naive transfer-learning method. Significance: This study demonstrated the capability of the LST-based transfer learning to leverage existing data across subjects and/or devices with an in-depth investigation of its rationale and behavior in various circumstances. The proposed framework significantly improved the SSVEP decoding accuracy over the standard TRCA approach when calibration data are limited. Its performance in calibration reduction could facilitate plug-and-play SSVEP-based BCIs and further practical applications.
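
The least-squares transformation named in the abstract can be illustrated with a small NumPy sketch. This is not the authors' implementation: the function name `lst_fit`, the array shapes, and the synthetic data are assumptions made for illustration. The idea is to find a matrix P that maps a source-domain trial onto a target-domain template in the least-squares sense, so that the transformed source trial can serve as additional calibration data.

```python
import numpy as np

def lst_fit(source, target):
    """Return P minimizing ||target - P @ source||_F.

    source: (n_source_channels, n_samples) trial from an existing domain.
    target: (n_target_channels, n_samples) template from the new domain.
    """
    # lstsq solves source.T @ P.T ~= target.T in the least-squares sense.
    p_transposed, *_ = np.linalg.lstsq(source.T, target.T, rcond=None)
    return p_transposed.T

# Synthetic example: an 8-channel source trial and a 6-channel target template
# that is an exact linear mixture of the source (hypothetical data).
rng = np.random.default_rng(0)
source_trial = rng.standard_normal((8, 250))
true_mixing = rng.standard_normal((6, 8))
target_template = true_mixing @ source_trial

projection = lst_fit(source_trial, target_template)
aligned_trial = projection @ source_trial  # source data expressed in the target domain
```

Because P has shape (target channels, source channels), the same fit also covers the case where the source and target recordings use different EEG montages.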
