Chuanbo Feng

Reducing Semantic Ambiguity In Domain Adaptive Semantic Segmentation Via Probabilistic Prototypical Pixel Contrast

Sep 27, 2024

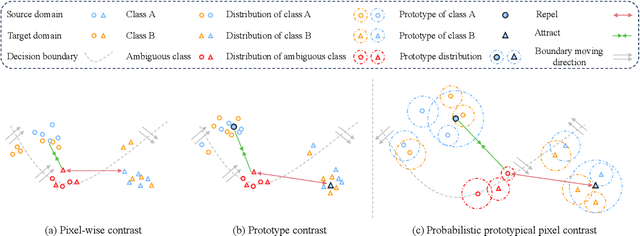

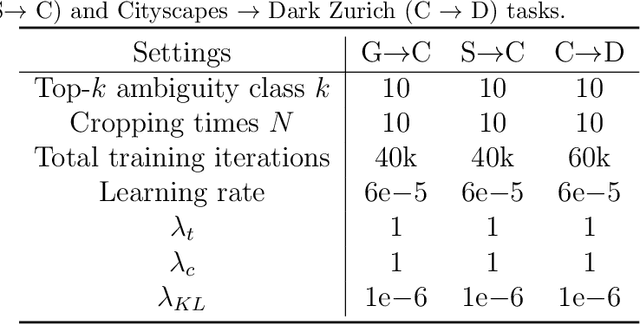

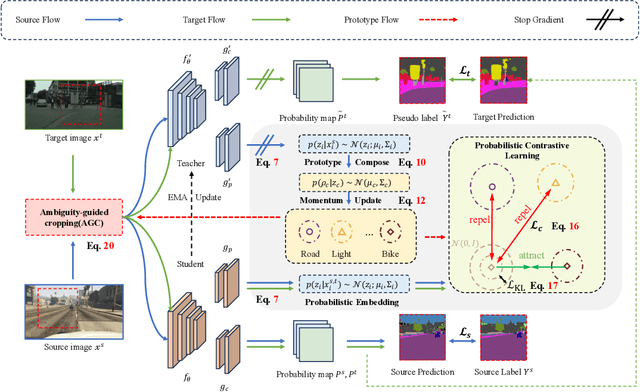

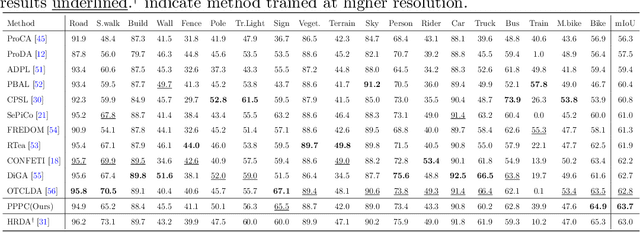

Abstract: Domain adaptation aims to reduce the model degradation on the target domain caused by the domain shift between the source and target domains. Although combining contrastive learning with the self-training paradigm has achieved encouraging performance, such methods rely on deterministic embeddings and therefore struggle in ambiguous scenarios caused by scale, illumination, or overlap. To address these issues, we propose probabilistic prototypical pixel contrast (PPPC), a universal adaptation framework that models each pixel embedding as a probability distribution, specifically a multivariate Gaussian, to fully exploit the uncertainty within the embeddings and thereby improve the representation quality of the model. In addition, we derive prototypes from posterior probability estimation, which helps to push the decision boundary away from ambiguous points. Moreover, we employ an efficient method to compute similarity between distributions that eliminates the need for sampling and reparameterization, significantly reducing computational overhead. Further, we dynamically select ambiguous crops at the image level to enlarge the number of boundary points involved in contrastive learning, which benefits the establishment of precise distributions for each category. Extensive experiments demonstrate that PPPC not only addresses ambiguity at the pixel level, yielding discriminative representations, but also achieves significant improvements in both synthetic-to-real and day-to-night adaptation tasks. It surpasses the previous state-of-the-art (SOTA) by +5.2% mIoU in the most challenging daytime-to-nighttime adaptation scenario and exhibits stronger generalization on other unseen datasets. The code and models are available at https://github.com/DarlingInTheSV/Probabilistic-Prototypical-Pixel-Contrast.
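To illustrate how a similarity between two Gaussian embeddings can be computed without sampling or reparameterization, the sketch below uses the closed-form log expected likelihood (often called the mutual likelihood score) for diagonal Gaussians. This is an assumption made for illustration: the abstract does not state which similarity PPPC uses, and the function name, the diagonal-covariance restriction, and the toy "road" and "sky" prototypes are all hypothetical.

```python
import math


def mutual_likelihood_score(mu_a, var_a, mu_b, var_b):
    """Closed-form log of the integral of N(x; mu_a, var_a) * N(x; mu_b, var_b) dx.

    Assumes diagonal covariances, given as per-dimension variances.
    No samples are drawn: the integral equals N(mu_a; mu_b, var_a + var_b).
    """
    if not (len(mu_a) == len(var_a) == len(mu_b) == len(var_b)):
        raise ValueError("all inputs must have the same length")
    total = 0.0
    for m_a, v_a, m_b, v_b in zip(mu_a, var_a, mu_b, var_b):
        v_sum = v_a + v_b  # variances add under the product integral
        total += (m_a - m_b) ** 2 / v_sum + math.log(v_sum)
    return -0.5 * total - 0.5 * len(mu_a) * math.log(2.0 * math.pi)


# Hypothetical 2-D pixel embedding and two class prototypes (toy values).
pixel_mu, pixel_var = [0.9, 0.1], [0.05, 0.05]
road_mu, road_var = [1.0, 0.0], [0.02, 0.02]
sky_mu, sky_var = [0.0, 1.0], [0.02, 0.02]

s_road = mutual_likelihood_score(pixel_mu, pixel_var, road_mu, road_var)
s_sky = mutual_likelihood_score(pixel_mu, pixel_var, sky_mu, sky_var)
print(s_road > s_sky)  # the pixel is scored as closer to the "road" prototype
```

In a contrastive loss, such scores would take the place of the dot-product logits between a pixel and each class prototype. Because the squared mean difference is divided by the summed variances, a large mean mismatch costs less when either distribution is uncertain, which is one way uncertainty can soften the penalty on ambiguous pixels.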
