Christopher Adnel

FALCON: Feature-Label Constrained Graph Net Collapse for Memory Efficient GNNs

Dec 27, 2023

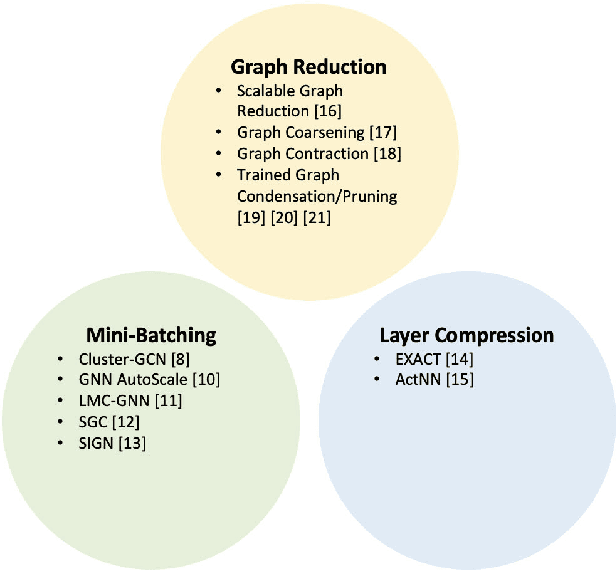

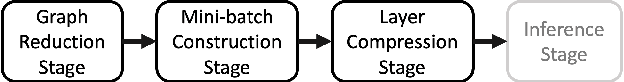

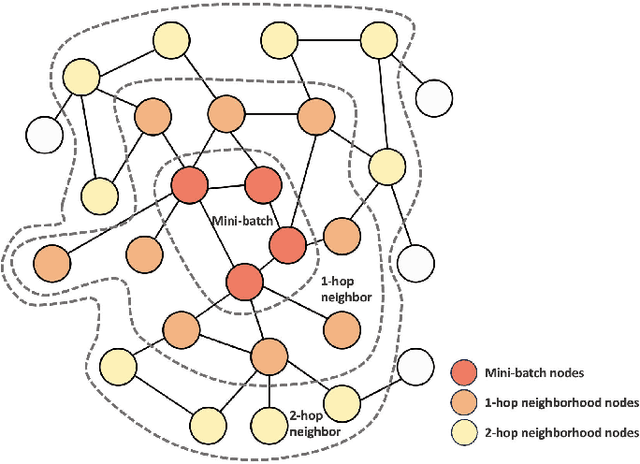

Abstract: Graph Neural Networks (GNNs) have ushered in a new era of machine learning on interconnected datasets. While traditional neural networks can only be trained on independent samples, GNNs allow inter-sample interactions to be included in the training process. This gain, however, incurs additional memory cost, rendering most GNNs unscalable for real-world applications involving vast and complicated networks with tens of millions of nodes (e.g., social circles, web graphs, and brain graphs). Even storing such a graph in main memory can be difficult, let alone training the GNN model within the significantly smaller GPU memory. While much of the recent literature has focused on either mini-batching GNN methods or quantization, graph reduction methods remain scarce. Furthermore, present graph reduction approaches have several drawbacks. First, most graph reduction methods focus only on the inference stage (e.g., condensation and distillation) and require full-graph GNN training, which does not reduce the training memory footprint. Second, many methods focus solely on the graph's structural aspect, ignoring the initial population feature-label distribution, resulting in a skewed post-reduction label distribution. Here, we propose a Feature-Label COnstrained graph Net collapse, FALCON, to address these limitations. Our three core contributions are (i) designing FALCON, a topology-aware graph reduction technique that preserves the feature-label distribution; (ii) combining FALCON with other memory reduction methods (i.e., mini-batched GNN and quantization) for further memory reduction; and (iii) extensive benchmarking and ablation studies against SOTA methods to evaluate FALCON's memory reduction. Our extensive results show that FALCON can significantly collapse various public datasets while achieving equal prediction quality across GNN models. Code: https://github.com/basiralab/FALCON
