Christophe Lohr

IMT Atlantique - INFO, Lab-STICC

Human Activity Recognition (HAR) in Smart Homes

Dec 20, 2021

Abstract:Generally, Human Activity Recognition (HAR) consists of monitoring and analyzing the behavior of one or more persons in order to deduce their activity. In a smart home context, HAR consists of monitoring the daily activities of the residents. Thanks to this monitoring, a smart home can offer home assistance services to improve the quality of life, autonomy and health of its residents, especially elderly and dependent people.

Using Language Model to Bootstrap Human Activity Recognition Ambient Sensors Based in Smart Homes

Nov 23, 2021

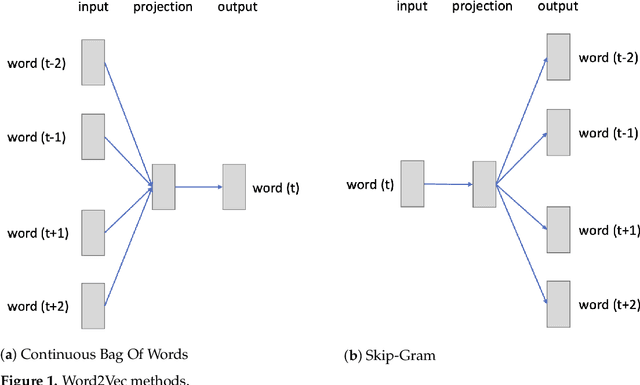

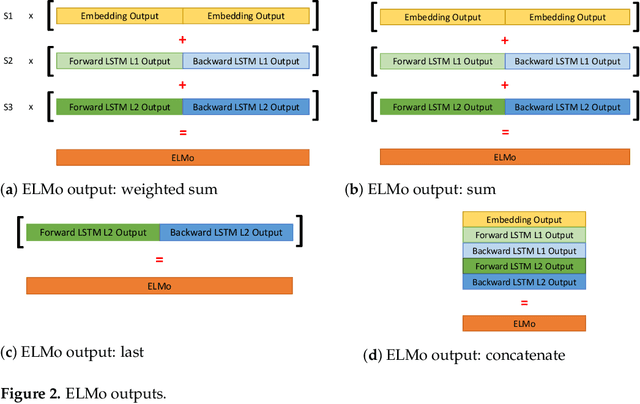

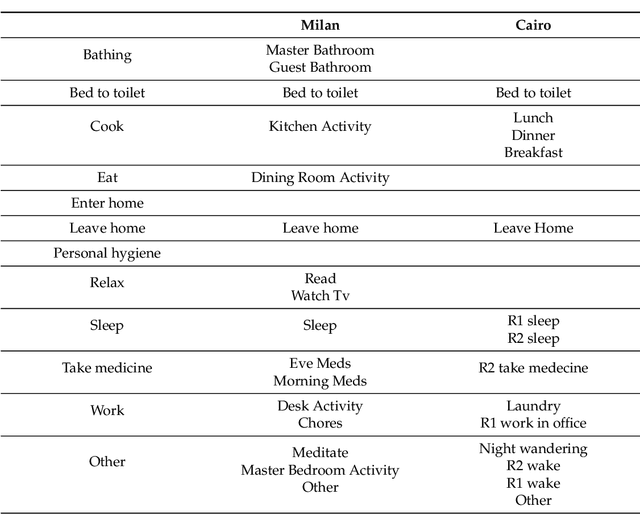

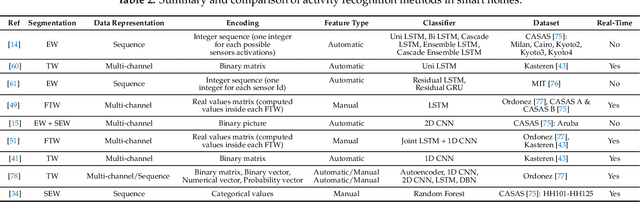

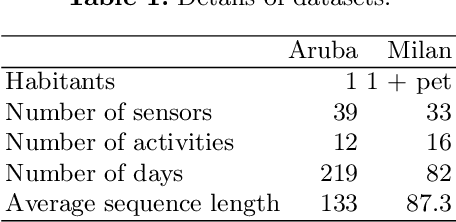

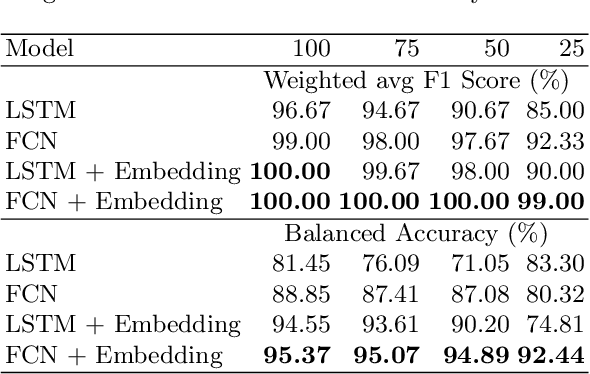

Abstract:Long Short-Term Memory (LSTM)-based structures have demonstrated their efficiency for recognizing activities of daily living in smart homes by capturing the order of sensor activations and their temporal dependencies. Nevertheless, they still fail to deal with the semantics and the context of the sensors. Sensors are more than isolated IDs with ordered activation values: they also carry meaning. Indeed, their nature and type of activation can reflect various activities. Their logs are correlated with each other, creating a global context. We propose to use and compare two Natural Language Processing embedding methods to enhance LSTM-based structures in activity-sequence classification tasks: Word2Vec, a static semantic embedding, and ELMo, a contextualized embedding. Results on real smart home datasets indicate that this approach provides useful information, such as a sensor organization map, and reduces confusion between daily activity classes. It also performs better on datasets with competing activities of other residents or pets. Our tests also show that the embeddings can be pretrained on datasets other than the target one, enabling transfer learning. We thus demonstrate that taking into account the context of the sensors and their semantics increases classification performance and enables transfer learning.

A Survey of Human Activity Recognition in Smart Homes Based on IoT Sensors Algorithms: Taxonomies, Challenges, and Opportunities with Deep Learning

Oct 18, 2021

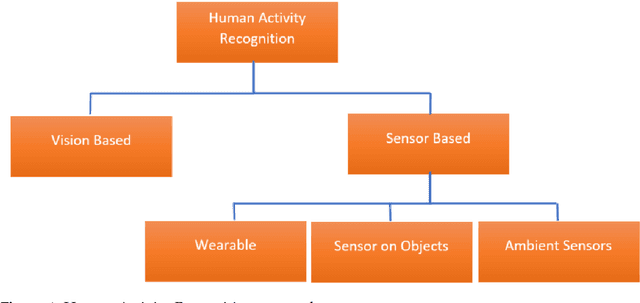

Abstract:Recent advances in Internet of Things (IoT) technologies and the reduction in the cost of sensors have encouraged the development of smart environments, such as smart homes. Smart homes can offer home assistance services to improve the quality of life, autonomy and health of their residents, especially for the elderly and dependent. To provide such services, a smart home must be able to understand the daily activities of its residents. Techniques for recognizing human activity in smart homes are advancing daily, but new challenges are emerging just as quickly. In this paper, we present recent algorithms, works, challenges and a taxonomy of the field of human activity recognition in smart homes through ambient sensors. Moreover, since activity recognition in smart homes is a young field, we raise specific problems and identify missing and needed contributions. We also propose directions, research opportunities and solutions to accelerate advances in this field.

Fully Convolutional Network Bootstrapped by Word Encoding and Embedding for Activity Recognition in Smart Homes

Dec 01, 2020

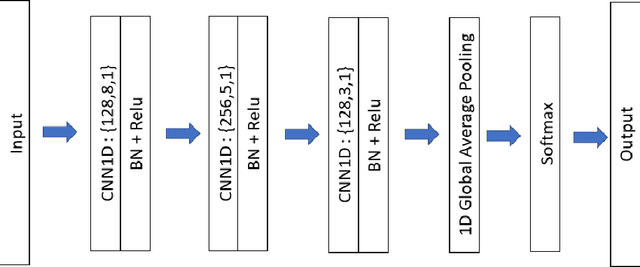

Abstract:Activity recognition in smart homes is essential when we wish to propose automatic services for the inhabitants. However, it poses challenges in terms of the variability of the environment, of the sensorimotor system, and of user habits. Therefore, end-to-end systems fail to automatically extract key features without extensive pre-processing. We propose to tackle feature extraction for activity recognition in smart homes by merging methods from the Natural Language Processing (NLP) and the Time Series Classification (TSC) domains. We evaluate the performance of our method on two datasets from the Center for Advanced Studies in Adaptive Systems (CASAS). Moreover, we analyze the contribution of NLP Bag-of-Words encoding with Embedding, as well as the ability of the Fully Convolutional Network (FCN) algorithm to automatically extract features and classify. The method we propose shows good performance in offline activity classification. Our analysis also shows that FCN is a suitable algorithm for smart home activity recognition and highlights the advantages of automatic feature extraction.
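As a rough illustration of the word-encoding idea mentioned in this abstract, the sketch below turns sensor events into word-like tokens and then into fixed-length integer sequences that an embedding layer could consume. It is not the authors' implementation: the sensor IDs, the `tokenize`, `build_vocab` and `encode` helpers, the frequency-based index order and the zero-padding scheme are all assumptions made for the example.

```python
from collections import Counter

def tokenize(events):
    """Turn (sensor_id, value) pairs into word-like tokens such as 'M001_ON'."""
    return [f"{sensor}_{value}" for sensor, value in events]

def build_vocab(sequences):
    """Map each token to an integer index; index 0 is reserved for padding."""
    counts = Counter(tok for seq in sequences for tok in seq)
    # Most frequent tokens first, ties broken alphabetically for determinism.
    ordered = sorted(counts, key=lambda t: (-counts[t], t))
    return {tok: i + 1 for i, tok in enumerate(ordered)}

def encode(seq, vocab, length):
    """Convert tokens to indices, truncate to `length`, right-pad with 0.

    Assumption: unknown tokens are also mapped to 0 in this sketch.
    """
    ids = [vocab.get(tok, 0) for tok in seq][:length]
    return ids + [0] * (length - len(ids))

# Hypothetical sensor logs for two activity segments.
events_a = [("M001", "ON"), ("M001", "OFF"), ("D002", "OPEN")]
events_b = [("M001", "ON"), ("M003", "ON")]

sequences = [tokenize(events_a), tokenize(events_b)]
vocab = build_vocab(sequences)
encoded = [encode(seq, vocab, length=4) for seq in sequences]
print(encoded)  # [[1, 3, 2, 0], [1, 4, 0, 0]]
```

The resulting integer sequences all have the same length, which is what a downstream embedding layer and convolutional classifier would typically expect as input.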

How An Automated Gesture Imitation Game Can Improve Social Interactions With Teenagers With ASD

Jul 10, 2020

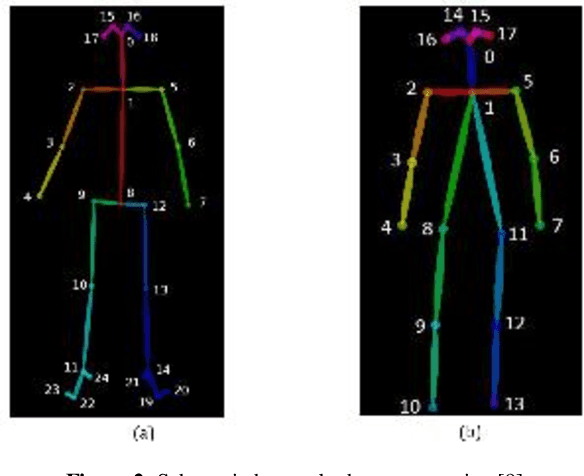

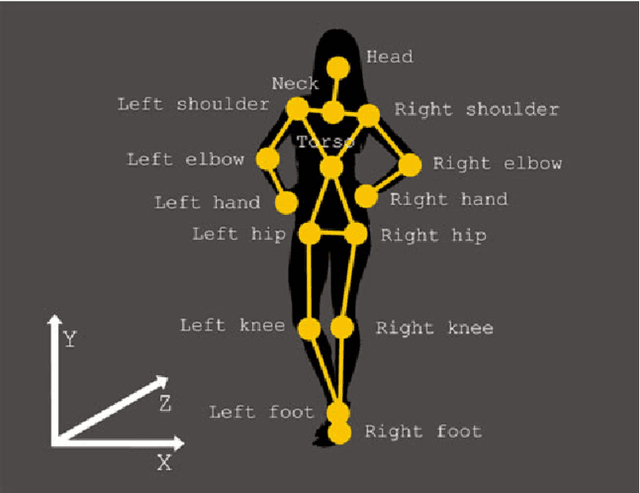

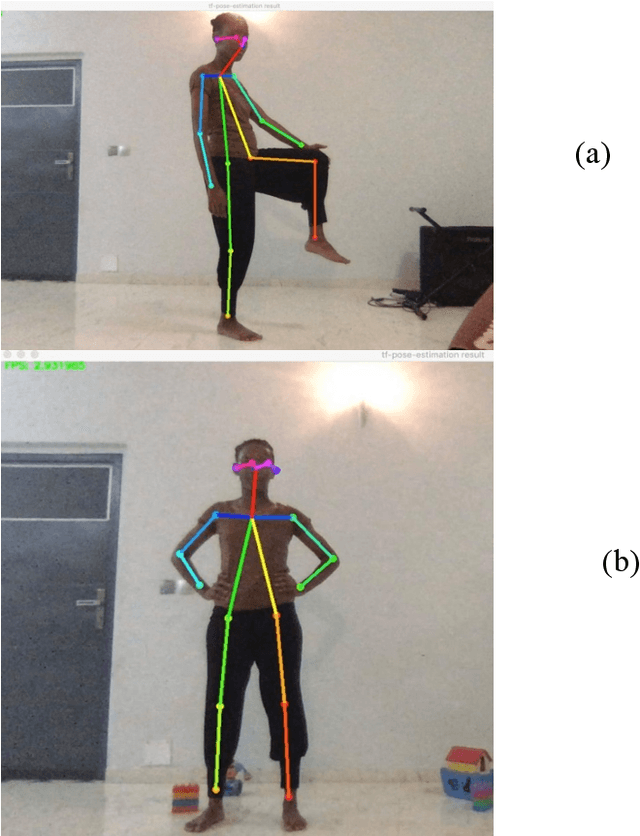

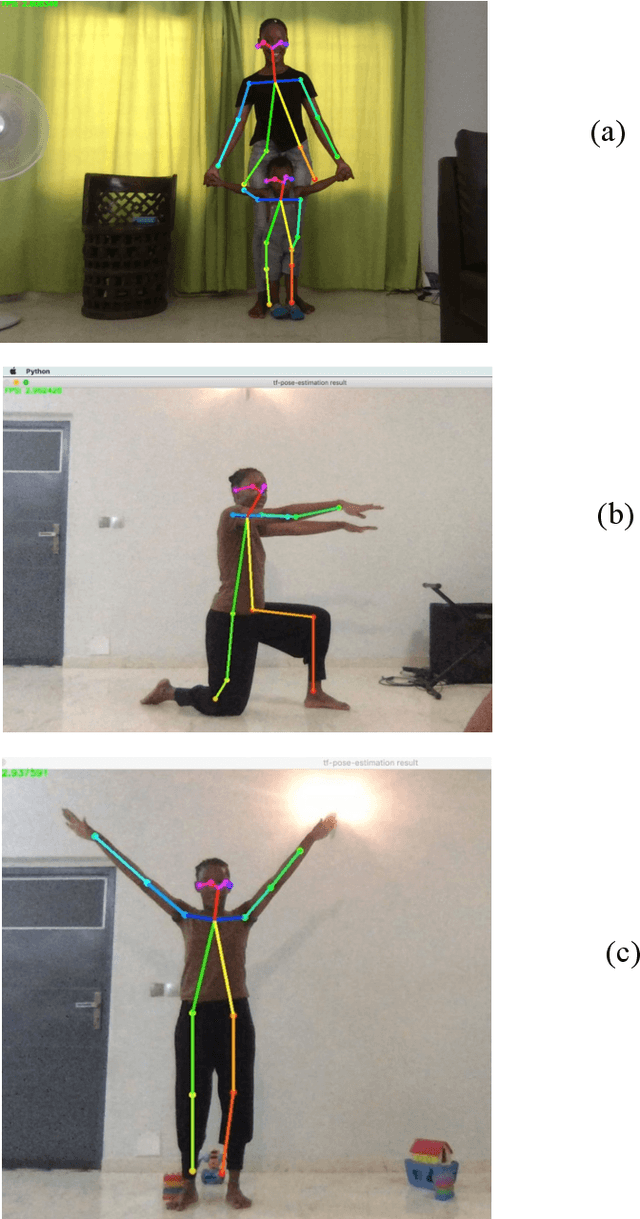

Abstract:With the aim of improving the communication and social abilities of people with ASD, we propose to extend the paradigm of robot-based imitation games to teenagers with ASD. In this paper, we present an interaction scenario adapted to teenagers with ASD, propose a computational architecture using the recent machine learning algorithm OpenPose for human pose detection, and present the results of our basic testing of the scenario with human caregivers. These results are preliminary due to the small number of sessions (1) and participants (4). They include a technical assessment of the performance of OpenPose, as well as a preliminary user study to confirm that our game scenario could elicit the expected response from subjects.

Building an Automated Gesture Imitation Game for Teenagers with ASD

Jul 09, 2020

Abstract:Autism spectrum disorder (ASD) is a neurodevelopmental condition that includes issues with communication and social interactions. People with ASD also often have restricted interests and repetitive behaviors. In this paper, we develop the first building blocks of an automated gesture imitation game that aims to improve social interactions with teenagers with ASD. The structure of the game is presented, as well as support tools and methods for skeleton detection and imitation learning. The game will later be implemented using an interactive robot.

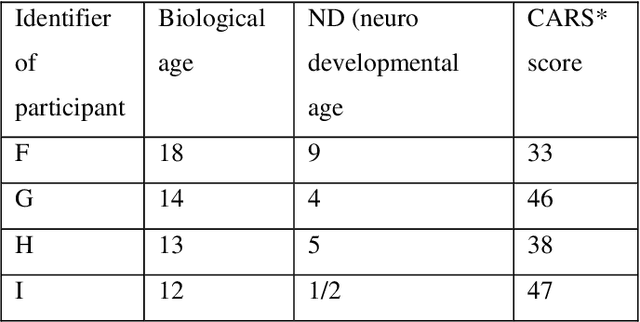

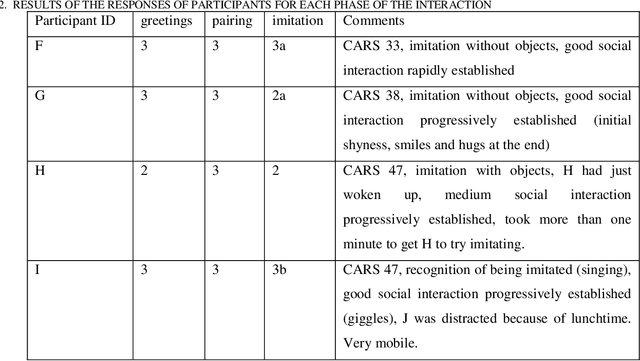

An implementation of an imitation game with ASD children to learn nursery rhymes

Apr 10, 2020

Abstract:Previous studies have suggested that being imitated by an adult is an effective intervention for children with autism and developmental delay. The purpose of this study is to investigate whether an imitation game with a robot can arouse children's interest and constitute an effective tool for clinical activities. In this paper, we describe the design of our nursery rhyme imitation game, its implementation based on RGB image pose recognition, and the preliminary tests we performed.
