Christele Morisseau

Theory and Implementation of Complex-Valued Neural Networks

Feb 16, 2023

Abstract: This work explains in detail the theory behind Complex-Valued Neural Networks (CVNNs), including Wirtinger calculus, complex backpropagation, and basic modules such as complex layers, complex activation functions, and complex weight initialization. We also show the impact of not correctly adapting the weight initialization to the complex domain. This work places a strong focus on the implementation of such modules in Python using the cvnn toolbox. We also perform simulations on real-valued data, casting it to the complex domain by means of the Hilbert transform, and verify the potential interest of CVNNs even for non-complex data.
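The Hilbert-transform casting mentioned in this abstract can be sketched as follows. This is a minimal NumPy sketch that assumes the standard analytic-signal construction z = x + jH{x}, computed with the same FFT-based recipe that `scipy.signal.hilbert` uses. It is not taken from the cvnn toolbox, and the function name `analytic_signal` is hypothetical.

```python
import numpy as np


def analytic_signal(x: np.ndarray) -> np.ndarray:
    """Cast real data to the complex domain as x + j*H{x} (hypothetical helper).

    Works along the last axis: keeps DC (and Nyquist for even lengths),
    doubles positive frequencies, and zeroes negative frequencies.
    """
    x = np.asarray(x, dtype=float)
    n = x.shape[-1]
    spectrum = np.fft.fft(x, axis=-1)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0      # DC and Nyquist bins are kept once
        h[1:n // 2] = 2.0           # positive frequencies are doubled
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    # Real part of the result equals x; imaginary part is the Hilbert transform.
    return np.fft.ifft(spectrum * h, axis=-1)


if __name__ == "__main__":
    t = np.arange(64) / 64
    x = np.cos(2 * np.pi * 5 * t)   # real-valued input signal
    z = analytic_signal(x)          # complex-valued input for a CVNN
    print(z.dtype, np.max(np.abs(z.imag - np.sin(2 * np.pi * 5 * t))))
```

Because the real part of the output reproduces the original data exactly, no information is lost by the cast; the imaginary part adds the quadrature component (here, a sine for a cosine input).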

Complex-Valued vs. Real-Valued Neural Networks for Classification Perspectives: An Example on Non-Circular Data

Sep 17, 2020

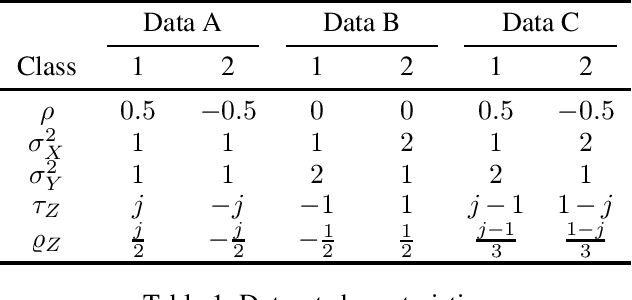

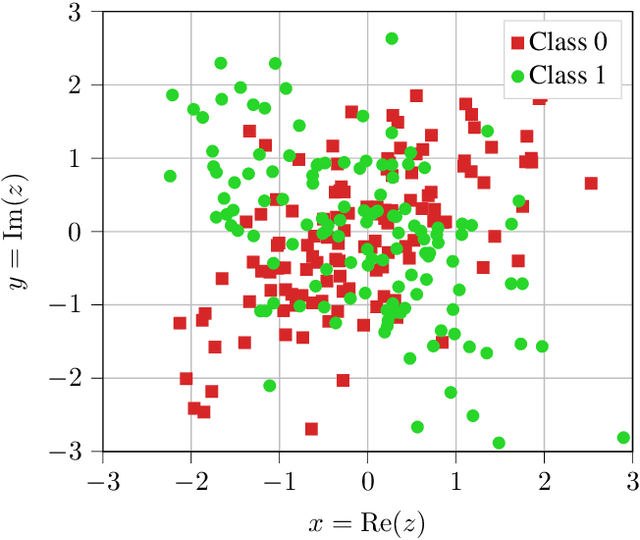

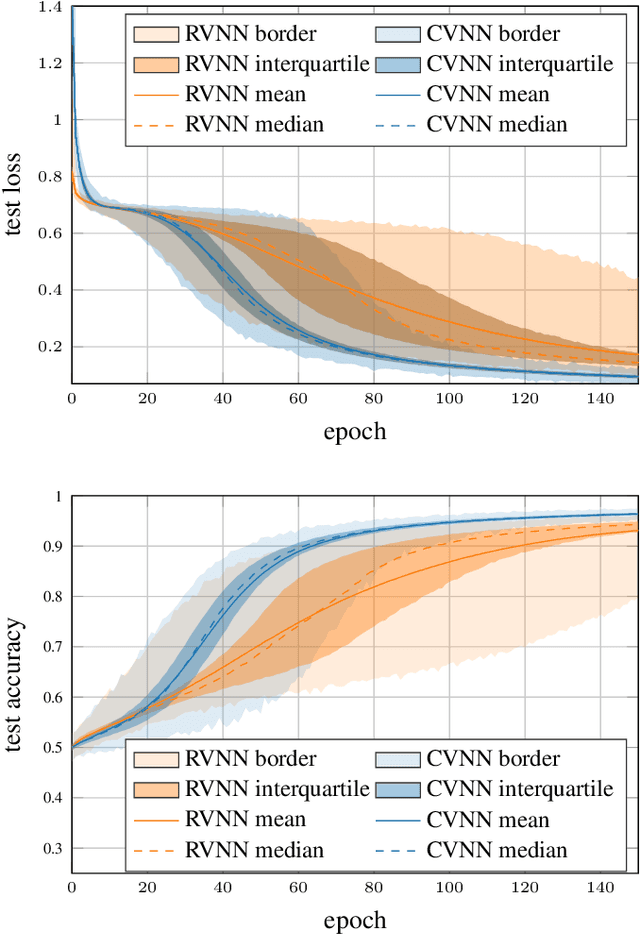

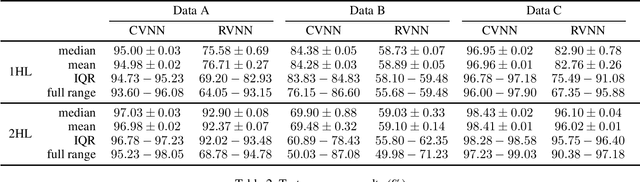

Abstract: The contributions of this paper are twofold. First, we show the potential interest of Complex-Valued Neural Networks (CVNNs) for classification tasks on complex-valued datasets. To support this claim, we investigate an example of complex-valued data in which the real and imaginary parts are statistically dependent through the property of non-circularity. In this context, the performance of fully connected feed-forward CVNNs is compared against an equivalent real-valued model. The results show that CVNNs perform better across a wide variety of architectures and data structures: CVNN accuracy has a statistically higher mean and median and a lower variance than that of the Real-Valued Neural Network (RVNN). Furthermore, when no regularization technique is used, CVNNs exhibit less overfitting. The second contribution is the release of a Python library (Barrachina 2019), using TensorFlow as back-end, that enables the implementation and training of CVNNs, in the hope of motivating further research in this area.
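To make non-circularity concrete: a zero-mean complex random variable z is (second-order) circular when its pseudo-covariance E[z²] vanishes, and correlating its real and imaginary parts makes E[z²] nonzero. The sketch below assumes a bivariate Gaussian for (Re z, Im z) with equal variances and correlation `rho`, an illustrative choice that is not necessarily the data model used in the paper. Under that assumption the circularity coefficient |E[z²]| / E[|z|²] equals |rho|. It also builds the two-column real input that an equivalent RVNN would receive. All function names are hypothetical and are not part of the cvnn library.

```python
import numpy as np


def sample_noncircular(n: int, rho: float, sigma: float = 1.0, seed: int = 0) -> np.ndarray:
    """Draw n complex samples whose real and imaginary parts have correlation rho (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    cov = sigma ** 2 * np.array([[1.0, rho], [rho, 1.0]])
    xy = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=n)
    return xy[:, 0] + 1j * xy[:, 1]


def circularity_coefficient(z: np.ndarray) -> float:
    """|E[z^2]| / E[|z|^2]: close to 0 for circular data, up to 1 for maximally non-circular data."""
    z = z - z.mean()
    covariance = np.mean(np.abs(z) ** 2)    # E[|z|^2], real-valued
    pseudo_covariance = np.mean(z ** 2)     # E[z^2], complex-valued
    return float(np.abs(pseudo_covariance) / covariance)


def to_real_features(z: np.ndarray) -> np.ndarray:
    """Split complex inputs into [Re, Im] columns, the input an equivalent RVNN would see."""
    return np.stack([z.real, z.imag], axis=-1)


if __name__ == "__main__":
    circular = sample_noncircular(100_000, rho=0.0)
    non_circular = sample_noncircular(100_000, rho=0.8)
    print(circularity_coefficient(circular), circularity_coefficient(non_circular))
    print(to_real_features(non_circular).shape)
```

The RVNN receives the same information as the CVNN, but as two independent real features; the CVNN instead operates on z directly, where the dependence between the parts is encoded in complex arithmetic.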
