Chris Lattner

Swift for TensorFlow: A portable, flexible platform for deep learning

Feb 26, 2021

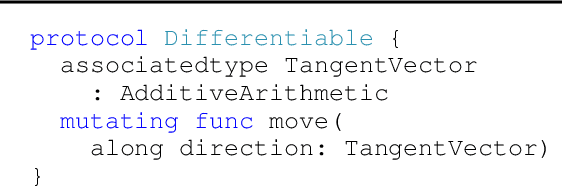

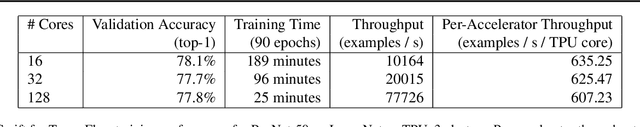

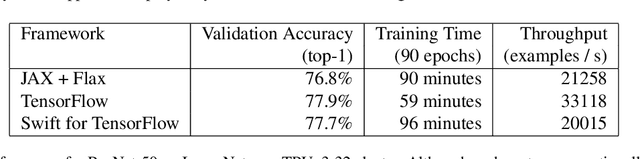

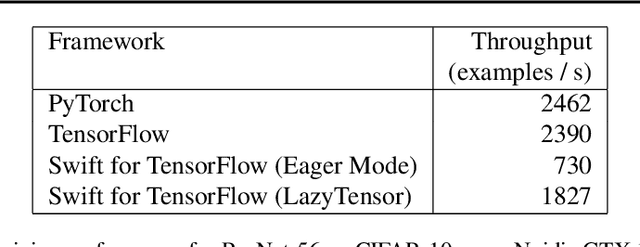

Abstract:Swift for TensorFlow is a deep learning platform that scales from mobile devices to clusters of hardware accelerators in data centers. It combines a language-integrated automatic differentiation system and multiple Tensor implementations within a modern ahead-of-time compiled language oriented around mutable value semantics. The resulting platform has been validated through use in over 30 deep learning models and has been employed across data center and mobile applications.

MLIR: A Compiler Infrastructure for the End of Moore's Law

Mar 01, 2020

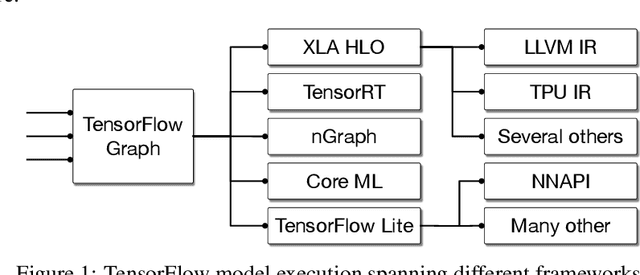

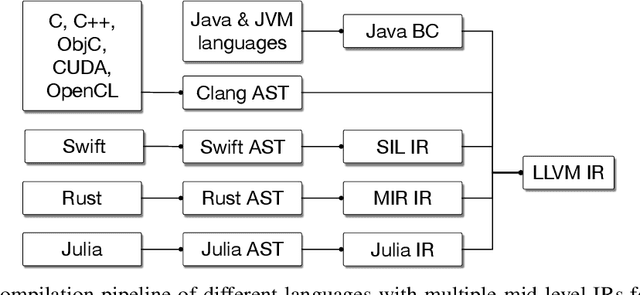

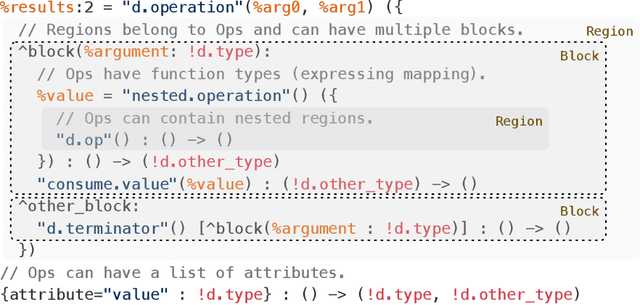

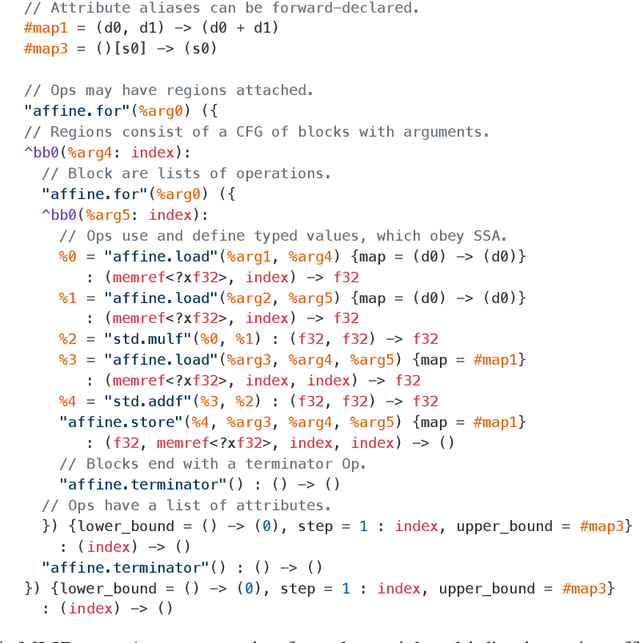

Abstract:This work presents MLIR, a novel approach to building reusable and extensible compiler infrastructure. MLIR aims to address software fragmentation, improve compilation for heterogeneous hardware, significantly reduce the cost of building domain specific compilers, and aid in connecting existing compilers together. MLIR facilitates the design and implementation of code generators, translators and optimizers at different levels of abstraction and also across application domains, hardware targets and execution environments. The contribution of this work includes (1) discussion of MLIR as a research artifact, built for extension and evolution, and identifying the challenges and opportunities posed by this novel design point in design, semantics, optimization specification, system, and engineering. (2) evaluation of MLIR as a generalized infrastructure that reduces the cost of building compilers-describing diverse use-cases to show research and educational opportunities for future programming languages, compilers, execution environments, and computer architecture. The paper also presents the rationale for MLIR, its original design principles, structures and semantics.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge