Chris Kitchen

Multimodal Approach for Assessing Neuromotor Coordination in Schizophrenia Using Convolutional Neural Networks

Oct 09, 2021

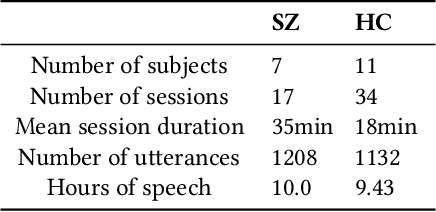

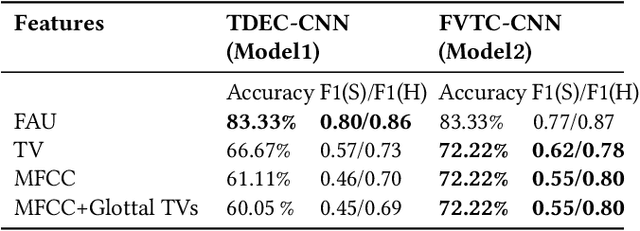

Abstract: This study investigates speech articulatory coordination in schizophrenia subjects exhibiting strong positive symptoms (e.g., hallucinations and delusions), using two distinct channel-delay correlation methods. We show that schizophrenic subjects who have strong positive symptoms and are markedly ill exhibit more complex articulatory coordination patterns in facial and speech gestures than those observed in healthy subjects. This distinction in speech coordination patterns is used to train a multimodal convolutional neural network (CNN) that uses video and audio data recorded during speech to distinguish schizophrenic patients with strong positive symptoms from healthy subjects. We also show that the vocal tract variables (TVs) which correspond to place of articulation and glottal source outperform the Mel-frequency cepstral coefficients (MFCCs) when fused with Facial Action Units (FAUs) in the proposed multimodal network. For the clinical dataset we collected, our best-performing multimodal network improves the mean F1 score for detecting schizophrenia by around 18% with respect to the full vocal tract coordination (FVTC) baseline method, implemented by fusing FAUs and MFCCs.

Inverted Vocal Tract Variables and Facial Action Units to Quantify Neuromotor Coordination in Schizophrenia

Feb 14, 2021

Abstract: This study investigates speech articulatory coordination in schizophrenia subjects exhibiting strong positive symptoms (e.g., hallucinations and delusions), using a time-delay embedded correlation analysis. We show that schizophrenia subjects who have strong positive symptoms and are markedly ill exhibit more complex coordination patterns in facial and speech gestures than those observed in healthy subjects. This observation contrasts with what previous studies have shown in Major Depressive Disorder (MDD), where subjects with MDD show a simpler coordination pattern than healthy controls or subjects in remission. This difference is not surprising, given that MDD is necessarily accompanied by psychomotor slowing (i.e., negative symptoms), which affects speech, ideation and motility. With respect to speech, psychomotor slowing results in slowed speech with more and longer pauses than occur in speech from the same speaker in remission or from a healthy subject. Time-delay embedded correlation analysis has been used to quantify the differences in coordination patterns of speech articulation. The current study is based on 17 Facial Action Units (FAUs) extracted from video data and 6 Vocal Tract Variables (TVs) obtained from simultaneously recorded audio data. The TVs are extracted using a speech inversion system, based on articulatory phonology, that maps the acoustic signal to vocal tract variables. The high-level time-delay embedded correlation features computed from the TVs and FAUs are used to train a stacking ensemble classifier that fuses the audio and video modalities. The results show a promising distinction between healthy subjects and schizophrenia subjects (with strong positive symptoms) in terms of neuromotor coordination in speech.
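To make the idea of a time-delay embedded correlation analysis more concrete, the following is a minimal sketch, not the authors' implementation. It assumes the multichannel signal (for example, 6 TV channels) is a NumPy array of shape `(n_channels, n_samples)`, stacks delayed copies of each channel, computes the correlation matrix of the stacked signal, and returns its eigenvalues in descending order as one possible summary of coordination complexity. The function names, the delay settings, and the random toy data are all hypothetical; the abstract does not state the delay scales or the exact features used.

```python
import numpy as np


def delay_embed(signals, num_delays, delay_step):
    """Stack time-delayed copies of every channel (hypothetical helper).

    signals: array of shape (n_channels, n_samples)
    returns: array of shape (n_channels * num_delays, usable_samples)
    """
    n_channels, n_samples = signals.shape
    span = (num_delays - 1) * delay_step
    if span >= n_samples:
        raise ValueError("signal is too short for the requested delays")
    usable = n_samples - span
    rows = [
        signals[c, d * delay_step : d * delay_step + usable]
        for c in range(n_channels)
        for d in range(num_delays)
    ]
    return np.stack(rows)


def delay_correlation_eigenspectrum(signals, num_delays=15, delay_step=1):
    """Return the channel-delay correlation matrix and its eigenvalues.

    The eigenvalues are sorted from largest to smallest. The default
    delay settings are illustrative assumptions only.
    """
    embedded = delay_embed(signals, num_delays, delay_step)
    corr = np.corrcoef(embedded)              # rows are treated as variables
    eigvals = np.linalg.eigvalsh(corr)[::-1]  # eigvalsh returns ascending order
    return corr, eigvals


# Toy usage with random data standing in for 6 TV channels (assumption).
rng = np.random.default_rng(0)
toy_signals = rng.standard_normal((6, 500))
corr, eigvals = delay_correlation_eigenspectrum(
    toy_signals, num_delays=3, delay_step=2
)
print(corr.shape)
print(eigvals[:5])
```

In a sketch like this, a spectrum dominated by a few large eigenvalues would suggest tightly coupled (simpler) coordination, while a flatter spectrum would suggest more independent (more complex) movement across channels. How the eigenvalues or the correlation matrices are turned into classifier inputs is a separate design choice that this sketch does not cover.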
