Chiara Galdi

2D-Malafide: Adversarial Attacks Against Face Deepfake Detection Systems

Aug 26, 2024

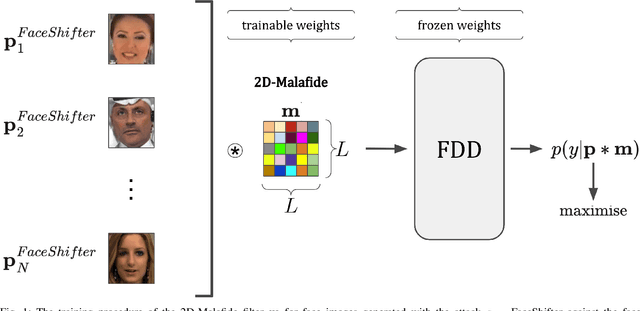

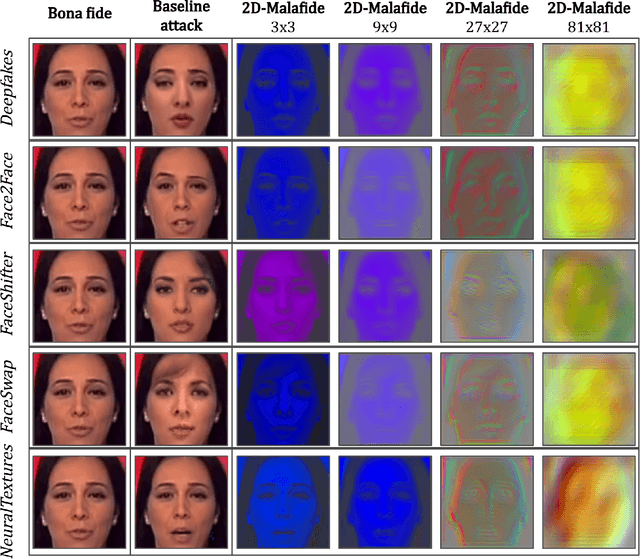

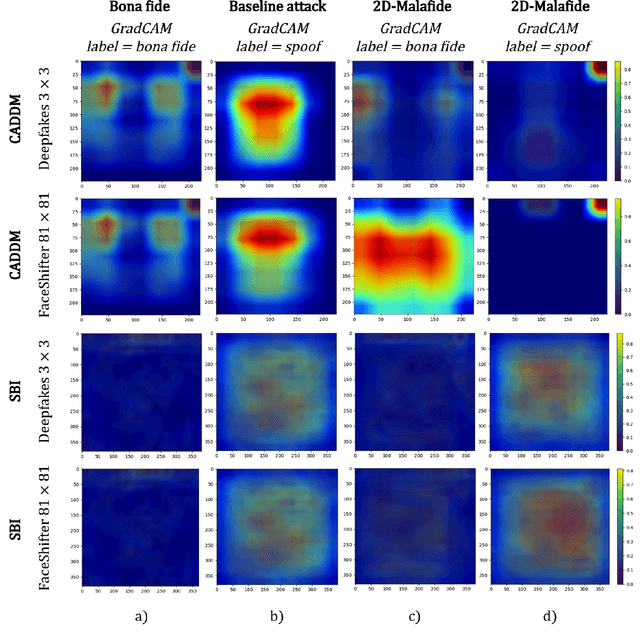

Abstract: We introduce 2D-Malafide, a novel and lightweight adversarial attack designed to deceive face deepfake detection systems. Building upon the concept of 1D convolutional perturbations explored in the speech domain, our method leverages 2D convolutional filters to craft perturbations which significantly degrade the performance of state-of-the-art face deepfake detectors. Unlike traditional additive noise approaches, 2D-Malafide optimises a small number of filter coefficients to generate robust adversarial perturbations which are transferable across different face images. Experiments, conducted using the FaceForensics++ dataset, demonstrate that 2D-Malafide substantially degrades detection performance in both white-box and black-box settings, with larger filter sizes having the greatest impact. Additionally, we report an explainability analysis using GradCAM which illustrates how 2D-Malafide misleads detection systems by altering the image areas used most for classification. Our findings highlight the vulnerability of current deepfake detection systems to convolutional adversarial attacks as well as the need for future work to enhance detection robustness through improved image fidelity constraints.
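To make the contrast with additive noise concrete, the following is a minimal sketch of how a single small 2D filter can perturb any image it is applied to. It is an illustration only, not the authors' implementation: the function names `identity_kernel` and `apply_filter`, the zero padding, and the use of one shared kernel for all colour channels are assumptions. The optimisation of the coefficients against a detector, which is the core of the attack, is not shown.

```python
import numpy as np

def identity_kernel(k):
    """Return a k x k kernel (odd k) that leaves an image unchanged."""
    kernel = np.zeros((k, k))
    kernel[k // 2, k // 2] = 1.0
    return kernel

def apply_filter(image, kernel):
    """Filter every channel of an H x W x C image with one k x k kernel.

    Zero padding keeps the output the same size as the input. The loop
    computes a cross-correlation (the kernel is not flipped), which is the
    operation deep learning frameworks usually call convolution.
    """
    k = kernel.shape[0]
    pad = k // 2
    height, width = image.shape[:2]
    padded = np.pad(image, ((pad, pad), (pad, pad), (0, 0)))
    out = np.zeros(image.shape, dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += kernel[dy, dx] * padded[dy:dy + height, dx:dx + width, :]
    return out

# Hypothetical usage: a 3 x 3 filter has only 9 coefficients, and the same
# 9 numbers perturb every image they are applied to.
rng = np.random.default_rng(0)
image = rng.random((8, 8, 3))
kernel = identity_kernel(3) + 0.05 * rng.standard_normal((3, 3))
perturbed = apply_filter(image, kernel)
```

Starting from the identity kernel means the unoptimised filter leaves the image untouched; in this sketch the small random offset merely stands in for coefficients that an attacker would learn.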

A Comparison of Differential Performance Metrics for the Evaluation of Automatic Speaker Verification Fairness

Apr 27, 2024

Abstract: When decisions are made and when personal data is treated by automated processes, there is an expectation of fairness -- that members of different demographic groups receive equitable treatment. This expectation applies to biometric systems such as automatic speaker verification (ASV). We present a comparison of three candidate fairness metrics and extend previous work performed for face recognition, by examining differential performance across a range of different ASV operating points. Results show that the Gini Aggregation Rate for Biometric Equitability (GARBE) is the only one which meets three functional fairness measure criteria. Furthermore, a comprehensive evaluation of the fairness and verification performance of five state-of-the-art ASV systems is also presented. Our findings reveal a nuanced trade-off between fairness and verification accuracy, underscoring the complex interplay between system design, demographic inclusiveness, and verification reliability.
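The abstract does not define GARBE, so the sketch below follows the form commonly given in the face-recognition fairness literature: a weighted sum of the Gini coefficients of the per-group false match rates and false non-match rates. The sample-corrected Gini formula, the weight `alpha` and its default of 0.5, and the function names are all assumptions here and may differ from the paper's exact setup.

```python
import numpy as np

def gini(rates):
    """Sample-corrected Gini coefficient of per-group error rates.

    Returns 0 when all groups have the same rate and approaches 1 as the
    errors concentrate in a single group.
    """
    x = np.asarray(rates, dtype=float)
    n = x.size
    mean = x.mean()
    if n < 2 or mean == 0:
        return 0.0  # no dispersion can be measured
    pairwise = np.abs(x[:, None] - x[None, :]).sum()
    return (n / (n - 1)) * pairwise / (2 * n * n * mean)

def garbe(fmr, fnmr, alpha=0.5):
    """Weighted combination of the Gini coefficients of FMR and FNMR.

    fmr and fnmr hold one rate per demographic group, measured at the same
    operating point; lower values indicate more equitable behaviour.
    """
    return alpha * gini(fmr) + (1 - alpha) * gini(fnmr)

# Hypothetical per-group rates at one operating point (not from the paper).
score = garbe(fmr=[0.010, 0.012, 0.020], fnmr=[0.030, 0.031, 0.029])
```

Evaluating `garbe` at several decision thresholds would give the kind of operating-point comparison the abstract describes.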

Fairness and Privacy in Voice Biometrics: A Study of Gender Influences Using wav2vec 2.0

Aug 27, 2023

Abstract: This study investigates the impact of gender information on utility, privacy, and fairness in voice biometric systems, guided by the General Data Protection Regulation (GDPR) mandates, which underscore the need for minimizing the processing and storage of private and sensitive data, and ensuring fairness in automated decision-making systems. We adopt an approach that involves the fine-tuning of the wav2vec 2.0 model for speaker verification tasks, evaluating potential gender-related privacy vulnerabilities in the process. Gender influences during the fine-tuning process were employed to enhance fairness and privacy in order to emphasise or obscure gender information within the speakers' embeddings. Results from VoxCeleb datasets indicate our adversarial model increases privacy against uninformed attacks, yet slightly diminishes speaker verification performance compared to the non-adversarial model. However, the model's efficacy reduces against informed attacks. Analysis of system performance was conducted to identify potential gender biases, thus highlighting the need for further research to understand and improve the delicate interplay between utility, privacy, and equity in voice biometric systems.
