Chase McDonald

Controllable Complementarity: Subjective Preferences in Human-AI Collaboration

Mar 07, 2025

Abstract: Research on human-AI collaboration often prioritizes objective performance. However, understanding human subjective preferences is essential to improving human-AI complementarity and human experiences. We investigate human preferences for controllability in a shared workspace task with AI partners using Behavior Shaping (BS), a reinforcement learning algorithm that allows humans explicit control over AI behavior. In one experiment, we validate the robustness of BS in producing effective AI policies relative to self-play policies, when controls are hidden. In another experiment, we enable human control, showing that participants perceive AI partners as more effective and enjoyable when they can directly dictate AI behavior. Our findings highlight the need to design AI that prioritizes both task performance and subjective human preferences. By aligning AI behavior with human preferences, we demonstrate how human-AI complementarity can extend beyond objective outcomes to include subjective preferences.

Credit Assignment: Challenges and Opportunities in Developing Human-like AI Agents

Jul 16, 2023

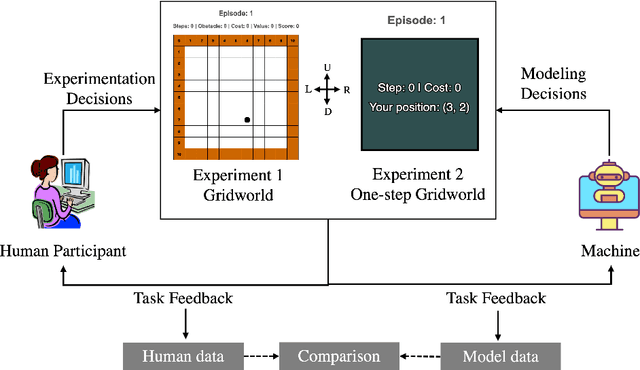

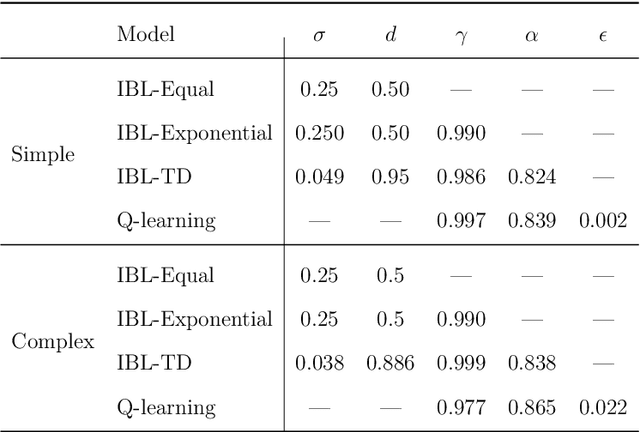

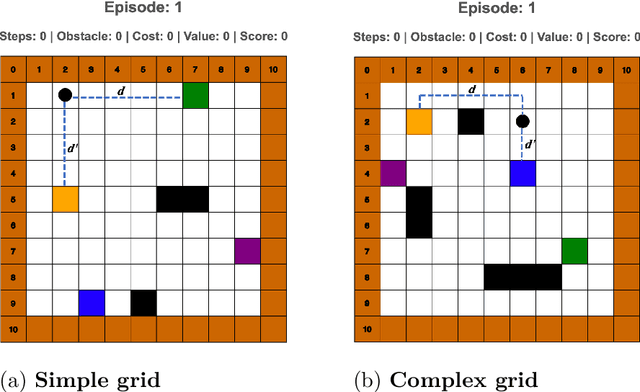

Abstract: Temporal credit assignment is crucial for learning and skill development in natural and artificial intelligence. While computational methods like the temporal difference (TD) approach in reinforcement learning have been proposed, it's unclear if they accurately represent how humans handle feedback delays. Cognitive models intend to represent the mental steps by which humans solve problems and perform a number of tasks, but limited research in cognitive science has addressed the credit assignment problem in humans and cognitive models. Our research uses a cognitive model based on a theory of decisions from experience, Instance-Based Learning Theory (IBLT), to test different credit assignment mechanisms in a goal-seeking navigation task with varying levels of decision complexity. Instance-Based Learning (IBL) models simulate the process of making sequential choices with different credit assignment mechanisms, including a new IBL-TD model that combines the IBL decision mechanism with the TD approach. We found that (1) an IBL model that assigns equal credit to all decisions matches human performance better than other models, including IBL-TD and Q-learning; (2) IBL-TD and Q-learning models initially underperform humans but eventually outperform them; (3) humans are influenced by decision complexity, while models are not. Our study provides insights into the challenges of capturing human behavior and the potential opportunities to use these models in future AI systems to support human activities.
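The contrast between equal credit assignment and the TD approach can be illustrated with a minimal sketch. This is not the paper's IBL or IBL-TD model: it is plain tabular TD(0) on a fixed four-step episode with a single delayed reward, and the function names, the reward sequence, and the values of `alpha` and `gamma` are all assumptions chosen for illustration.

```python
from typing import List


def equal_credit(num_steps: int, outcome: float) -> List[float]:
    """Give every decision in the episode the same credit: the final outcome."""
    return [outcome] * num_steps


def td_values(rewards: List[float], alpha: float = 0.5,
              gamma: float = 0.9, episodes: int = 1) -> List[float]:
    """Tabular TD(0) over a fixed chain of steps (illustrative only).

    values[t] estimates the discounted return from step t onward.
    The extra last entry is the terminal state, whose value stays 0.
    """
    values = [0.0] * (len(rewards) + 1)
    for _ in range(episodes):
        for t, reward in enumerate(rewards):
            td_error = reward + gamma * values[t + 1] - values[t]
            values[t] += alpha * td_error
    return values[:-1]


# Hypothetical episode: three unrewarded steps, then a reward at the goal.
rewards = [0.0, 0.0, 0.0, 1.0]

print(equal_credit(len(rewards), sum(rewards)))  # every step credited at once
print(td_values(rewards, episodes=1))            # only the last step has learned
print(td_values(rewards, episodes=200))          # credit has propagated backward
```

Under these assumptions, equal credit informs every decision after a single episode, whereas TD(0) needs repeated episodes before the delayed reward reaches the earliest steps. That is one plausible reading of why TD-based models could start out behind and catch up later, though the sketch makes no claim about the paper's actual results.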
