Charuka Herath

DSFL: A Dual-Server Byzantine-Resilient Federated Learning Framework via Group-Based Secure Aggregation

Sep 10, 2025

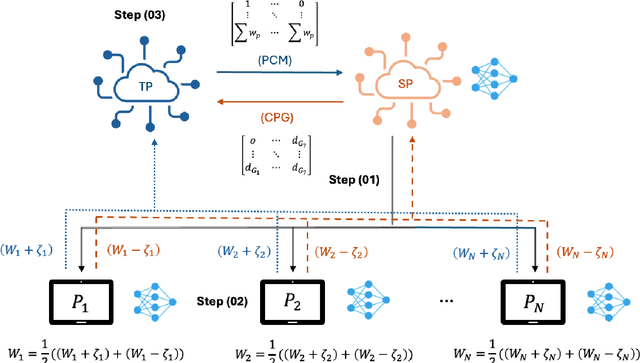

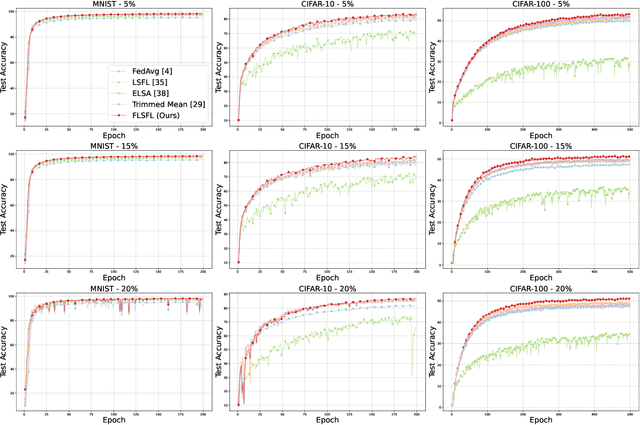

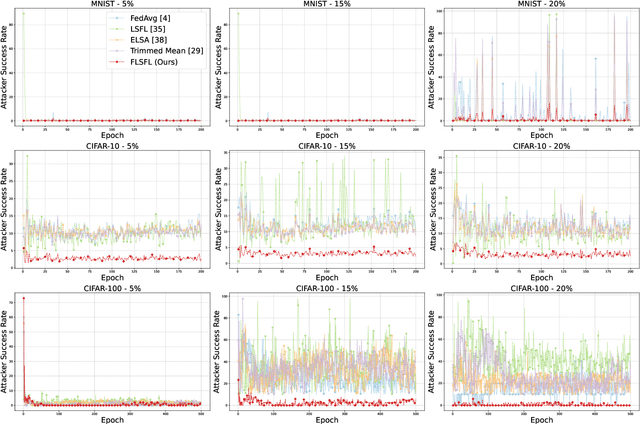

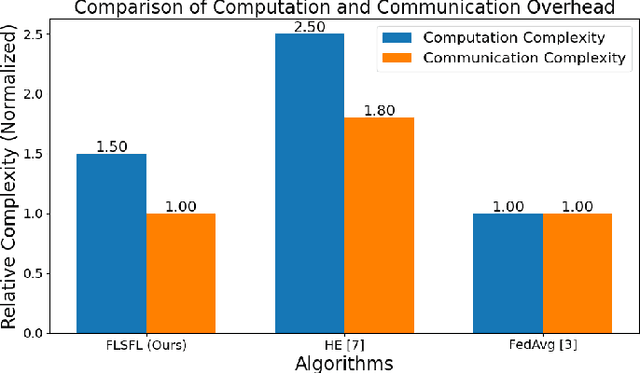

Abstract: Federated Learning (FL) enables decentralized model training without sharing raw data, offering strong privacy guarantees. However, existing FL protocols struggle to defend against Byzantine participants, maintain model utility under non-independent and identically distributed (non-IID) data, and remain lightweight for edge devices. Prior work either assumes trusted hardware, uses expensive cryptographic tools, or fails to address privacy and robustness simultaneously. We propose DSFL, a Dual-Server Byzantine-Resilient Federated Learning framework that addresses these limitations using a group-based secure aggregation approach. Unlike LSFL, which assumes non-colluding semi-honest servers, DSFL removes this assumption after exposing a key vulnerability in it: privacy leakage through client-server collusion. DSFL introduces three key innovations: (1) a dual-server secure aggregation protocol that protects updates without encryption or key exchange, (2) a group-wise credit-based filtering mechanism to isolate Byzantine clients based on deviation scores, and (3) a dynamic reward-penalty system for enforcing fair participation. DSFL is evaluated on MNIST, CIFAR-10, and CIFAR-100 under up to 30 percent Byzantine participants in both IID and non-IID settings. It consistently outperforms existing baselines, including LSFL, homomorphic encryption methods, and differential privacy approaches. For example, DSFL achieves 97.15 percent accuracy on CIFAR-10 and 68.60 percent on CIFAR-100, while FedAvg drops to 9.39 percent under similar threats. DSFL remains lightweight, requiring only 55.9 ms runtime and 1088 KB communication per round.
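The abstract does not spell out how deviation scores or credits are computed, so the following is only a minimal sketch of the general idea behind credit-based filtering within one group. The reference point (coordinate-wise median), the cutoff rule, and the names `deviation_scores`, `update_credits`, `aggregate`, `factor`, `reward`, and `penalty` are all assumptions made for illustration and are not taken from DSFL.

```python
import math
from statistics import median

def deviation_scores(updates):
    """Euclidean distance of each update from the group's coordinate-wise median.
    (Assumed scoring rule; the paper's actual deviation score may differ.)"""
    reference = [median(col) for col in zip(*updates)]
    return [math.dist(u, reference) for u in updates]

def update_credits(credits, scores, factor=2.0, reward=1, penalty=2):
    """Reward clients whose score is within `factor` times the median score,
    penalize the rest. All constants here are hypothetical."""
    cutoff = factor * median(scores)
    return [c + reward if s <= cutoff else c - penalty
            for c, s in zip(credits, scores)]

def aggregate(updates, credits):
    """Average only the updates of clients that still hold positive credit."""
    kept = [u for u, c in zip(updates, credits) if c > 0]
    if not kept:
        raise ValueError("no client has positive credit")
    return [sum(col) / len(kept) for col in zip(*kept)]

# One group of four clients; the last update is an obvious outlier.
updates = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [10.0, -10.0]]
credits = update_credits([1, 1, 1, 1], deviation_scores(updates))
global_update = aggregate(updates, credits)
```

In this toy run the outlier's credit drops below zero after a single round, so it is excluded from the average, while the three consistent clients gain credit.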

Enhancing Federated Learning Convergence with Dynamic Data Queue and Data Entropy-driven Participant Selection

Oct 23, 2024

Abstract: Federated Learning (FL) is a decentralized approach for collaborative model training on edge devices. This distributed method of model training offers advantages in privacy, security, regulatory compliance, and cost-efficiency. This research focuses on statistical complexity in FL, especially when the data stored locally across devices is not independent and identically distributed (non-IID). We have observed an accuracy reduction of approximately 10% to 30%, particularly in skewed scenarios where each edge device trains with only 1 class of data. This reduction is attributed to weight divergence, quantified using the Euclidean distance between device-level class distributions and the population distribution, resulting in a bias term (\(\delta_k\)). As a solution, we present a method to improve convergence in FL by creating a global subset of data on the server and dynamically distributing it across devices using Dynamic Data queue-driven Federated Learning (DDFL). Next, we leverage Data Entropy metrics to observe the process during each training round and enable reasonable device selection for aggregation. Furthermore, we provide a convergence analysis of our proposed DDFL to justify its viability in practical FL scenarios, aiming for better device selection, a global model that is not sub-optimal, and faster convergence. We observe that our approach results in a substantial accuracy boost of approximately 5% for the MNIST dataset, around 18% for CIFAR-10, and 20% for CIFAR-100 with a 10% global subset of data, outperforming the state-of-the-art (SOTA) aggregation algorithms.
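As a rough illustration of the two quantities mentioned above, the sketch below computes the Euclidean distance between a device's class distribution and a population distribution, plus the Shannon entropy of the device's labels. The abstract does not give the exact definition of \(\delta_k\) or of the entropy metric used for device selection, so the helper names (`class_distribution`, `distribution_distance`, `label_entropy`) and the uniform population are assumptions for the example only.

```python
import math
from collections import Counter

def class_distribution(labels, num_classes):
    """Fraction of samples per class on one device."""
    counts = Counter(labels)
    return [counts.get(c, 0) / len(labels) for c in range(num_classes)]

def distribution_distance(device_dist, population_dist):
    """Euclidean distance between a device's and the population's class distribution."""
    return math.dist(device_dist, population_dist)

def label_entropy(dist):
    """Shannon entropy (in bits) of a class distribution; zero-probability classes are skipped."""
    return -sum(p * math.log2(p) for p in dist if p > 0)

num_classes = 10
population = [1 / num_classes] * num_classes          # assumed uniform population

skewed = class_distribution([3] * 100, num_classes)   # device holding a single class
balanced = class_distribution(list(range(10)) * 10, num_classes)

skewed_distance = distribution_distance(skewed, population)
balanced_distance = distribution_distance(balanced, population)
skewed_entropy = label_entropy(skewed)
balanced_entropy = label_entropy(balanced)
```

The single-class device is far from the population distribution and has zero label entropy, while the balanced device has zero distance and the maximum entropy for ten classes, which is the kind of signal a selection rule could use.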

FheFL: Fully Homomorphic Encryption Friendly Privacy-Preserving Federated Learning with Byzantine Users

Jun 26, 2023

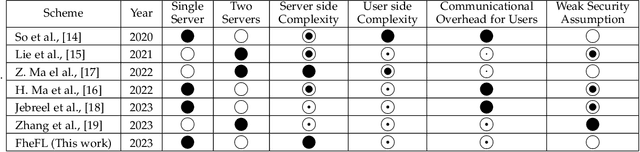

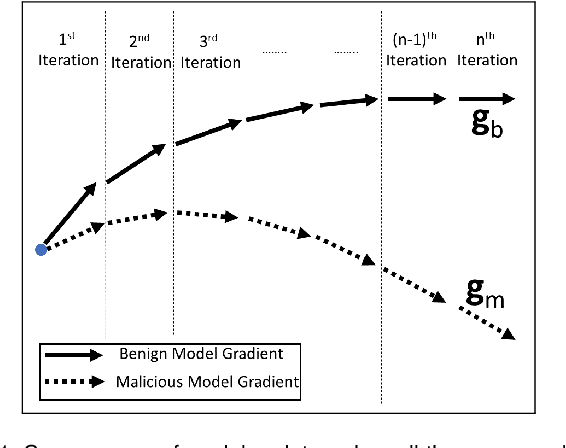

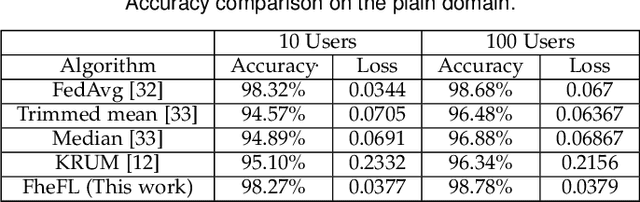

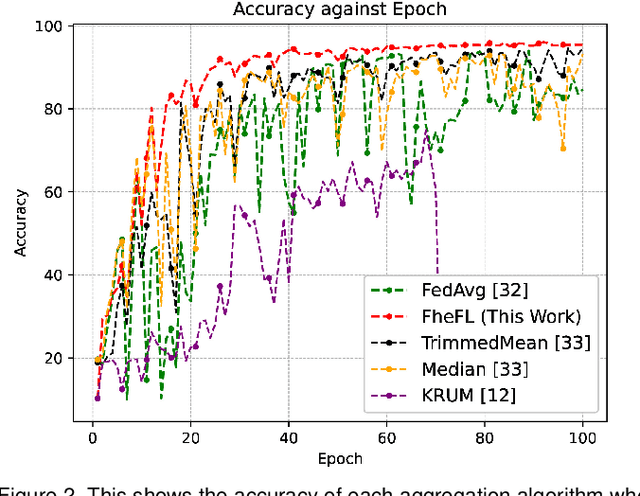

Abstract: The federated learning (FL) technique was developed to mitigate data privacy issues in the traditional machine learning paradigm. While FL ensures that a user's data always remains with the user, the gradients are shared with the centralized server to build the global model. This results in privacy leakage, where the server can infer private information from the shared gradients. To mitigate this flaw, next-generation FL architectures proposed encryption and anonymization techniques to protect the model updates from the server. However, this approach creates other challenges, such as malicious users sharing false gradients. Since the gradients are encrypted, the server is unable to identify rogue users. To mitigate both attacks, this paper proposes a novel FL algorithm based on a fully homomorphic encryption (FHE) scheme. We develop a distributed multi-key additive homomorphic encryption scheme that supports model aggregation in FL. We also develop a novel aggregation scheme within the encrypted domain, utilizing users' non-poisoning rates, to effectively address data poisoning attacks while ensuring privacy is preserved by the proposed encryption scheme. Rigorous security, privacy, convergence, and experimental analyses have been provided to show that FheFL is novel, secure, and private, and achieves comparable accuracy at reasonable computational cost.

Recursive Euclidean Distance Based Robust Aggregation Technique For Federated Learning

Mar 20, 2023

Abstract: Federated learning has gained popularity as a solution to data availability and privacy challenges in machine learning. However, the aggregation of local model updates into a global model is susceptible to malicious attacks, such as backdoor poisoning, label-flipping, and membership inference. Malicious users aim to sabotage the collaborative learning process by training the local model with malicious data. In this paper, we propose a novel robust aggregation approach based on recursive Euclidean distance calculation. Our approach measures the distance of the local models from the previous global model and assigns weights accordingly. Local models far away from the global model are assigned smaller weights to minimize the data poisoning effect during aggregation. Our experiments demonstrate that the proposed algorithm outperforms state-of-the-art algorithms by at least 5% in accuracy while reducing time complexity by less than 55%. Our contribution is significant as it addresses the critical issue of malicious attacks in federated learning while improving the accuracy of the global model.
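The weighting idea described above can be sketched as follows. The abstract only states that models farther from the previous global model receive smaller weights; the inverse-distance rule, the `eps` guard, and the function names `distance_weights` and `robust_aggregate` are assumptions chosen for illustration, not the paper's exact formula.

```python
import math

def distance_weights(local_models, prev_global, eps=1e-12):
    """Normalized inverse-distance weights: models closer to the previous
    global model get larger weights. (Assumed weighting rule.)"""
    inverse = [1.0 / (math.dist(m, prev_global) + eps) for m in local_models]
    total = sum(inverse)
    return [w / total for w in inverse]

def robust_aggregate(local_models, prev_global):
    """Weighted average of local models using the distance-based weights."""
    weights = distance_weights(local_models, prev_global)
    return [sum(w * x for w, x in zip(weights, col))
            for col in zip(*local_models)]

prev_global = [0.0, 0.0]
local_models = [[1.0, 0.0], [0.0, 1.0], [10.0, 10.0]]  # last model is far away

weights = distance_weights(local_models, prev_global)
new_global = robust_aggregate(local_models, prev_global)
plain_mean = [sum(col) / len(local_models) for col in zip(*local_models)]
```

Applied round after round, `new_global` would become `prev_global` for the next call. In this toy input the distant model gets the smallest weight, so the aggregate stays much closer to the two nearby models than the plain mean does.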
