Chang-Ryeol Lee

Gyroscope-aided Relative Pose Estimation for Rolling Shutter Cameras

Apr 14, 2019

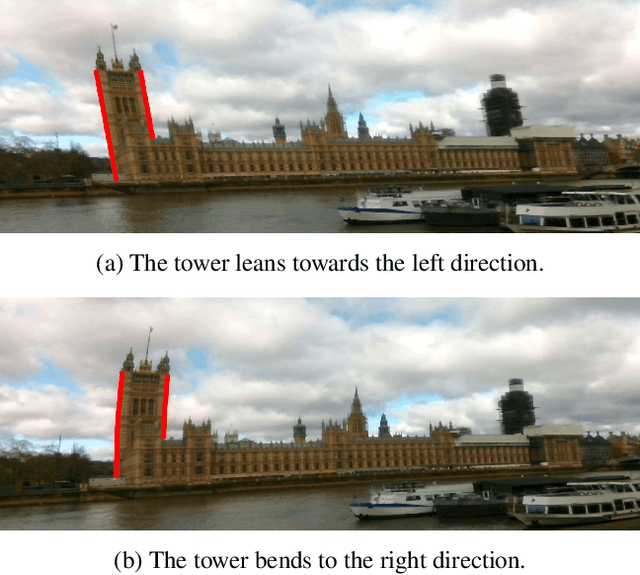

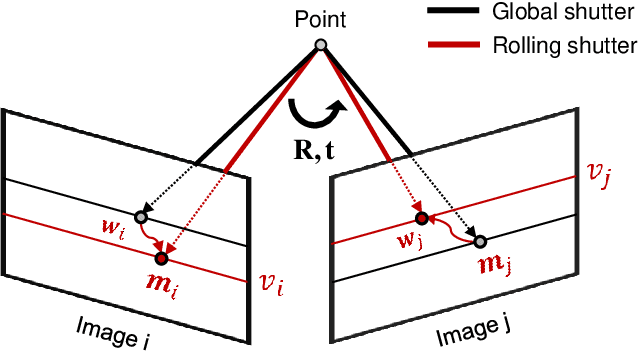

Abstract: The rolling shutter camera has received great attention due to its low-cost imaging capability. However, estimating the relative pose between rolling shutter cameras remains a difficult problem owing to their line-by-line image capture. To alleviate this problem, we exploit gyroscope measurements (angular velocity) together with image measurements to compute the relative pose between rolling shutter cameras. The gyroscope measurements provide information about the instantaneous motion that causes the rolling shutter distortion. With gyroscope measurements in hand, we simplify the relative pose estimation problem and find a minimal solution based on a Gröbner basis polynomial solver. The proposed method requires only five points to compute the relative pose between rolling shutter cameras, whereas previous methods require 20 or 44 corresponding points for linear and uniform rolling shutter geometry models, respectively. Experimental results on synthetic and real data verify the superiority of the proposed method over existing relative pose estimation methods.
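To make the role of the gyroscope concrete, the following is a minimal sketch of how an angular velocity reading could be turned into a per-row rotation for a rolling shutter image. It assumes the angular velocity is constant during the frame readout and that each image row is captured a fixed `line_delay` seconds after the previous one. The function names and the `line_delay` parameter are hypothetical, chosen for illustration; this is not the paper's Gröbner basis solver, only the rotation model that such gyroscope measurements would feed.

```python
import math

def rodrigues(omega, dt):
    """Rotation matrix for a constant angular velocity omega (rad/s) held for dt seconds."""
    rx, ry, rz = (w * dt for w in omega)
    theta = math.sqrt(rx * rx + ry * ry + rz * rz)
    if theta < 1e-12:
        # Small-angle fallback: first-order approximation I + [r]x.
        return [[1.0, -rz, ry], [rz, 1.0, -rx], [-ry, rx, 1.0]]
    kx, ky, kz = rx / theta, ry / theta, rz / theta
    c, s = math.cos(theta), math.sin(theta)
    v = 1.0 - c
    # Rodrigues' formula: R = cos(theta) I + sin(theta) [k]x + (1 - cos(theta)) k k^T
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]

def row_rotation(omega, row, line_delay):
    """Rotation accumulated between the capture of row 0 and the given row.

    Assumes (hypothetically) a constant angular velocity during readout and
    a fixed per-row delay `line_delay` in seconds.
    """
    return rodrigues(omega, row * line_delay)
```

Under these assumptions, the rotation of every row is known from the gyroscope alone, which is what lets the remaining unknowns of the relative pose problem shrink.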

Inertial-aided Rolling Shutter Relative Pose Estimation

Dec 01, 2017

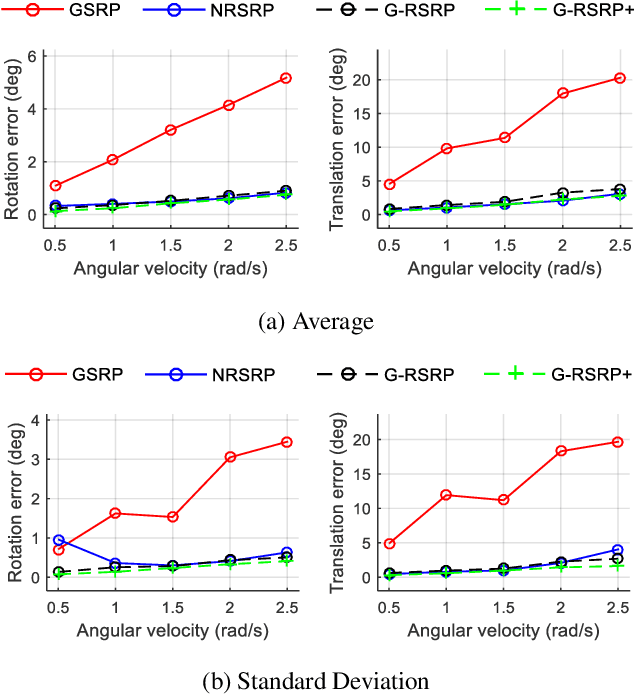

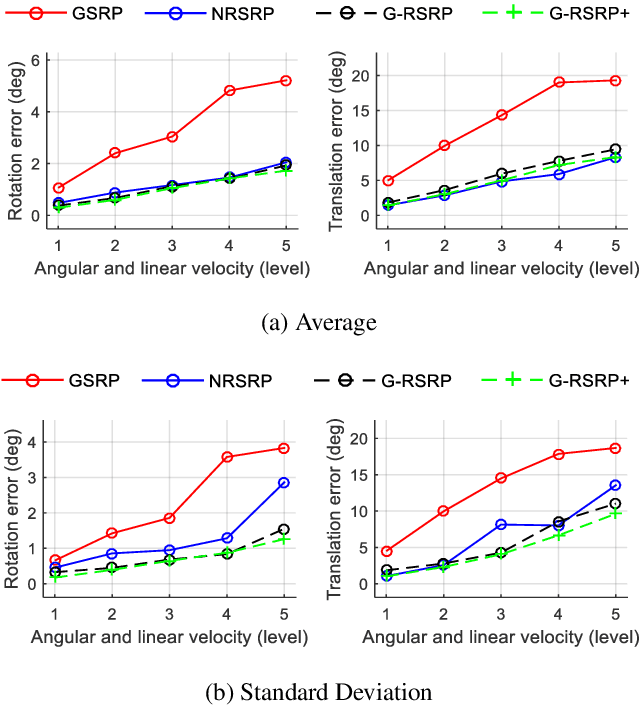

Abstract: Relative pose estimation is a fundamental problem in computer vision and has been studied for conventional global shutter cameras for decades. Recently, however, rolling shutter cameras have become widely used due to their low-cost imaging capability. Because a rolling shutter camera captures the image line by line, its relative pose estimation is more difficult than that of a global shutter camera. In this paper, we propose to exploit inertial measurements (gravity and angular velocity) for the rolling shutter relative pose estimation problem. The inertial measurements provide information about the partial relative rotation between two views (cameras) and the instantaneous motion that causes the rolling shutter distortion. Based on this information, we simplify the rolling shutter relative pose estimation problem and propose effective methods to solve it. Unlike the previous methods, which require 44 (linear) or 17 (nonlinear) points with the uniform rolling shutter camera model, the proposed methods require at most 9 or 11 points to estimate the relative pose between the rolling shutter cameras. Experimental results on synthetic data and the public PennCOSYVIO dataset show that the proposed methods outperform the existing methods.

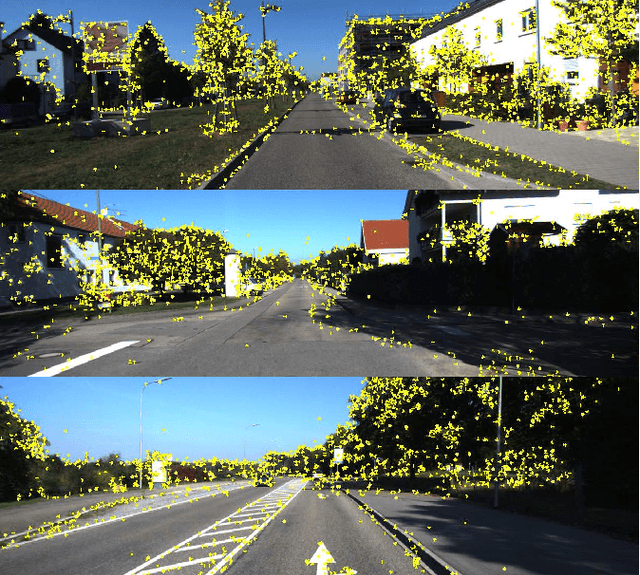

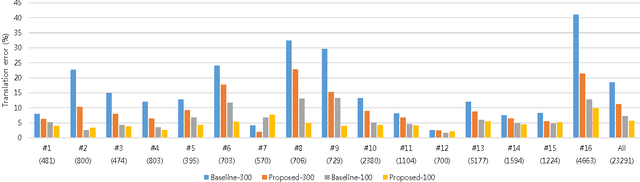

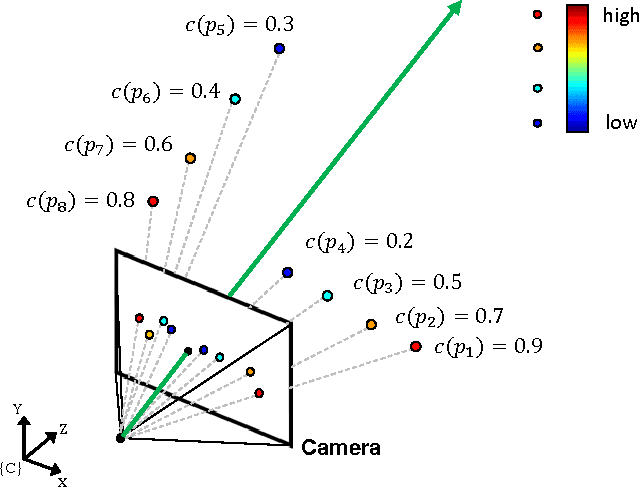

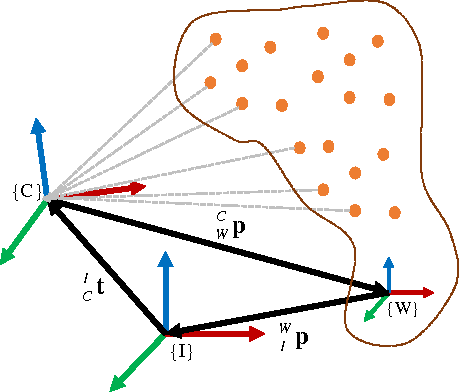

Exploiting Feature Confidence for Forward Motion Estimation

Aug 13, 2017

Abstract: Visual-Inertial Odometry (VIO) utilizes an Inertial Measurement Unit (IMU) to overcome the limitations of Visual Odometry (VO). However, VIO for vehicles in large-scale outdoor environments still has difficulty estimating forward motion with distant features. To address this, we propose a robust VIO method based on the analysis of feature confidence in forward motion estimation using an IMU. We first formulate the VIO problem using trifocal tensor geometry. Then, we infer the feature confidence from the motion information obtained from an IMU and incorporate the confidence into the Bayesian estimation framework. Experimental results on the public KITTI dataset show that the proposed VIO outperforms the baseline VIO and demonstrate the effectiveness of the proposed feature confidence analysis and confidence-incorporated egomotion estimation framework.

Monocular Visual Odometry with a Rolling Shutter Camera

Apr 24, 2017

Abstract: Rolling Shutter (RS) cameras have become popular because of their low-cost imaging capability. However, RS cameras suffer from undesirable artifacts when the camera or the subject is moving, or when the illumination conditions change. For that reason, Monocular Visual Odometry (MVO) with RS cameras produces inaccurate ego-motion estimates. Previous works address this RS distortion problem with motion prediction from images and/or inertial sensors. However, MVO still has trouble handling the RS distortion when the camera motion changes abruptly (e.g., vibration of mobile cameras causes extremely fast instantaneous motion). To address the problem, we propose a novel MVO algorithm that takes the geometric characteristics of RS cameras into account. The key idea of the proposed algorithm is a new RS essential matrix that incorporates the instantaneous angular and linear velocities at each frame. Our algorithm produces accurate and robust ego-motion estimates in an online manner and is applicable to various mobile applications with RS cameras. The superiority of the proposed algorithm is validated through quantitative and qualitative comparison on both synthetic and real datasets.
