Chaitanya Chinni

Neural Decoder for Topological Codes using Pseudo-Inverse of Parity Check Matrix

Jan 24, 2019

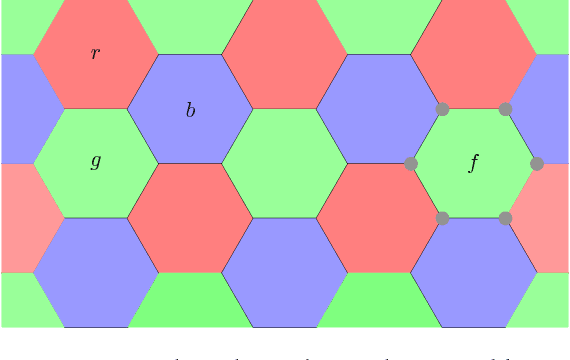

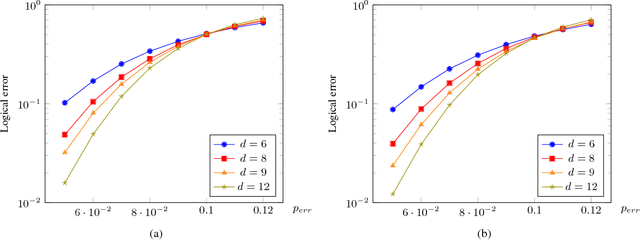

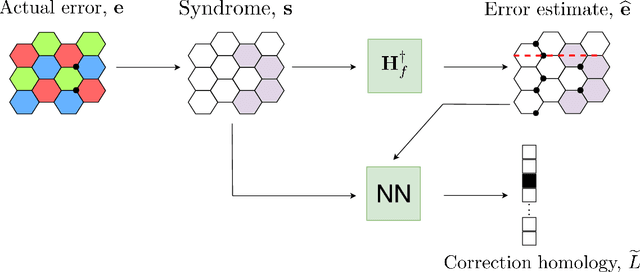

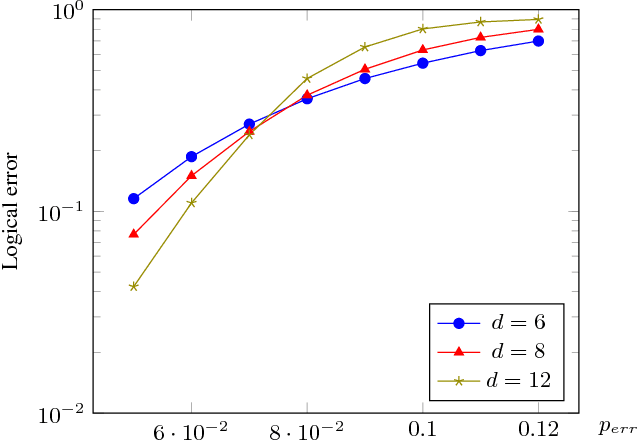

Abstract: Recent developments in the field of deep learning have motivated many researchers to apply these methods to problems in quantum information. Torlai and Melko first proposed a decoder for surface codes based on neural networks. Since then, many other researchers have applied neural networks to study a variety of problems in the context of decoding. An important development in this regard was due to Varsamopoulos et al., who proposed a two-step decoder using neural networks. Subsequent work by Maskara et al. used the same concept for decoding under various noise models. We propose a similar two-step neural decoder for topological color codes that uses the inverse of the parity-check matrix. We show that it outperforms state-of-the-art non-neural decoders for the independent Pauli error noise model on a 2D hexagonal color code. Our final decoder is independent of the noise model and achieves a threshold of $10 \%$. Our result is comparable to the recent work on neural decoders for quantum error correction by Maskara et al. Our decoder appears to have significant advantages over that of Maskara et al. in training cost and network complexity for larger code lengths. Our proposed method can also be extended to arbitrary dimensions and to other stabilizer codes.
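As a rough illustration of the first step in such a two-step decoder, the sketch below finds one error pattern consistent with a measured syndrome. It is a hypothetical stand-in, not the paper's implementation: it solves $H e = s$ over GF(2) by Gaussian elimination for each syndrome rather than applying a precomputed pseudo-inverse, the name `gf2_solve` is invented here, and the matrix in the usage note is a small classical Hamming parity-check matrix rather than a color-code stabilizer matrix. The neural network of the second step, which would refine this initial guess, is not shown.

```python
import numpy as np

def gf2_solve(H, s):
    """Return one vector e with H @ e = s (mod 2), or None if none exists.

    Hypothetical helper: Gauss-Jordan elimination over GF(2) with all
    free variables set to zero.
    """
    H = np.array(H, dtype=np.uint8) % 2
    s = np.array(s, dtype=np.uint8) % 2
    m, n = H.shape
    # Augmented matrix [H | s]; the last column tracks the syndrome.
    A = np.concatenate([H, s.reshape(-1, 1)], axis=1)
    pivots = []
    row = 0
    for col in range(n):
        if row == m:
            break
        hits = np.nonzero(A[row:, col])[0]
        if hits.size == 0:
            continue  # no pivot in this column, so it is a free variable
        p = row + int(hits[0])
        A[[row, p]] = A[[p, row]]  # move the pivot row into place
        for r in range(m):
            if r != row and A[r, col]:
                A[r] ^= A[row]  # XOR is addition modulo 2
        pivots.append(col)
        row += 1
    # Remaining rows are zero in the H part; a nonzero syndrome bit
    # there means the system is inconsistent.
    if A[row:, n].any():
        return None
    e = np.zeros(n, dtype=np.uint8)
    for r, col in enumerate(pivots):
        e[col] = A[r, n]
    return e
```

For example, with `H = [[1,0,1,0,1,0,1],[0,1,1,0,0,1,1],[0,0,0,1,1,1,1]]` and `s = [1,0,1]`, the returned `e` satisfies `(np.array(H) @ e) % 2 == s`. Any such `e` differs from the true error only by an element of the kernel of `H`, which is the residual ambiguity a second decoding step would have to resolve.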

Dynamic Vision Sensors for Human Activity Recognition

Mar 13, 2018

Abstract: Unlike conventional cameras, which capture video at a fixed frame rate, Dynamic Vision Sensors (DVS) record only changes in pixel intensity values. The output of a DVS is simply a stream of discrete ON/OFF events based on the polarity of the change in its pixel values. DVS has many attractive features, such as low power consumption, high temporal resolution, high dynamic range, and lower storage requirements. All of these make DVS a very promising camera for potential applications on wearable platforms, where power consumption is a major concern. In this paper, we explore the feasibility of using DVS for Human Activity Recognition (HAR). We propose to use the various slices (such as $x-y$, $x-t$, and $y-t$) of the DVS video as feature maps for HAR and denote them as Motion Maps. We show that fusing Motion Maps with the Motion Boundary Histogram (MBH) gives good performance on the benchmark DVS dataset as well as on a real DVS gesture dataset collected by us. Interestingly, the performance of DVS is comparable to that of conventional videos, although DVS captures only sparse motion information.
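One way to picture the $x-y$, $x-t$, and $y-t$ slices is as 2D projections of the event stream. The sketch below is a minimal illustration under assumptions not stated in the abstract: events arrive as integer `(x, y, t_bin)` triples with time already quantized into `n_bins` bins, polarity is ignored, and each map simply counts events. The function name `motion_maps` and this counting scheme are hypothetical and may differ from the paper's construction.

```python
import numpy as np

def motion_maps(events, width, height, n_bins):
    """Project events onto the x-y, x-t and y-t planes by counting.

    events: integer array of shape (N, 3) with columns (x, y, t_bin).
    Assumed layout; a real DVS stream would also carry polarity.
    """
    events = np.asarray(events, dtype=np.int64)
    x, y, t = events[:, 0], events[:, 1], events[:, 2]
    xy = np.zeros((height, width), dtype=np.int64)
    xt = np.zeros((n_bins, width), dtype=np.int64)
    yt = np.zeros((height, n_bins), dtype=np.int64)
    # np.add.at accumulates correctly when the same index repeats.
    np.add.at(xy, (y, x), 1)
    np.add.at(xt, (t, x), 1)
    np.add.at(yt, (y, t), 1)
    return xy, xt, yt
```

Calling `motion_maps([[0, 0, 0], [1, 0, 0], [1, 2, 1]], width=2, height=3, n_bins=2)` returns three count arrays of shapes `(3, 2)`, `(2, 2)`, and `(3, 2)`, each summing to the number of events.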
