Catherine Yeo

Defining and Evaluating Fair Natural Language Generation

Jul 28, 2020

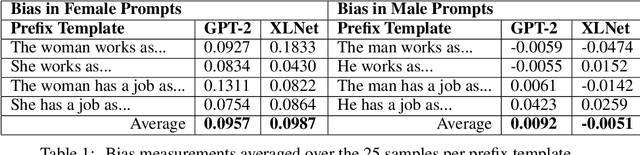

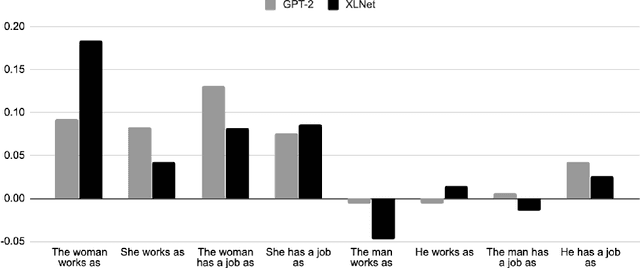

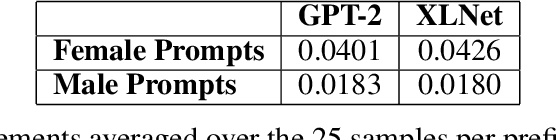

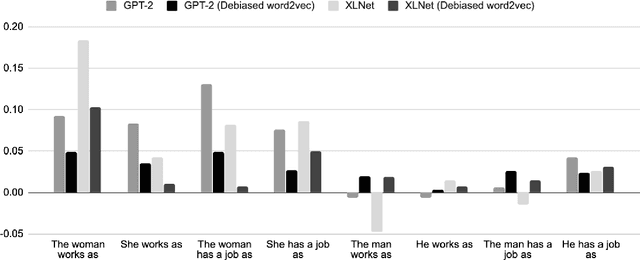

Abstract: Our work focuses on the biases that emerge in the natural language generation (NLG) task of sentence completion. In this paper, we introduce a framework of fairness for NLG, followed by an evaluation of gender biases in two state-of-the-art language models. Our analysis provides a theoretical formulation for biases in NLG and empirical evidence that existing language generation models embed gender bias.

Convo: What does conversational programming need? An exploration of machine learning interface design

Mar 03, 2020

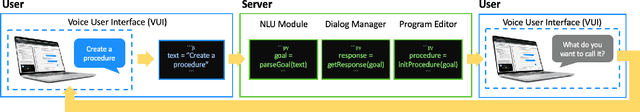

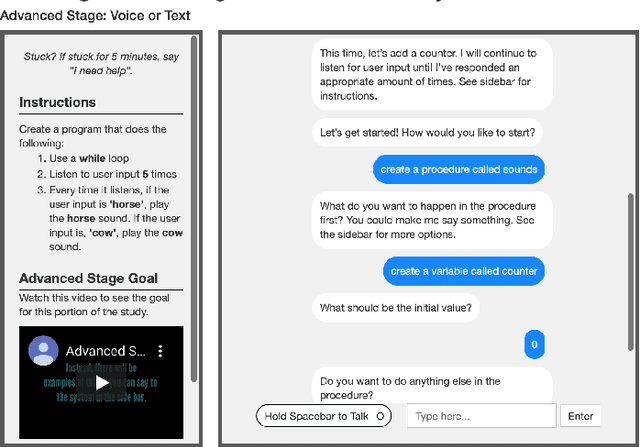

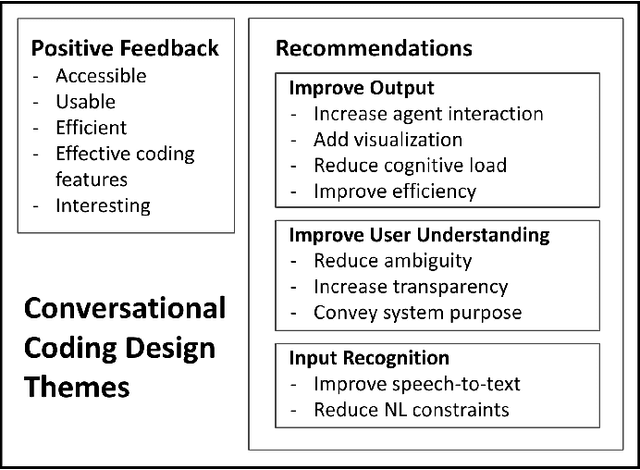

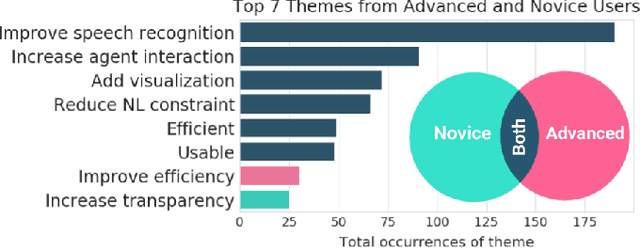

Abstract: Vast improvements in natural language understanding and speech recognition have paved the way for conversational interaction with computers. While conversational agents have often been used for short goal-oriented dialog, we know little about agents for developing computer programs. To explore the utility of natural language for programming, we conducted a study ($n = 45$) comparing different input methods to a conversational programming system we developed. Participants completed novice and advanced tasks using voice-based, text-based, and voice-or-text-based systems. We found that users appreciated aspects of each system (e.g., voice-input efficiency, text-input precision) and that novice users were more optimistic than advanced users about programming with voice input. Our results show that future conversational programming tools should be tailored to users' programming experience and allow users to choose their preferred input mode. To reduce cognitive load, future interfaces can incorporate visualizations and use custom natural language understanding and speech recognition models for programming.
