Carsten Gottschlich

DOTmark - A Benchmark for Discrete Optimal Transport

Oct 11, 2016

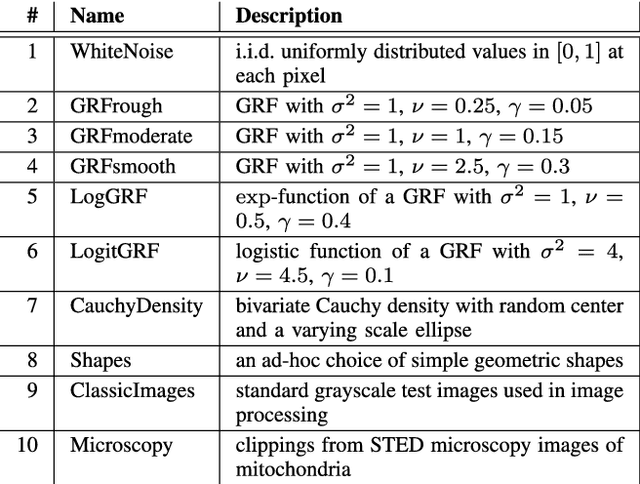

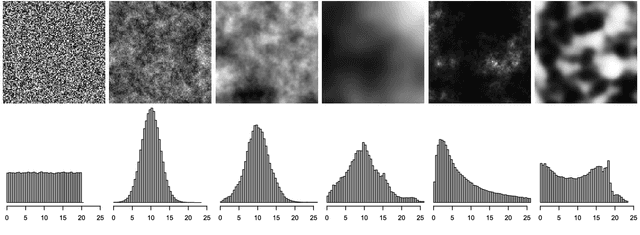

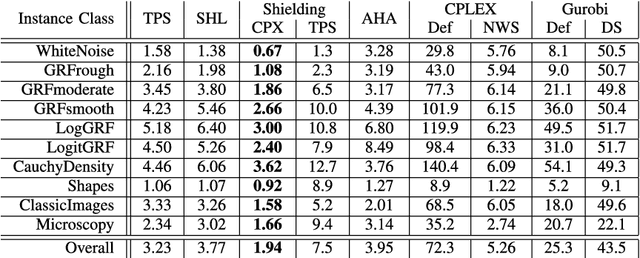

Abstract: The Wasserstein metric or earth mover's distance (EMD) is a useful tool in statistics, machine learning and computer science with many applications to biological or medical imaging, among others. Especially in the light of increasingly complex data, the computation of these distances via optimal transport is often the limiting factor. Inspired by this challenge, a variety of new approaches to optimal transport have been proposed in recent years, and along with these new methods comes the need for a meaningful comparison. In this paper, we introduce a benchmark for discrete optimal transport, called DOTmark, which is designed to serve as a neutral collection of problems, where discrete optimal transport methods can be tested, compared to one another, and brought to their limits on large-scale instances. It consists of a variety of grayscale images, in various resolutions and classes, such as several types of randomly generated images, classical test images and real data from microscopy. Along with DOTmark, we present a survey and a performance test for a cross section of established methods ranging from more traditional algorithms, such as the transportation simplex, to recently developed approaches, such as the shielding neighborhood method, and also including a comparison with commercial solvers.
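As a minimal illustration of the quantity being benchmarked, the sketch below computes the earth mover's distance in the one-dimensional special case, where it reduces to the summed absolute difference of the two cumulative distributions. This is not one of the methods surveyed in the paper: DOTmark concerns two-dimensional images, for which no such closed form exists. The function name `emd_1d`, the unit grid spacing and the ground cost |i - j| are assumptions made for this example.

```python
from itertools import accumulate


def emd_1d(p, q):
    """Earth mover's distance between two histograms on the same 1D grid.

    Assumes unit spacing between neighbouring bins and ground cost |i - j|.
    Both histograms are normalised to total mass 1 before comparison.
    """
    if len(p) != len(q):
        raise ValueError("histograms must have the same number of bins")
    total_p, total_q = sum(p), sum(q)
    if total_p <= 0 or total_q <= 0:
        raise ValueError("histograms must have positive total mass")

    # Normalise so that both histograms are probability distributions.
    p_norm = [x / total_p for x in p]
    q_norm = [x / total_q for x in q]

    # In 1D the optimal transport cost equals the L1 distance between the CDFs.
    cdf_p = accumulate(p_norm)
    cdf_q = accumulate(q_norm)
    return sum(abs(a - b) for a, b in zip(cdf_p, cdf_q))


# Moving all mass from the first bin to the third bin costs two unit steps.
distance = emd_1d([1, 0, 0], [0, 0, 1])
```

For images, the same problem becomes a linear program over all pixel pairs, which is why computation time grows quickly with resolution.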

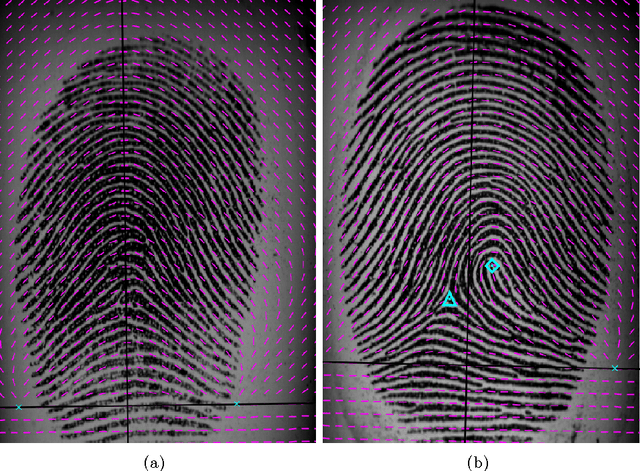

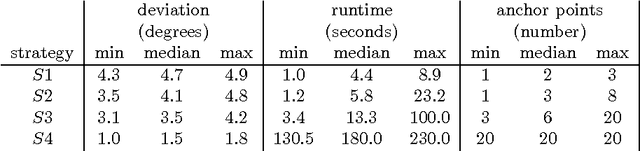

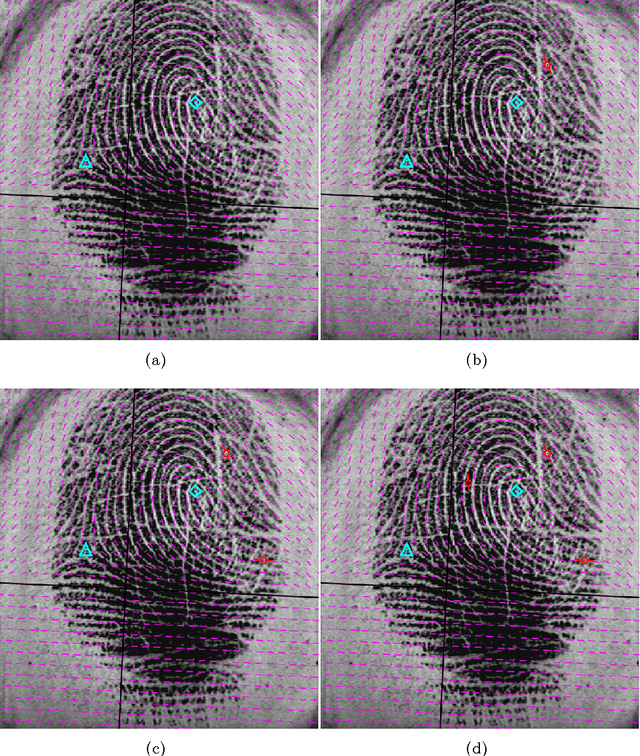

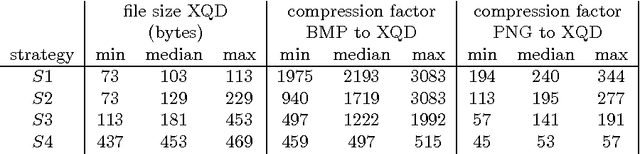

Perfect Fingerprint Orientation Fields by Locally Adaptive Global Models

Jun 20, 2016

Abstract: Fingerprint recognition is widely used for verification and identification in many commercial, governmental and forensic applications. The orientation field (OF) plays an important role at various processing stages in fingerprint recognition systems. OFs are used for image enhancement, fingerprint alignment, fingerprint liveness detection, fingerprint alteration detection and fingerprint matching. In this paper, a novel approach is presented to globally model an OF combined with locally adaptive methods. We show that this model adapts perfectly to the 'true OF' in the limit. This perfect OF is described by a small number of parameters with straightforward geometric interpretation. Applications are manifold: quick expert marking of very poor quality (for instance latent) OFs, high-fidelity low-parameter OF compression, and a direct road to ground truth OF markings for large databases. In this contribution we describe an algorithm to perfectly estimate OF parameters automatically or semi-automatically, depending on image quality, and we establish the main underlying claim of high-fidelity low-parameter OF compression.

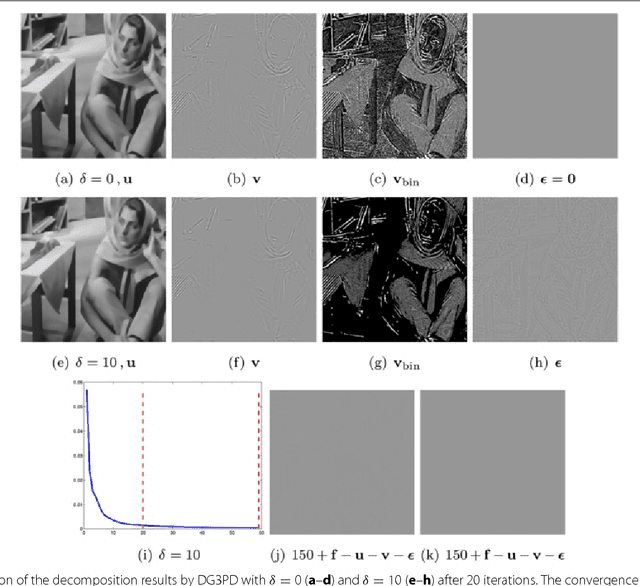

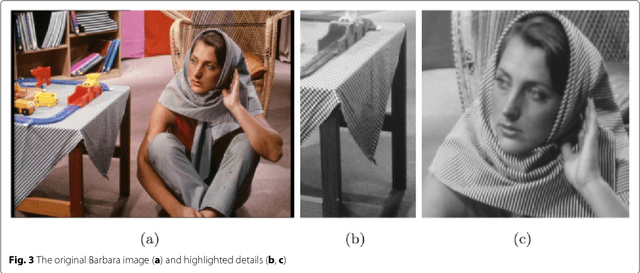

Simultaneous Inpainting and Denoising by Directional Global Three-part Decomposition: Connecting Variational and Fourier Domain Based Image Processing

Jun 09, 2016

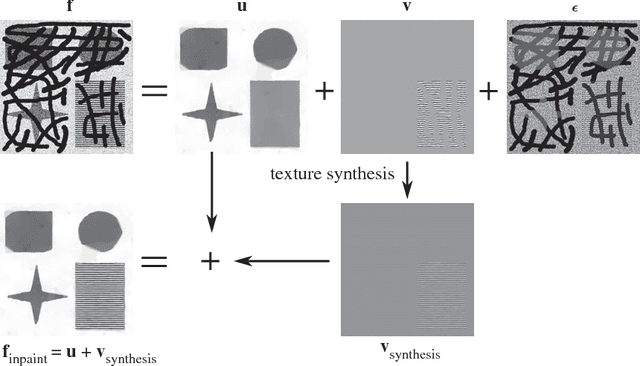

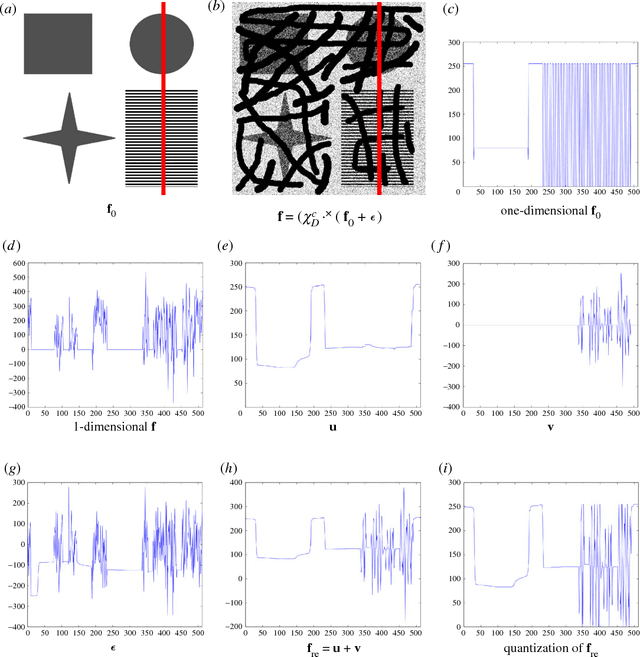

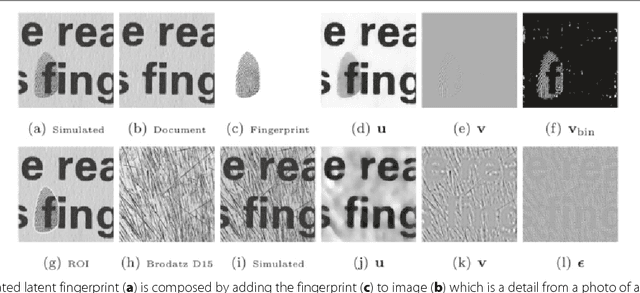

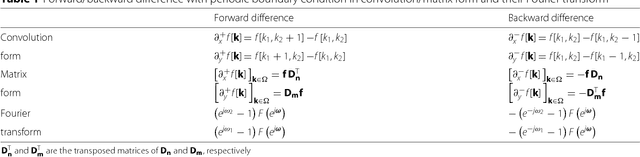

Abstract: We consider the very challenging task of restoring images (i) which have a large number of missing pixels, (ii) whose existing pixels are corrupted by noise and (iii) whose underlying ideal image contains both cartoon and texture elements. The combination of these three properties makes this inverse problem a very difficult one. The solution proposed in this manuscript is based on directional global three-part decomposition (DG3PD) [ThaiGottschlich2016] with directional total variation norm, directional G-norm and $\ell_\infty$-norm in the curvelet domain as key ingredients of the model. Image decomposition by DG3PD enables a decoupled inpainting and denoising of the cartoon and texture components. A comparison to existing approaches for inpainting and denoising shows the advantages of the proposed method. Moreover, we regard the image restoration problem from the viewpoint of a Bayesian framework and we discuss the connections between the proposed solution by function space and related image representation by harmonic analysis and pyramid decomposition.

Directional Global Three-part Image Decomposition

Oct 06, 2015

Abstract: We consider the task of image decomposition and we introduce a new model coined directional global three-part decomposition (DG3PD) for solving it. As key ingredients of the DG3PD model, we introduce a discrete multi-directional total variation norm and a discrete multi-directional G-norm. Using these novel norms, the proposed discrete DG3PD model can decompose an image into two parts or into three parts. Existing models for image decomposition by Vese and Osher, by Aujol and Chambolle, by Starck et al., and by Thai and Gottschlich are included as special cases in the new model. Decomposition of an image by DG3PD results in a cartoon image, a texture image and a residual image. Advantages of the DG3PD model over existing ones lie in the properties enforced on the cartoon and texture images. The geometric objects in the cartoon image have a very smooth surface and sharp edges. The texture image yields oscillating patterns on a defined scale which is both smooth and sparse. Moreover, the DG3PD method achieves the goal of perfect reconstruction by summation of all components better than the other considered methods. Relevant applications of DG3PD are a novel way of image compression as well as feature extraction for applications such as latent fingerprint processing and optical character recognition.

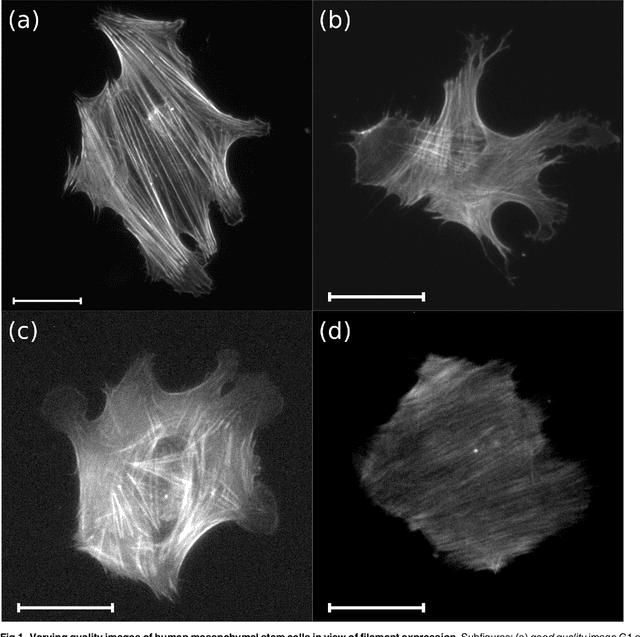

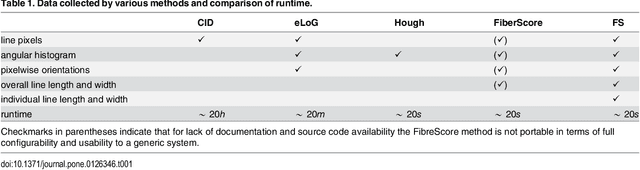

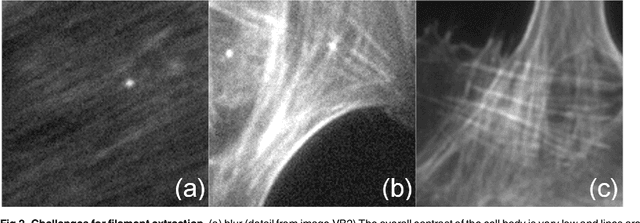

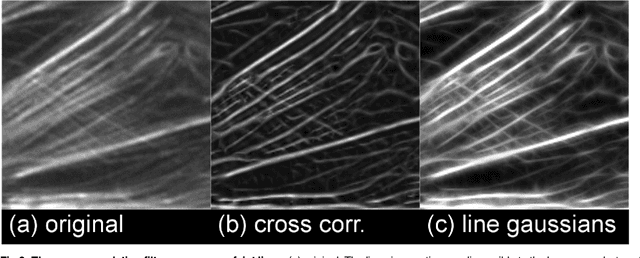

The Filament Sensor for Near Real-Time Detection of Cytoskeletal Fiber Structures

Jul 14, 2015

Abstract: A reliable extraction of filament data from microscopic images is of high interest in the analysis of acto-myosin structures as early morphological markers in mechanically guided differentiation of human mesenchymal stem cells and the understanding of the underlying fiber arrangement processes. In this paper, we propose the filament sensor (FS), a fast and robust processing sequence which detects and records location, orientation, length and width for each single filament of an image, and thus allows for the above described analysis. The extraction of these features has previously not been possible with existing methods. We evaluate the performance of the proposed FS in terms of accuracy and speed in comparison to three existing methods with respect to their limited output. Further, we provide a benchmark dataset of real cell images along with filaments manually marked by a human expert as well as simulated benchmark images. The FS clearly outperforms existing methods in terms of computational runtime and filament extraction accuracy. The implementation of the FS and the benchmark database are available as open source.

* 32 pages, 21 figures

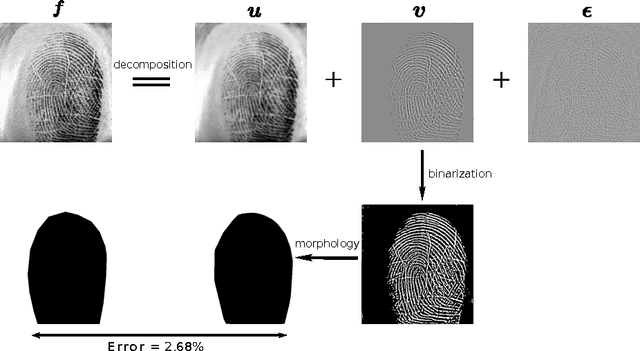

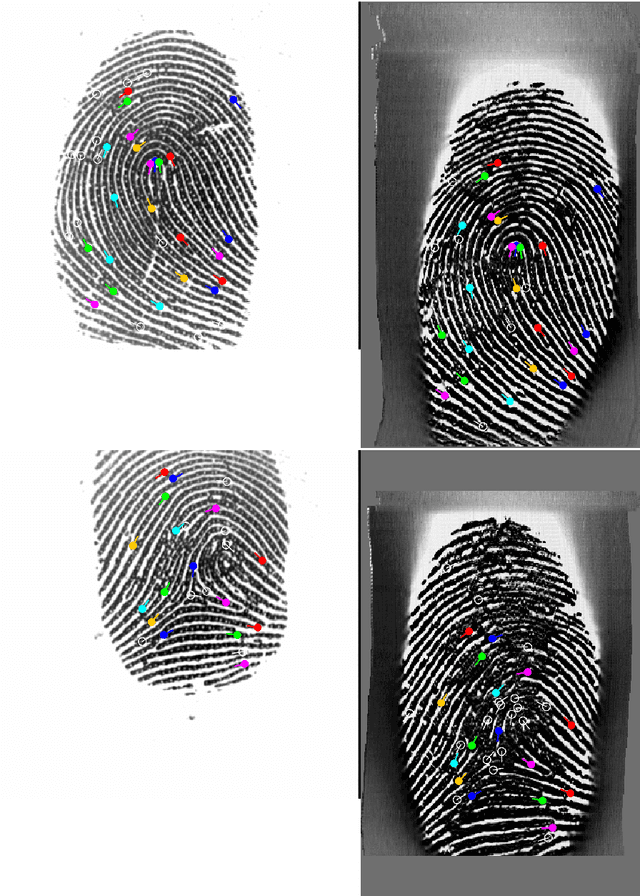

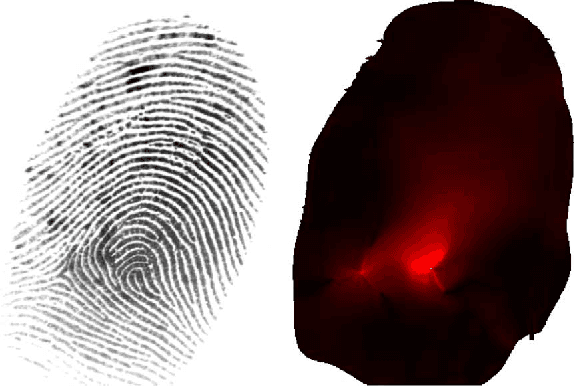

Global Variational Method for Fingerprint Segmentation by Three-part Decomposition

May 18, 2015

Abstract: Verifying an identity claim by fingerprint recognition is a commonplace experience for millions of people in their daily life, e.g. for unlocking a tablet computer or smartphone. The first processing step after fingerprint image acquisition is segmentation, i.e. dividing a fingerprint image into a foreground region which contains the relevant features for the comparison algorithm, and a background region. We propose a novel segmentation method by global three-part decomposition (G3PD). Based on global variational analysis, the G3PD method decomposes a fingerprint image into cartoon, texture and noise parts. After decomposition, the foreground region is obtained from the non-zero coefficients in the texture image using morphological processing. The segmentation performance of the G3PD method is compared to five state-of-the-art methods on a benchmark which comprises manually marked ground truth segmentation for 10560 images. Performance evaluations show that the G3PD method consistently outperforms existing methods in terms of segmentation accuracy.

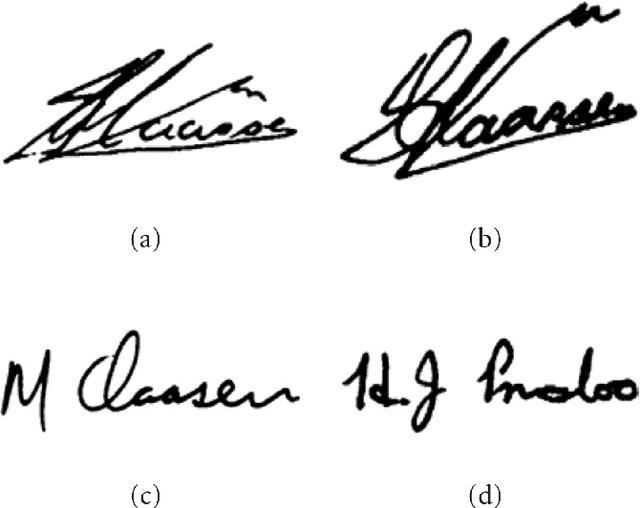

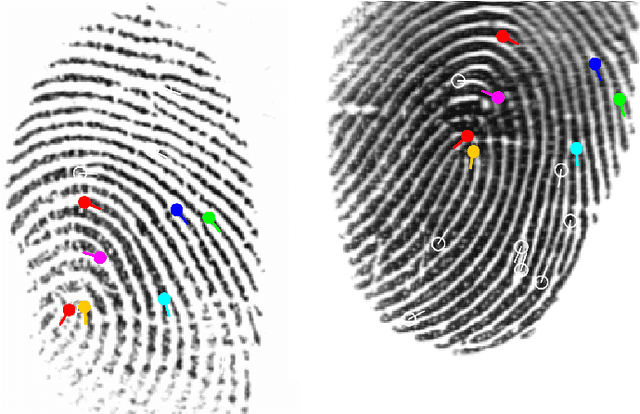

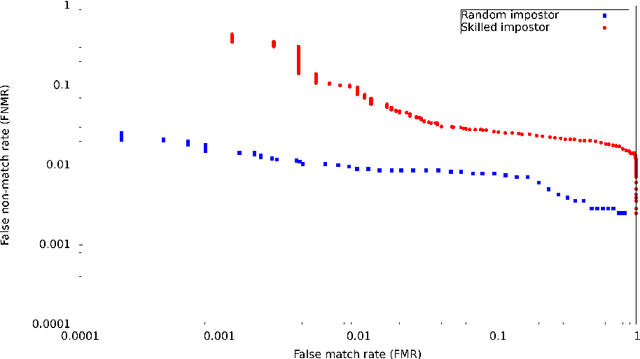

Skilled Impostor Attacks Against Fingerprint Verification Systems And Its Remedy

Mar 16, 2015

Abstract: Fingerprint verification systems are becoming ubiquitous in everyday life. This trend is propelled especially by the proliferation of mobile devices with fingerprint sensors such as smartphones and tablet computers, and fingerprint verification is increasingly applied for authenticating financial transactions. In this study we describe a novel attack vector against fingerprint verification systems for which we coin the term skilled impostor attack. We show that existing protocols for performance evaluation of fingerprint verification systems are flawed and that, as a consequence, the system's real vulnerability is systematically underestimated. We examine a scenario in which a fingerprint verification system is tuned to operate at a false acceptance rate of 0.1% using the traditional verification protocols with random impostors (zero-effort attacks). We demonstrate that an active and intelligent attacker can achieve a chance of success in the area of 89% or more against this system by performing skilled impostor attacks. We describe a new protocol for evaluating fingerprint verification performance in order to improve the assessment of the potential and limitations of fingerprint recognition systems. This new evaluation protocol enables a more informed decision concerning the operating threshold in practical applications and the respective trade-off between security (low false acceptance rates) and usability (low false rejection rates). The skilled impostor attack is a general attack concept which is independent of specific databases or comparison algorithms. The proposed protocol relying on skilled impostor attacks can directly be applied for evaluating the verification performance of other biometric modalities such as iris, face, ear, finger vein, gait or speaker recognition.
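The trade-off between security and usability mentioned in the abstract can be made concrete with a small sketch that computes the false acceptance rate and false rejection rate at a given operating threshold. It illustrates the traditional evaluation with fixed score lists only, not the skilled impostor protocol proposed in the paper. The function name `far_frr`, the convention that higher scores mean greater similarity, and the example scores are all assumptions made for illustration.

```python
def far_frr(genuine_scores, impostor_scores, threshold):
    """Return (FAR, FRR) for similarity scores at a decision threshold.

    Assumed convention: a comparison is accepted when score >= threshold.
    FAR is the fraction of impostor comparisons that are accepted;
    FRR is the fraction of genuine comparisons that are rejected.
    """
    if not genuine_scores or not impostor_scores:
        raise ValueError("both score lists must be non-empty")
    false_accepts = sum(1 for s in impostor_scores if s >= threshold)
    false_rejects = sum(1 for s in genuine_scores if s < threshold)
    return (false_accepts / len(impostor_scores),
            false_rejects / len(genuine_scores))


# Hypothetical scores, chosen only to exercise the function.
genuine = [0.9, 0.8, 0.4]
impostor = [0.1, 0.2, 0.5, 0.3]
far, frr = far_frr(genuine, impostor, threshold=0.45)
```

Raising the threshold lowers the false acceptance rate at the cost of a higher false rejection rate, which is the trade-off an operating point has to balance.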

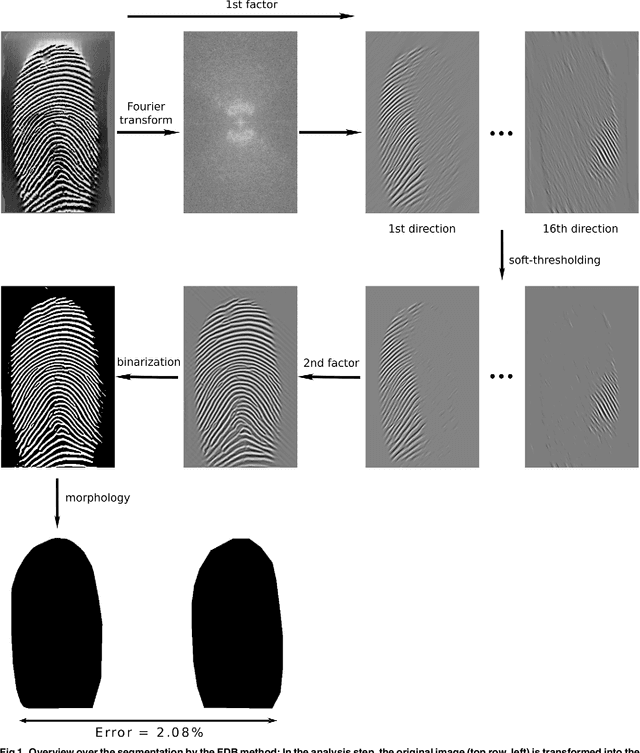

Filter Design and Performance Evaluation for Fingerprint Image Segmentation

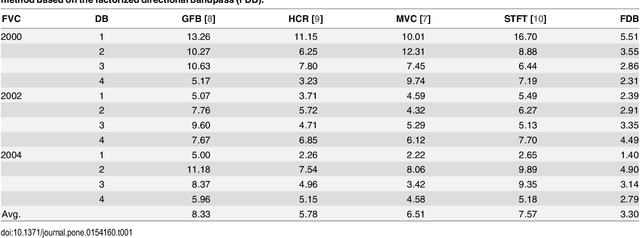

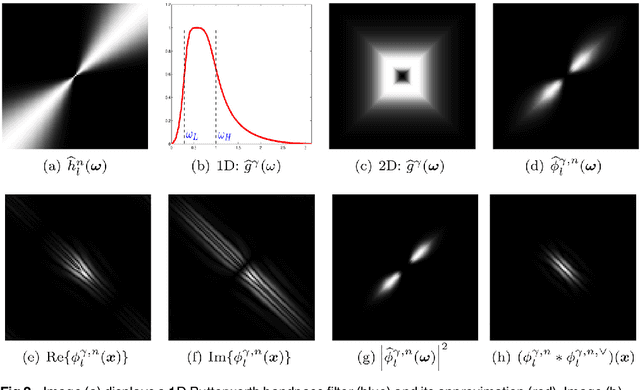

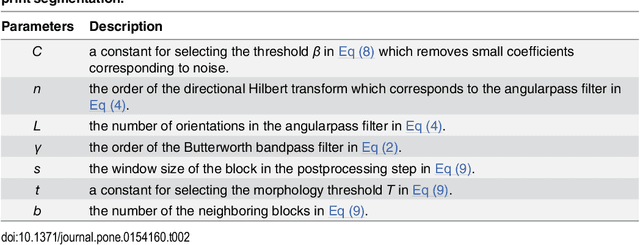

Jan 09, 2015

Abstract: Fingerprint recognition plays an important role in many commercial applications and is used by millions of people every day, e.g. for unlocking mobile phones. Fingerprint image segmentation is typically the first processing step of most fingerprint algorithms and it divides an image into foreground, the region of interest, and background. Two types of error can occur during this step which both have a negative impact on the recognition performance: 'true' foreground can be labeled as background and features like minutiae can be lost, or conversely 'true' background can be misclassified as foreground and spurious features can be introduced. The contribution of this paper is threefold: firstly, we propose a novel factorized directional bandpass (FDB) segmentation method for texture extraction based on the directional Hilbert transform of a Butterworth bandpass (DHBB) filter interwoven with soft-thresholding. Secondly, we provide a manually marked ground truth segmentation for 10560 images as an evaluation benchmark. Thirdly, we conduct a systematic performance comparison between the FDB method and four of the most often cited fingerprint segmentation algorithms showing that the FDB segmentation method clearly outperforms these four widely used methods. The benchmark and the implementation of the FDB method are made publicly available.
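The two error types described in the abstract can be counted directly once an estimated segmentation is compared against a ground truth mask. The sketch below does this pixel by pixel. The abstract does not specify the exact error measure used in the paper's benchmark, so the function name `segmentation_errors`, the mask representation (nested lists with truthy values for foreground) and the example masks are assumptions.

```python
def segmentation_errors(truth, estimate):
    """Count the two segmentation error types between two binary masks.

    Returns (missed_foreground, spurious_foreground):
      missed_foreground   - true foreground pixels labeled as background
      spurious_foreground - true background pixels labeled as foreground
    Masks are assumed to be equally sized lists of rows.
    """
    if len(truth) != len(estimate):
        raise ValueError("masks must have the same number of rows")
    missed_foreground = 0
    spurious_foreground = 0
    for truth_row, estimate_row in zip(truth, estimate):
        if len(truth_row) != len(estimate_row):
            raise ValueError("mask rows must have the same length")
        for is_foreground, labeled_foreground in zip(truth_row, estimate_row):
            if is_foreground and not labeled_foreground:
                missed_foreground += 1
            elif labeled_foreground and not is_foreground:
                spurious_foreground += 1
    return missed_foreground, spurious_foreground


# Hypothetical 2x3 masks: 1 marks foreground, 0 marks background.
truth_mask = [[1, 1, 0],
              [0, 1, 0]]
estimated_mask = [[1, 0, 0],
                  [1, 1, 0]]
missed, spurious = segmentation_errors(truth_mask, estimated_mask)
```

Keeping the two counts separate matters because they have different consequences: missed foreground can lose minutiae, while spurious foreground can introduce false features.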

Separating the Real from the Synthetic: Minutiae Histograms as Fingerprints of Fingerprints

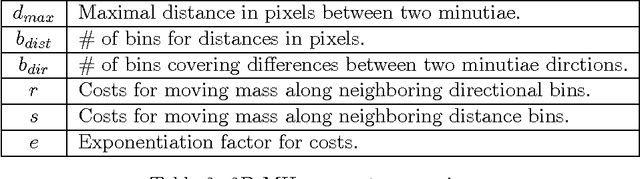

Oct 15, 2014

Abstract: In this study we show that fingerprints synthetically generated by the current state of the art can easily be discriminated from real fingerprints. We propose a method based on second order extended minutiae histograms (MHs) which can distinguish between real and synthetic prints with very high accuracy. MHs provide a fixed-length feature vector for a fingerprint which is invariant under rotation and translation. This 'test of realness' can be applied to synthetic fingerprints produced by any method. In this work, tests are conducted on the 12 publicly available databases of FVC2000, FVC2002 and FVC2004 which are well established benchmarks for evaluating the performance of fingerprint recognition algorithms; 3 of these 12 databases consist of artificial fingerprints generated by the SFinGe software. Additionally, we evaluate the discriminative performance on a database of synthetic fingerprints generated by the software of Bicz versus real fingerprint images. We conclude with suggestions for the improvement of synthetic fingerprint generation.

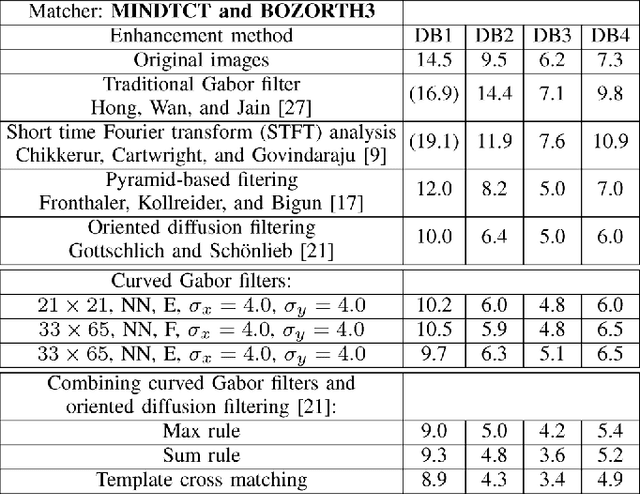

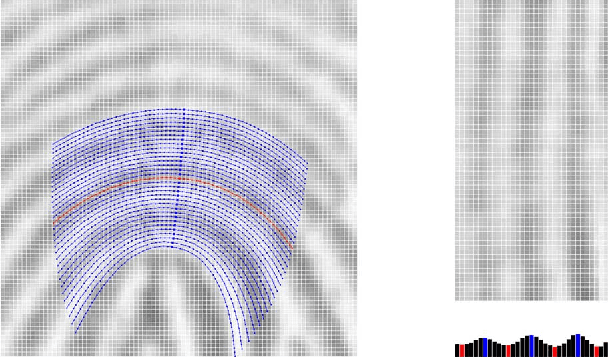

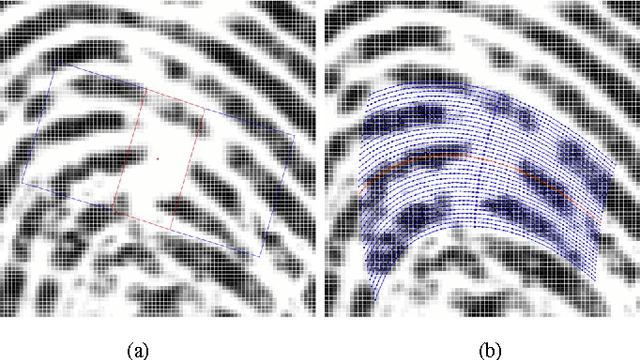

Curved Gabor Filters for Fingerprint Image Enhancement

Jul 25, 2014

Abstract: Gabor filters play an important role in many application areas for the enhancement of various types of images and the extraction of Gabor features. For the purpose of enhancing curved structures in noisy images, we introduce curved Gabor filters which locally adapt their shape to the direction of flow. These curved Gabor filters enable the choice of filter parameters which increase the smoothing power without creating artifacts in the enhanced image. In this paper, curved Gabor filters are applied to the curved ridge and valley structure of low-quality fingerprint images. First, we combine two orientation field estimation methods in order to obtain a more robust estimation for very noisy images. Next, curved regions are constructed by following the respective local orientation and they are used for estimating the local ridge frequency. Lastly, curved Gabor filters are defined based on curved regions and they are applied for the enhancement of low-quality fingerprint images. Experimental results on the FVC2004 databases show improvements of this approach in comparison to state-of-the-art enhancement methods.
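For orientation, the sketch below builds the classical straight Gabor kernel: a cosine wave along one direction, windowed by a Gaussian envelope. It is not the curved Gabor filter introduced in the paper, which bends the filter window along the local ridge flow. The function name `gabor_kernel` and all parameter names and values are assumptions chosen for this example.

```python
import math


def gabor_kernel(size, theta, frequency, sigma_x, sigma_y):
    """Build a real-valued (even-symmetric) straight Gabor kernel.

    size      - odd side length of the square kernel in pixels
    theta     - direction of the cosine wave in radians
    frequency - wave frequency in cycles per pixel
    sigma_x   - Gaussian standard deviation along the wave direction
    sigma_y   - Gaussian standard deviation perpendicular to it
    """
    if size % 2 == 0:
        raise ValueError("size must be odd so the kernel has a center pixel")
    half = size // 2
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so that xr runs along the wave direction.
            xr = x * cos_t + y * sin_t
            yr = -x * sin_t + y * cos_t
            envelope = math.exp(-0.5 * (xr ** 2 / sigma_x ** 2
                                        + yr ** 2 / sigma_y ** 2))
            row.append(envelope * math.cos(2.0 * math.pi * frequency * xr))
        kernel.append(row)
    return kernel


# Hypothetical parameters: 5x5 kernel, horizontal wave, 10-pixel period.
kernel = gabor_kernel(size=5, theta=0.0, frequency=0.1,
                      sigma_x=2.0, sigma_y=2.0)
```

In fingerprint enhancement, `theta` and `frequency` would typically be set from the local ridge orientation and ridge frequency at each pixel, which is where the orientation field and frequency estimation steps of the abstract come in.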
