Carola-Bibiane Schoenlieb

A multi-contrast MRI approach to thalamus segmentation

Jul 27, 2018

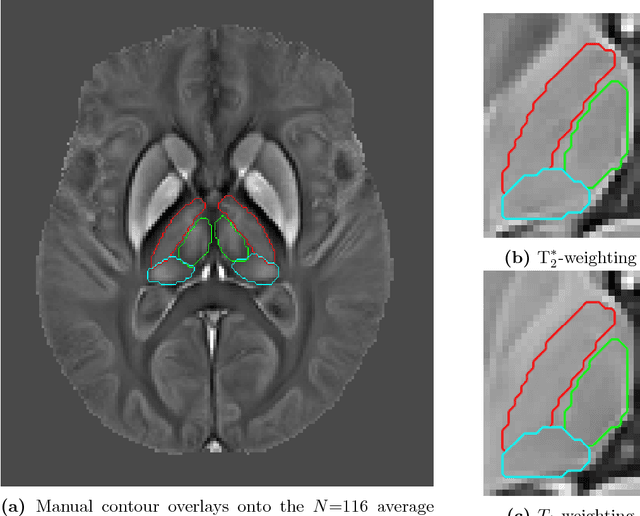

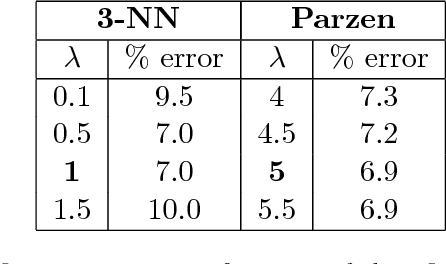

Abstract: Thalamic alterations are relevant to many neurological disorders, including Alzheimer's disease, Parkinson's disease and multiple sclerosis. Routine interventions to improve symptom severity in movement disorders, for example, often consist of surgery or deep brain stimulation to diencephalic nuclei. Therefore, accurate delineation of grey matter thalamic subregions is of the utmost clinical importance. MRI is highly appropriate for structural segmentation as it provides different views of the anatomy from a single scanning session. With several contrasts potentially available, however, it is also increasingly important to develop new image segmentation techniques that can operate multi-spectrally. We propose a new segmentation method for use with multi-modality data, which we evaluated for automated segmentation of major thalamic subnuclear groups using T1-, T2*-weighted and quantitative susceptibility mapping (QSM) information. The proposed method consists of four steps: highly iterative image co-registration, manual segmentation on the average training-data template, supervised learning for pattern recognition, and a final convex optimisation step imposing further spatial constraints to refine the solution. This led to solutions in greater agreement with manual segmentation than the standard Morel atlas-based approach. Furthermore, we show that the multi-contrast approach boosts segmentation performance. We then investigated whether prior knowledge from the training-template contours could further improve convex segmentation accuracy and robustness, which led to highly precise multi-contrast segmentations in single subjects. This approach can be extended to most 3D imaging data types and any region of interest discernible in single scans or multi-subject templates.
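The abstract reports "agreement with manual segmentation" without naming the metric. One common way to quantify such agreement is the Dice overlap between an automated and a manual binary mask. The sketch below assumes that metric purely for illustration; the function name `dice` and the toy masks are hypothetical and are not taken from the paper.

```python
def dice(a, b):
    """Dice overlap between two binary masks given as flat sequences of 0/1.

    Assumption: Dice is used here only as an example agreement measure;
    the abstract does not state which metric the authors used.
    """
    if len(a) != len(b):
        raise ValueError("masks must have the same number of voxels")
    # Voxels labelled as foreground in both masks.
    inter = sum(1 for x, y in zip(a, b) if x and y)
    # Total foreground voxels across the two masks.
    size = sum(1 for x in a if x) + sum(1 for y in b if y)
    # Two empty masks are treated as perfectly agreeing.
    return 1.0 if size == 0 else 2.0 * inter / size


# Toy example: hypothetical manual and automated labels for six voxels.
manual = [0, 1, 1, 1, 0, 0]
auto = [0, 1, 1, 0, 1, 0]
score = dice(manual, auto)
```

A score of 1 means the masks coincide exactly and 0 means they do not overlap at all.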

Guidefill: GPU Accelerated, Artist Guided Geometric Inpainting for 3D Conversion

Jul 31, 2017

Abstract: The conversion of traditional film into stereo 3D has become an important problem in the past decade. One of the main bottlenecks is a disocclusion step, which in commercial 3D conversion is usually done by teams of artists armed with a toolbox of inpainting algorithms. A current difficulty is that most available algorithms are either too slow for interactive use or provide no intuitive means for users to tweak the output. In this paper we present a new fast inpainting algorithm based on transporting along automatically detected splines, which the user may edit. Our algorithm is implemented on the GPU and fills the inpainting domain in successive shells that adapt their shape on the fly. In order to allocate GPU resources as efficiently as possible, we propose a parallel algorithm to track the inpainting interface as it evolves, ensuring that no resources are wasted on pixels that are not currently being worked on. Theoretical analysis of the time and processor complexity of our algorithm without and with tracking (as well as numerous numerical experiments) demonstrates the merits of the latter. Our transport mechanism is similar to the one used in coherence transport, but improves upon it by correcting a "kinking" phenomenon whereby extrapolated isophotes may bend at the boundary of the inpainting domain. Theoretical results explaining this phenomenon and its resolution are presented. Although our method ignores texture, in many cases this is not a problem due to the thin inpainting domains in 3D conversion. Experimental results show that our method can achieve a visual quality that is competitive with the state of the art while maintaining interactive speeds and providing the user with an intuitive interface to tweak the results.
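The idea of filling the inpainting domain "in successive shells" can be illustrated with a small sequential sketch. This is not Guidefill itself: it replaces the paper's spline-guided transport with a plain average of already-known 4-neighbours, runs on the CPU, and rescans the whole grid on every pass (roughly the "without tracking" variant). The function name `fill_in_shells` and the toy image are hypothetical.

```python
def fill_in_shells(image, mask):
    """Fill masked pixels shell by shell, from the boundary inward.

    image: list of rows of floats; mask: same shape, True where unknown.
    Returns (filled copy of the image, number of shells used).
    Assumption: each pixel is set to the mean of its known 4-neighbours,
    a stand-in for the guided transport described in the abstract.
    """
    h, w = len(image), len(image[0])
    img = [row[:] for row in image]
    unknown = [row[:] for row in mask]
    shells = 0
    while any(any(row) for row in unknown):
        # Collect the current shell first, so that every pixel in it is
        # computed only from pixels known before this pass. The pixels of
        # one shell are therefore independent of each other.
        updates = {}
        for y in range(h):
            for x in range(w):
                if not unknown[y][x]:
                    continue
                vals = [img[ny][nx]
                        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                        if 0 <= ny < h and 0 <= nx < w and not unknown[ny][nx]]
                if vals:
                    updates[(y, x)] = sum(vals) / len(vals)
        if not updates:
            raise ValueError("mask has no known neighbours to fill from")
        for (y, x), v in updates.items():
            img[y][x] = v
            unknown[y][x] = False
        shells += 1
    return img, shells


# Toy example: a constant 5x5 image with a 3x3 hole in the middle.
image = [[1.0] * 5 for _ in range(5)]
mask = [[1 <= y <= 3 and 1 <= x <= 3 for x in range(5)] for y in range(5)]
for y in range(1, 4):
    for x in range(1, 4):
        image[y][x] = 0.0  # placeholder value inside the hole
filled, shells = fill_in_shells(image, mask)
```

Because the pixels within one shell do not depend on each other, each pass could in principle be computed in parallel, which is the property a GPU implementation would exploit.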