Carlos Domingo

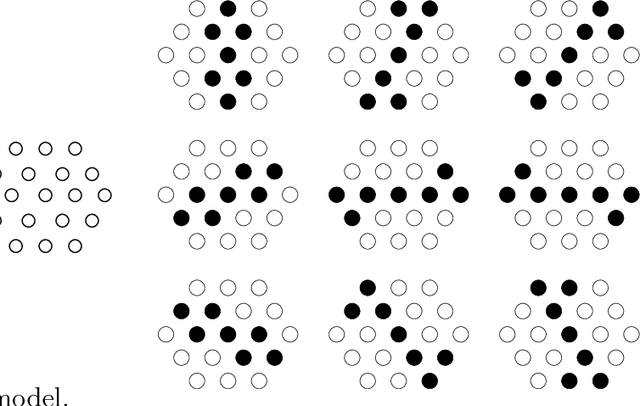

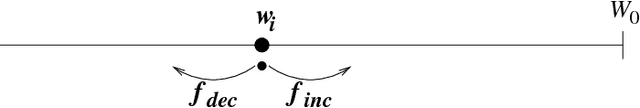

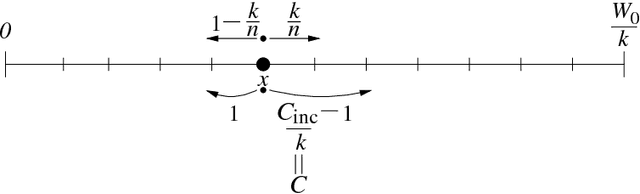

A role of constraint in self-organization

Sep 30, 1998

Abstract: In this paper we introduce a neural network model of self-organization. This model uses a variation of the Hebb rule for updating its synaptic weights, and surely converges to an equilibrium state. The key to this convergence is an update rule that constrains the total synaptic weight, which seems to make the model stable. We investigate the role of the constraint and show that it is the constraint that makes the model stable. To analyze this setting, we propose a simple probabilistic game that models the neural network and the self-organization process. We then investigate the characteristics of this game, namely the probability that the game becomes stable and the number of steps it takes.

Practical algorithms for on-line sampling

Sep 30, 1998

Abstract: One of the core applications of machine learning to knowledge discovery consists of building a function (a hypothesis), for instance a decision tree or a neural network, from a given amount of data so that we can use it afterwards to predict new instances of the data. In this paper, we focus on a particular situation where we assume that the hypothesis we want to use for prediction is very simple, and thus the hypothesis class is of feasible size. We study the problem of how to determine which of the hypotheses in the class is almost the best one. We present two on-line sampling algorithms for selecting hypotheses, give theoretical bounds on the number of necessary examples, and analyze them experimentally. We compare them with the simple batch sampling approach commonly used and show that in most situations our algorithms use far fewer examples.
