Carlos Carvalho

CS-FLEURS: A Massively Multilingual and Code-Switched Speech Dataset

Sep 17, 2025

Abstract: We present CS-FLEURS, a new dataset for developing and evaluating code-switched speech recognition and translation systems beyond high-resourced languages. CS-FLEURS consists of four test sets that together cover 113 unique code-switched language pairs across 52 languages: 1) a 14 X-English language pair set with real voices reading synthetically generated code-switched sentences, 2) a 16 X-English language pair set with generative text-to-speech, 3) a 60 {Arabic, Mandarin, Hindi, Spanish}-X language pair set with generative text-to-speech, and 4) a 45 X-English lower-resourced language pair test set with concatenative text-to-speech. Besides the four test sets, CS-FLEURS also provides a training set with 128 hours of generative text-to-speech data across 16 X-English language pairs. Our hope is that CS-FLEURS helps to broaden the scope of future code-switched speech research. Dataset link: https://huggingface.co/datasets/byan/cs-fleurs.

CAMÕES: A Comprehensive Automatic Speech Recognition Benchmark for European Portuguese

Aug 27, 2025

Abstract: Existing resources for Automatic Speech Recognition in Portuguese are mostly focused on Brazilian Portuguese, leaving European Portuguese (EP) and other varieties under-explored. To bridge this gap, we introduce CAMÕES, the first open framework for EP and other Portuguese varieties. It consists of (1) a comprehensive evaluation benchmark, including 46h of EP test data spanning multiple domains; and (2) a collection of state-of-the-art models. For the latter, we consider multiple foundation models, evaluating their zero-shot and fine-tuned performance, as well as E-Branchformer models trained from scratch. A curated set of 425h of EP was used for both fine-tuning and training. Our results show comparable performance for EP between fine-tuned foundation models and the E-Branchformer. Furthermore, the best-performing models achieve relative WER improvements above 35% compared to the strongest zero-shot foundation model, establishing a new state-of-the-art for EP and other varieties.
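For readers unfamiliar with the metric, a relative WER improvement is usually computed as the WER reduction divided by the baseline WER. The sketch below illustrates that arithmetic only; the function name and the example numbers are hypothetical and are not taken from the paper.

```python
def relative_wer_improvement(baseline_wer: float, new_wer: float) -> float:
    """Return the relative WER reduction of new_wer w.r.t. baseline_wer as a fraction."""
    if baseline_wer <= 0:
        raise ValueError("baseline_wer must be positive")
    return (baseline_wer - new_wer) / baseline_wer


# Hypothetical WERs (in %), chosen only to illustrate the formula.
zero_shot_wer = 20.0
fine_tuned_wer = 13.0
improvement = relative_wer_improvement(zero_shot_wer, fine_tuned_wer)
print(f"relative improvement: {improvement:.0%}")
```

Under this definition, a drop from a WER of 20% to 13% is a 7-point absolute reduction but a 35% relative improvement.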

AC-Mix: Self-Supervised Adaptation for Low-Resource Automatic Speech Recognition using Agnostic Contrastive Mixup

Oct 18, 2024

Abstract: Self-supervised learning (SSL) leverages large amounts of unlabelled data to learn rich speech representations, fostering improvements in automatic speech recognition (ASR), even when only a small amount of labelled data is available for fine-tuning. Despite the advances in SSL, a significant challenge remains when the data used for pre-training (source domain) mismatches the fine-tuning data (target domain). To tackle this domain mismatch challenge, we propose AC-Mix (agnostic contrastive mixup), a new domain adaptation method for low-resource ASR based on contrastive mixup for joint-embedding architectures. In this approach, the SSL model is adapted through additional pre-training using mixed data views created by interpolating samples from the source and the target domains. Our proposed adaptation method consistently outperforms the baseline system, using approximately 11 hours of adaptation data and requiring only 1 hour of adaptation time on a single GPU with WavLM-Large.
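The interpolation step described above can be pictured as a generic mixup between one source-domain sample and one target-domain sample. This is a minimal sketch, not the authors' implementation: the abstract does not say how the mixing coefficient is chosen or at which level the samples are mixed, so drawing it from a Beta distribution (as in standard mixup), mixing raw waveforms, and the names `mix_views` and `alpha` are all assumptions.

```python
import random
from typing import List, Optional, Tuple


def mix_views(
    source: List[float],
    target: List[float],
    alpha: float = 0.4,
    rng: Optional[random.Random] = None,
) -> Tuple[List[float], float]:
    """Linearly interpolate a source-domain and a target-domain waveform.

    Assumption: the mixing weight is sampled from Beta(alpha, alpha),
    as in standard mixup; the paper may use a different scheme.
    """
    rng = rng or random.Random()
    lam = rng.betavariate(alpha, alpha)
    # Truncate to the shorter signal so samples align one-to-one.
    n = min(len(source), len(target))
    mixed = [lam * s + (1.0 - lam) * t for s, t in zip(source[:n], target[:n])]
    return mixed, lam


# Toy signals standing in for audio samples.
mixed, lam = mix_views([1.0, 1.0, 1.0], [0.0, 0.0], rng=random.Random(0))
print(len(mixed), round(lam, 3))
```

In a contrastive set-up, the mixed view would then be fed to the SSL model alongside unmixed views; that training loop is outside the scope of this sketch.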

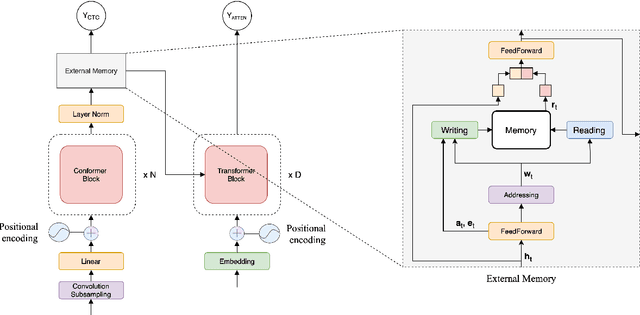

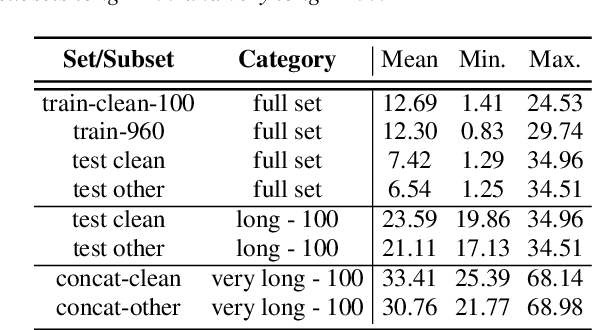

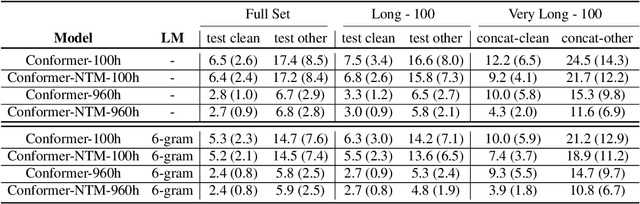

Memory-augmented conformer for improved end-to-end long-form ASR

Sep 22, 2023

Abstract: Conformers have recently been proposed as a promising modelling approach for automatic speech recognition (ASR), outperforming recurrent neural network-based approaches and transformers. Nevertheless, the performance of these end-to-end models, especially attention-based models, degrades markedly on long utterances. To address this limitation, we propose adding a fully-differentiable memory-augmented neural network between the encoder and decoder of a conformer. This external memory can improve generalization to longer utterances, since it allows the system to store and retrieve more information recurrently. Specifically, we explore the neural Turing machine (NTM), which results in our proposed Conformer-NTM model architecture for ASR. Experimental results using Librispeech train-clean-100 and train-960 sets show that the proposed system outperforms the baseline conformer without memory for long utterances.
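To make the "store and retrieve" idea concrete, the sketch below shows the content-based read used in a neural Turing machine: a key is compared with every memory row by cosine similarity, the similarities are sharpened and normalised with a softmax, and the read vector is the weighted sum of the rows. It covers only this read step under simplifying assumptions. A full NTM also has write heads and location-based addressing, and the abstract does not specify how the memory is wired into the conformer. The names `content_read` and `beta` are illustrative.

```python
import math
from typing import List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity with a small epsilon to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-8)


def content_read(
    memory: List[List[float]], key: List[float], beta: float = 1.0
) -> Tuple[List[float], List[float]]:
    """Read from memory by content: softmax over beta-scaled cosine similarities."""
    scores = [beta * cosine(row, key) for row in memory]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    width = len(memory[0])
    read = [sum(w * row[j] for w, row in zip(weights, memory)) for j in range(width)]
    return read, weights


# Two memory slots; the key matches the first one.
read, weights = content_read([[1.0, 0.0], [0.0, 1.0]], key=[1.0, 0.0], beta=50.0)
print(read, weights)
```

A larger `beta` makes the read focus on the best-matching slot, while a small `beta` blends several slots. Every operation here is differentiable, which is what allows such a memory to be trained end to end.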
