Can Bogoclu

Quantifying Local Model Validity using Active Learning

Jun 11, 2024

Abstract: Real-world applications of machine learning models are often subject to legal or policy-based regulations. Some of these regulations require ensuring the validity of the model, i.e., the approximation error being smaller than a threshold. A global metric is generally too insensitive to determine the validity of a specific prediction, whereas evaluating local validity is costly since it requires gathering additional data. We propose learning the model error to acquire a local validity estimate while reducing the amount of required data through active learning. Using model validation benchmarks, we provide empirical evidence that the proposed method can lead to an error model with sufficient discriminative properties using a relatively small amount of data. Furthermore, an increased sensitivity to local changes of the validity bounds compared to alternative approaches is demonstrated.
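The idea of learning the model error and querying new points actively can be illustrated with a toy loop. This is only a generic sketch, not the method from the paper: the 1-nearest-neighbour error model, the distance-based query rule, and the functions `true_function` and `model` are all assumptions made for illustration.

```python
def nearest(x, points):
    """Return the evaluated point closest to x."""
    return min(points, key=lambda p: abs(p - x))

def predicted_error(x, observed):
    """Assumed error model: reuse the error of the closest evaluated point."""
    return observed[nearest(x, observed)]

def is_locally_valid(x, observed, threshold):
    """A prediction counts as valid if the estimated error is below the threshold."""
    return predicted_error(x, observed) < threshold

def next_query(candidates, observed):
    """Assumed query rule: pick the candidate farthest from all evaluated points."""
    return max(candidates, key=lambda c: abs(c - nearest(c, observed)))

def true_function(x):
    return x ** 2  # hypothetical expensive reference (e.g. a measurement)

def model(x):
    return x       # hypothetical model under validation

candidates = [i / 20 for i in range(41)]  # grid on [0, 2]
observed = {0.0: abs(model(0.0) - true_function(0.0))}
for _ in range(5):
    x = next_query(candidates, observed)
    observed[x] = abs(model(x) - true_function(x))  # one costly evaluation per step

print(is_locally_valid(1.05, observed, threshold=0.3))
print(is_locally_valid(1.9, observed, threshold=0.3))
```

The point of the sketch is that validity is decided per input from an error model, and that each new evaluation is chosen by the loop rather than drawn at random.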

Deep Gaussian Covariance Network with Trajectory Sampling for Data-Efficient Policy Search

Mar 23, 2024

Abstract: Probabilistic world models increase the data efficiency of model-based reinforcement learning (MBRL) by guiding the policy with their epistemic uncertainty to improve exploration and acquire new samples. Moreover, the uncertainty-aware learning procedures in probabilistic approaches lead to robust policies that are less sensitive to noisy observations compared to uncertainty-unaware solutions. We propose to combine trajectory sampling and deep Gaussian covariance network (DGCN) for a data-efficient solution to MBRL problems in an optimal control setting. We compare trajectory sampling with density-based approximation for uncertainty propagation using three different probabilistic world models: Gaussian processes, Bayesian neural networks, and DGCNs. We provide empirical evidence, using four different well-known test environments, that our method improves the sample efficiency over other combinations of uncertainty propagation methods and probabilistic models. During our tests, we place particular emphasis on the robustness of the learned policies with respect to noisy initial states.
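Trajectory sampling propagates uncertainty by pushing a set of sampled states (particles) through the probabilistic model, instead of approximating the state density in closed form. The sketch below shows the general technique under simple assumptions: `toy_model` is a hypothetical one-dimensional stand-in for a trained world model (not a DGCN), and the policy and cost are made up for illustration.

```python
import random

def toy_model(state, action):
    """Hypothetical probabilistic model: mean and std of the next state."""
    mean = 0.9 * state + 0.1 * action
    std = 0.05 * (1.0 + abs(state))
    return mean, std

def sample_trajectories(model, policy, initial_state, horizon, n_particles, rng):
    """Roll out n_particles sampled trajectories of length horizon."""
    particles = [initial_state] * n_particles
    history = [list(particles)]
    for _ in range(horizon):
        next_particles = []
        for state in particles:
            mean, std = model(state, policy(state))
            # Sample the next state instead of propagating a density.
            next_particles.append(rng.gauss(mean, std))
        particles = next_particles
        history.append(list(particles))
    return history

def expected_cost(history, cost):
    """Monte Carlo estimate of the summed cost along the trajectories."""
    return sum(sum(cost(s) for s in step) / len(step) for step in history)

rng = random.Random(0)
policy = lambda state: -state  # hypothetical linear feedback policy
history = sample_trajectories(toy_model, policy, 1.0, horizon=10, n_particles=50, rng=rng)
print(expected_cost(history, cost=lambda s: s * s))
```

A policy search would minimize this sampled cost estimate with respect to the policy parameters; that optimization step is omitted here.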

Intelligent Optimization and Machine Learning Algorithms for Structural Anomaly Detection using Seismic Signals

Jan 18, 2024

Abstract: The lack of anomaly detection methods during mechanized tunnelling can cause financial loss and deficits in drilling time. On-site excavation requires hard obstacles to be recognized prior to drilling in order to avoid damaging the tunnel boring machine and to adjust the propagation velocity. The efficiency of the structural anomaly detection can be increased with intelligent optimization techniques and machine learning. In this research, the anomaly in a simple structure is detected by comparing the experimental measurements of the structural vibrations with numerical simulations using parameter estimation methods.

Gradient and Uncertainty Enhanced Sequential Sampling for Global Fit

Sep 29, 2023

Abstract: Surrogate models based on machine learning methods have become an important part of modern engineering to replace costly computer simulations. The data used for creating a surrogate model are essential for the model accuracy and often restricted due to cost and time constraints. Adaptive sampling strategies have been shown to reduce the number of samples needed to create an accurate model. This paper proposes a new sampling strategy for global fit called Gradient and Uncertainty Enhanced Sequential Sampling (GUESS). The acquisition function uses two terms: the predictive posterior uncertainty of the surrogate model for exploration of unseen regions and a weighted approximation of the second and higher-order Taylor expansion values for exploitation. Although various sampling strategies have been proposed so far, the selection of a suitable method is not trivial. Therefore, we compared our proposed strategy to 9 adaptive sampling strategies for global surrogate modeling, based on 26 different 1- to 8-dimensional deterministic benchmark functions. Results show that GUESS achieved on average the highest sample efficiency compared to other surrogate-based strategies on the tested examples. An ablation study considering the behavior of GUESS in higher dimensions and the importance of surrogate choice is also presented.
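The two ingredients named in the abstract can be illustrated in one dimension. The second and higher-order Taylor terms at a candidate point equal the gap between the surrogate prediction and its first-order expansion around the nearest sample. How GUESS weights and combines this with the uncertainty is not stated in the abstract, so the additive form and the `weight` parameter below are assumptions, and `predict`, `gradient`, and `std` are toy stand-ins for a trained surrogate.

```python
import math

def taylor_remainder(predict, gradient, x, x_ref):
    """Magnitude of the second and higher-order Taylor terms around x_ref."""
    linear = predict(x_ref) + gradient(x_ref) * (x - x_ref)
    return abs(predict(x) - linear)

def acquisition(predict, gradient, std, x, samples, weight=1.0):
    """Assumed combination: exploration term plus weighted exploitation term."""
    x_ref = min(samples, key=lambda s: abs(s - x))  # nearest existing sample
    return std(x) + weight * taylor_remainder(predict, gradient, x, x_ref)

samples = [0.0, 1.0, 2.0, 3.0]
predict = math.sin   # toy surrogate mean
gradient = math.cos  # its derivative

def std(x):
    """Toy uncertainty: grows with the distance to the nearest sample."""
    return min(abs(x - s) for s in samples)

candidates = [i / 100 for i in range(301)]
best = max(candidates, key=lambda x: acquisition(predict, gradient, std, x, samples))
print(best)
```

In a sequential sampling loop, `best` would be evaluated with the expensive simulation, added to `samples`, and the surrogate refitted before the next iteration.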

Local Latin Hypercube Refinement for Multi-objective Design Uncertainty Optimization

Aug 19, 2021

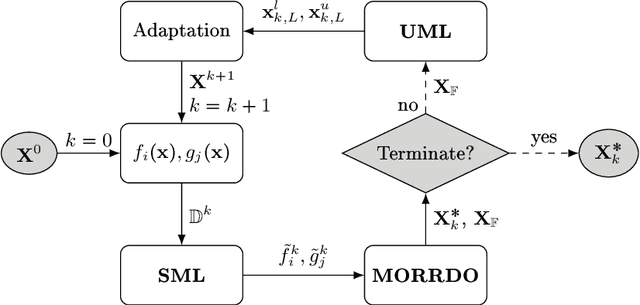

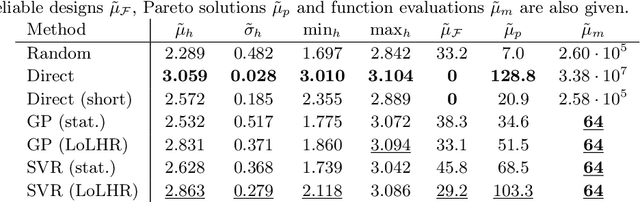

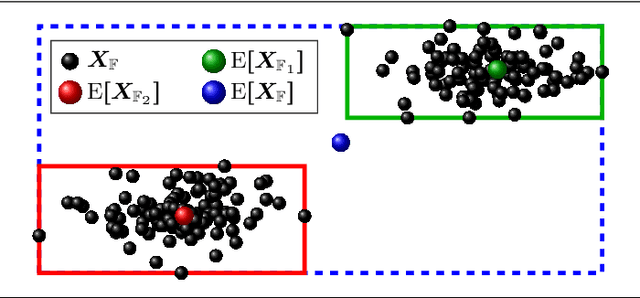

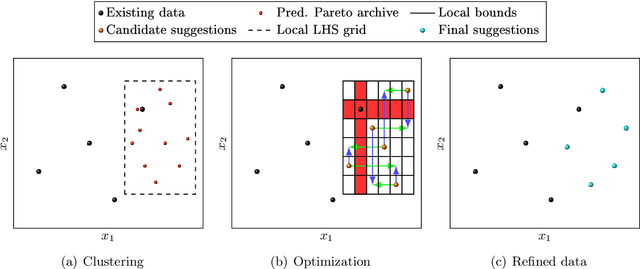

Abstract: Optimizing the reliability and the robustness of a design is important but often unaffordable due to high sample requirements. Surrogate models based on statistical and machine learning methods are used to increase the sample efficiency. However, for higher-dimensional or multi-modal systems, surrogate models may also require a large number of samples to achieve good results. We propose a sequential sampling strategy for the surrogate-based solution of multi-objective reliability-based robust design optimization problems. The proposed local Latin hypercube refinement (LoLHR) strategy is model-agnostic and can be combined with any surrogate model because there is no free lunch but possibly a budget one. The proposed method is compared to stationary sampling as well as other proposed strategies from the literature. Gaussian process and support vector regression are both used as surrogate models. Empirical evidence is presented, showing that LoLHR achieves on average better results compared to other surrogate-based strategies on the tested examples.
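For readers unfamiliar with the building block, the sketch below draws a Latin hypercube sample and then a second, smaller one inside a local box. It is not the LoLHR algorithm: the abstract does not say how the local region is selected, so the `center` and `half_width` arguments are hypothetical placeholders for whatever the strategy would choose.

```python
import random

def latin_hypercube(n_samples, bounds, rng):
    """Each dimension is split into n_samples equal strata, each used once."""
    columns = []
    for low, high in bounds:
        width = (high - low) / n_samples
        # One uniformly placed value inside every stratum of this dimension.
        column = [low + (k + rng.random()) * width for k in range(n_samples)]
        rng.shuffle(column)  # pair strata randomly across dimensions
        columns.append(column)
    return [tuple(column[i] for column in columns) for i in range(n_samples)]

def local_bounds(center, half_width, bounds):
    """Axis-aligned box around center, clipped to the global bounds."""
    return [(max(low, c - half_width), min(high, c + half_width))
            for c, (low, high) in zip(center, bounds)]

rng = random.Random(0)
global_bounds = [(0.0, 1.0), (0.0, 1.0)]
initial = latin_hypercube(8, global_bounds, rng)

# Hypothetical region of interest, e.g. near a promising design.
box = local_bounds(center=(0.9, 0.2), half_width=0.15, bounds=global_bounds)
refined = latin_hypercube(4, box, rng)
print(len(initial), len(refined))
```

Because the sampler only needs bounds, it is independent of the surrogate model, which matches the model-agnostic property claimed in the abstract.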
