Byungjin Cho

Learning-based decentralized offloading decision making in an adversarial environment

Apr 26, 2021

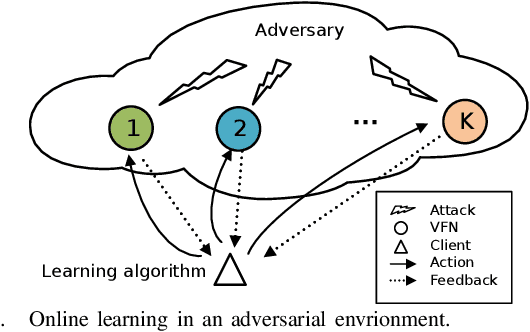

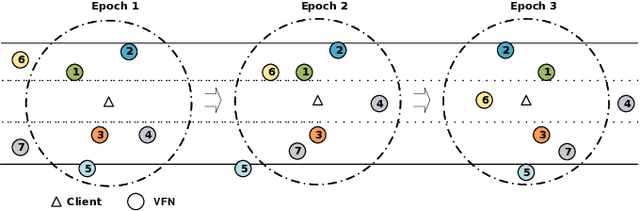

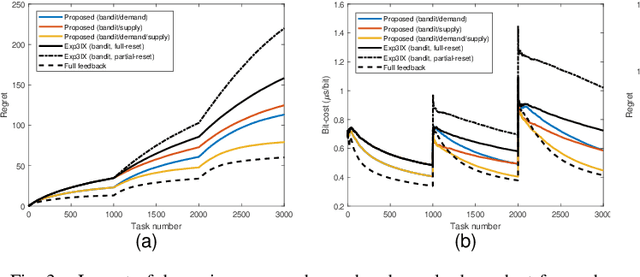

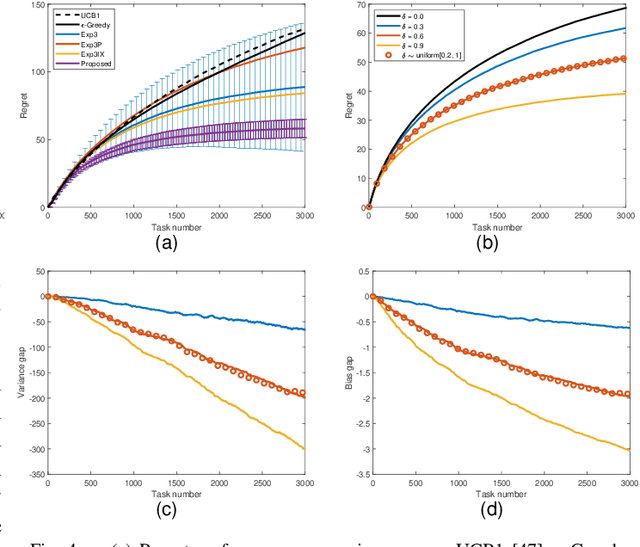

Abstract: Vehicular fog computing (VFC) pushes cloud computing capability to distributed fog nodes at the edge of the Internet, enabling compute-intensive and latency-sensitive computing services for vehicles through task offloading. However, a heterogeneous mobility environment introduces uncertainties in resource supply and demand, which inevitably hinder optimal offloading decisions. These uncertainties also bring extra challenges to task offloading under oblivious adversary attacks and data privacy risks. In this article, we develop a new adversarial online algorithm with bandit feedback, based on adversarial multi-armed bandit theory, to enable scalable and low-complexity decision making on fog node selection, with the aim of minimizing the offloading service cost in terms of delay and energy. The key is to implicitly tune the exploration bonus in the selection and assessment rules of the designed algorithm, taking into account volatile resource supply and demand. We theoretically prove that the input-size-dependent selection rule allows a suitable fog node to be chosen without exploring sub-optimal actions, and that an appropriate score patching rule allows the algorithm to adapt quickly to evolving circumstances. Together these reduce variance and bias simultaneously, thereby achieving a better exploitation-exploration balance. Simulation results verify the effectiveness and robustness of the proposed algorithm.
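As a rough illustration of fog node selection under bandit feedback, the sketch below uses the textbook EXP3 algorithm for adversarial multi-armed bandits. It is not the algorithm proposed in the article: the input-size-dependent selection rule and the score patching rule are not reproduced here. The function names, the `gamma` exploration rate, and the assumption that each round's offloading cost is normalized to [0, 1] are all hypothetical choices made for this example.

```python
import math
import random


def exp3_select(weights, gamma, rng):
    """Mix the weight distribution with uniform exploration, then sample a node."""
    k = len(weights)
    total = sum(weights)
    probs = [(1.0 - gamma) * w / total + gamma / k for w in weights]
    node = rng.choices(range(k), weights=probs)[0]
    return node, probs


def exp3_update(weights, probs, node, reward, gamma):
    """Update only the chosen node, using an importance-weighted reward estimate."""
    k = len(weights)
    estimate = reward / probs[node]  # unbiased estimate under bandit feedback
    weights[node] *= math.exp(gamma * estimate / k)
    # Rescale so the largest weight is 1; this leaves probabilities unchanged
    # and avoids floating-point overflow over long horizons.
    top = max(weights)
    for i in range(k):
        weights[i] /= top


def run_exp3(costs, gamma=0.1, seed=0):
    """costs[t][i] is the assumed normalized cost of fog node i in round t.

    Only the cost of the selected node is observed in each round.
    Returns how many times each node was selected.
    """
    rng = random.Random(seed)
    k = len(costs[0])
    weights = [1.0] * k
    counts = [0] * k
    for round_costs in costs:
        node, probs = exp3_select(weights, gamma, rng)
        reward = 1.0 - round_costs[node]  # lower cost means higher reward
        exp3_update(weights, probs, node, reward, gamma)
        counts[node] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical scenario: node 2 is consistently cheaper than nodes 0 and 1.
    costs = [[0.9, 0.9, 0.1] for _ in range(2000)]
    print(run_exp3(costs))
```

In this toy scenario the selection counts concentrate on the cheapest node while the uniform `gamma` term keeps every node occasionally sampled, which is the explicit exploration that the article's implicitly tuned exploration bonus is meant to improve on.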