Bryon A. Mueller

DenseNet and Support Vector Machine classifications of major depressive disorder using vertex-wise cortical features

Nov 18, 2023

Abstract: Major depressive disorder (MDD) is a complex psychiatric disorder that affects the lives of hundreds of millions of individuals around the globe. Even today, researchers debate whether morphological alterations in the brain are linked to MDD, likely due to the heterogeneity of this disorder. The application of deep learning tools to neuroimaging data, capable of capturing complex non-linear patterns, has the potential to provide diagnostic and predictive biomarkers for MDD. However, previous attempts to demarcate MDD patients and healthy controls (HC) based on segmented cortical features via linear machine learning approaches have reported low accuracies. In this study, we used globally representative data from the ENIGMA-MDD working group containing an extensive sample of people with MDD (N=2,772) and HC (N=4,240), which allows a comprehensive analysis with generalizable results. Based on the hypothesis that integration of vertex-wise cortical features can improve classification performance, we evaluated the classification performance of a DenseNet and a Support Vector Machine (SVM), with the expectation that the former would outperform the latter. As we analyzed a multi-site sample, we additionally applied the ComBat harmonization tool to remove potential nuisance effects of site. We found that both classifiers exhibited close to chance performance (balanced accuracy DenseNet: 51%; SVM: 53%) when estimated on unseen sites. Slightly higher classification performance (balanced accuracy DenseNet: 58%; SVM: 55%) was found when the cross-validation folds contained subjects from all sites, indicating a site effect. In conclusion, the integration of vertex-wise morphometric features and the use of a non-linear classifier did not make MDD and HC distinguishable. Our results support the notion that MDD classification with this combination of features and classifiers is not feasible.
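The two evaluation ideas in this abstract, balanced accuracy and testing on unseen sites, can be illustrated with a minimal sketch. This is not the authors' pipeline: the function names `balanced_accuracy` and `leave_one_site_out`, the toy labels, and the site codes are all hypothetical. The sketch shows why balanced accuracy suits an imbalanced sample such as the one reported here (more HC than MDD), and how folds can be built so that the test site never appears in training.

```python
from collections import defaultdict


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall; 0.5 corresponds to chance for two classes."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        if t == p:
            correct[t] += 1
    return sum(correct[c] / total[c] for c in total) / len(total)


def leave_one_site_out(sites):
    """Yield (held_out_site, train_indices, test_indices), one fold per site."""
    for held_out in sorted(set(sites)):
        train = [i for i, s in enumerate(sites) if s != held_out]
        test = [i for i, s in enumerate(sites) if s == held_out]
        yield held_out, train, test


# Toy data (hypothetical): 1 = MDD, 0 = HC, with more controls than patients.
y_true = [1, 1, 0, 0, 0, 0]
always_hc = [0] * len(y_true)        # a classifier that always predicts HC
sites = ["A", "A", "B", "C", "C", "B"]

# Plain accuracy of always_hc is 4/6, but its balanced accuracy is chance level.
print(balanced_accuracy(y_true, always_hc))

for site, train_idx, test_idx in leave_one_site_out(sites):
    print(site, train_idx, test_idx)
```

A fold scheme that mixes subjects from all sites into every fold would instead let site-specific signal leak into training, which is consistent with the higher mixed-site scores the abstract attributes to a site effect.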

Meta-modal Information Flow: A Method for Capturing Multimodal Modular Disconnectivity in Schizophrenia

Jan 06, 2020

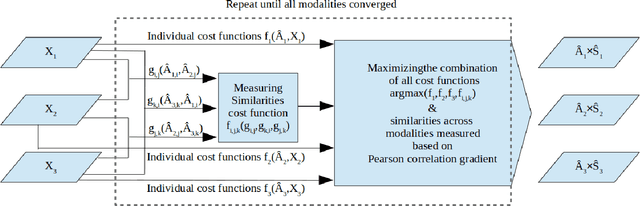

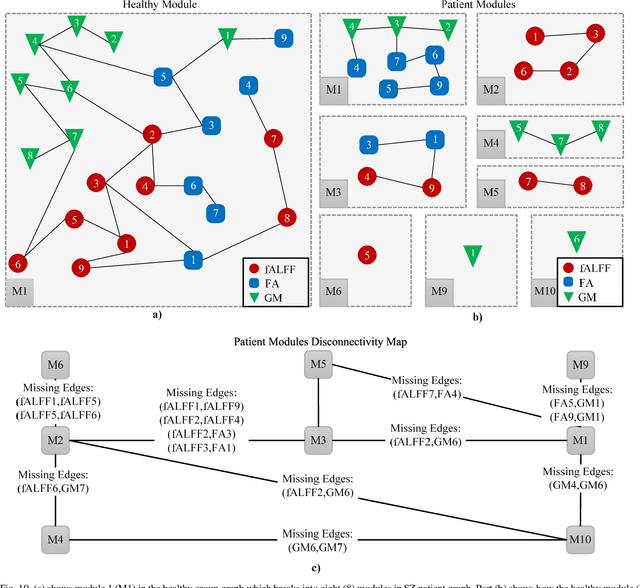

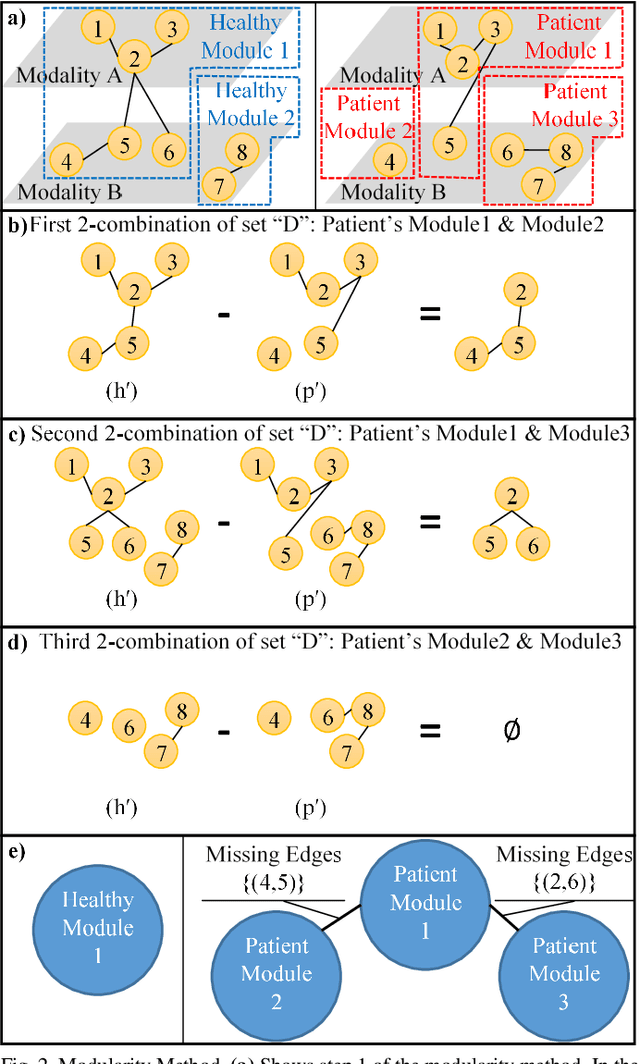

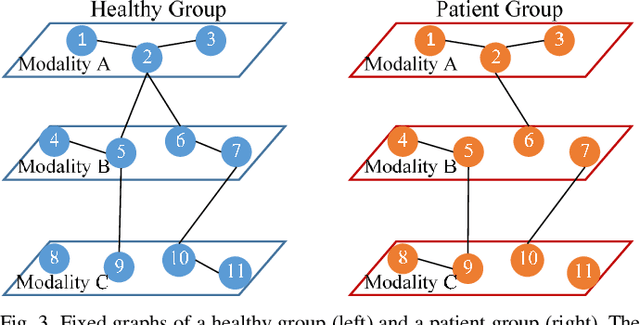

Abstract: Objective: Multimodal measurements of the same phenomena provide complementary information and highlight different perspectives, albeit each with its own limitations. A focus on a single modality may lead to incorrect inferences, which is especially consequential when the phenomenon under study is a disease. In this paper, we introduce a method that takes advantage of multimodal data in addressing the hypotheses of disconnectivity and dysfunction within schizophrenia (SZ). Methods: We start by estimating and visualizing links within and among extracted multimodal data features using a Gaussian graphical model (GGM). We then propose a modularity-based method that can be applied to the GGM to identify links that are associated with mental illness across a multimodal data set. Through simulation and real data, we show that our approach reveals important information about disease-related network disruptions that is missed with a focus on a single modality. We use functional MRI (fMRI), diffusion MRI (dMRI), and structural MRI (sMRI) to compute the fractional amplitude of low frequency fluctuations (fALFF), fractional anisotropy (FA), and gray matter (GM) concentration maps. These three modalities are analyzed using our modularity method. Results: Our results show missing links that are captured only by cross-modal information and that may play an important role in disconnectivity between the components. Conclusion: We identified multimodal (fALFF, FA and GM) disconnectivity in the default mode network area in patients with SZ, which would not have been detectable in a single modality. Significance: The proposed approach provides an important new tool for capturing information that is distributed among multiple imaging modalities.
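For readers unfamiliar with the two building blocks named in this abstract, the standard textbook definitions are sketched below. The abstract does not give the authors' exact formulation, so the symbols ($\Theta$, $\rho_{ij}$, $A_{ij}$, $k_i$, $m$, $c_i$) and the choice of Newman's modularity are assumptions made for illustration only; the paper's modularity-based method may differ.

```latex
% Gaussian graphical model: features x ~ N(mu, Sigma), precision matrix Theta = Sigma^{-1}.
% A link between features i and j is absent exactly when they are
% conditionally independent given all other features:
\Theta_{ij} = 0 \iff x_i \perp x_j \mid x_{\setminus \{i,j\}}

% Partial correlation (link strength) read off the precision matrix:
\rho_{ij} = -\frac{\Theta_{ij}}{\sqrt{\Theta_{ii}\,\Theta_{jj}}}

% Newman's modularity of a partition c of a graph with adjacency A,
% degrees k_i = \sum_j A_{ij}, and total edge weight m = \tfrac{1}{2}\sum_{ij} A_{ij}:
Q = \frac{1}{2m} \sum_{i,j} \left( A_{ij} - \frac{k_i k_j}{2m} \right) \delta(c_i, c_j)
```

Under these definitions, a cross-modal link is a nonzero $\Theta_{ij}$ where features $i$ and $j$ come from different modalities (for example fALFF and FA), which is the kind of link a single-modality analysis cannot observe.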
