Bryn Marie Reinstadler

STRATA: Building Robustness with a Simple Method for Generating Black-box Adversarial Attacks for Models of Code

Sep 28, 2020

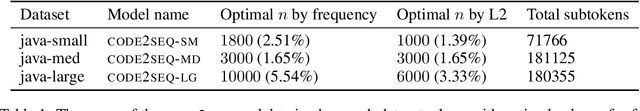

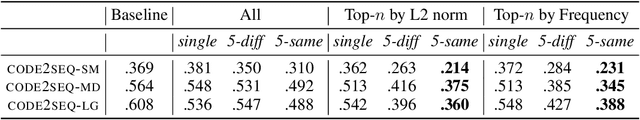

Abstract:Adversarial examples are imperceptible perturbations in the input to a neural model that result in misclassification. Generating adversarial examples for source code poses an additional challenge compared to the domains of images and natural language, because source code perturbations must adhere to strict semantic guidelines so the resulting programs retain the functional meaning of the code. We propose a simple and efficient black-box method for generating state-of-the-art adversarial examples on models of code. Our method generates untargeted and targeted attacks, and empirically outperforms competing gradient-based methods with less information and less computational effort. We also use adversarial training to construct a model robust to these attacks; our attack reduces the F1 score of code2seq by 42%. Adversarial training brings the F1 score on adversarial examples up to 99% of baseline.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge