Bruce C. Wainman

Automated Grading of Anatomical Objective Structured Practical Exams Using Decision Trees

Jun 01, 2021

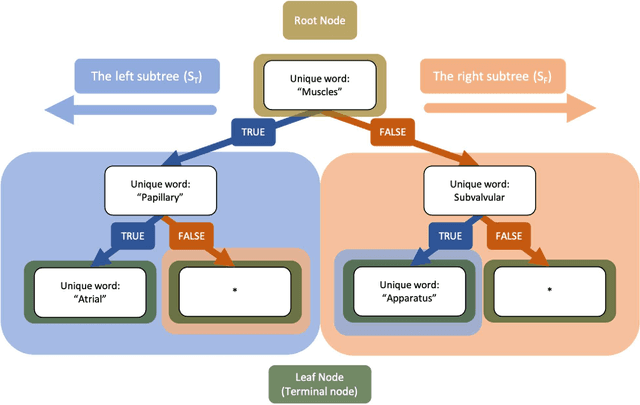

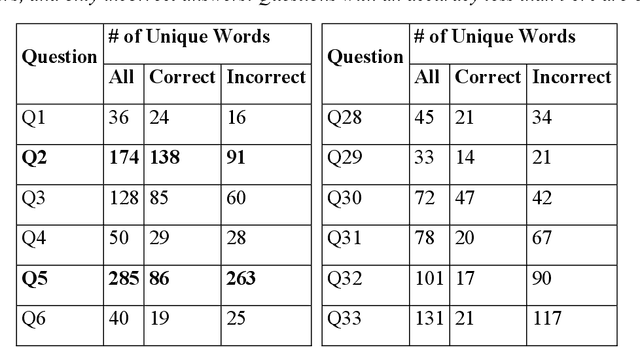

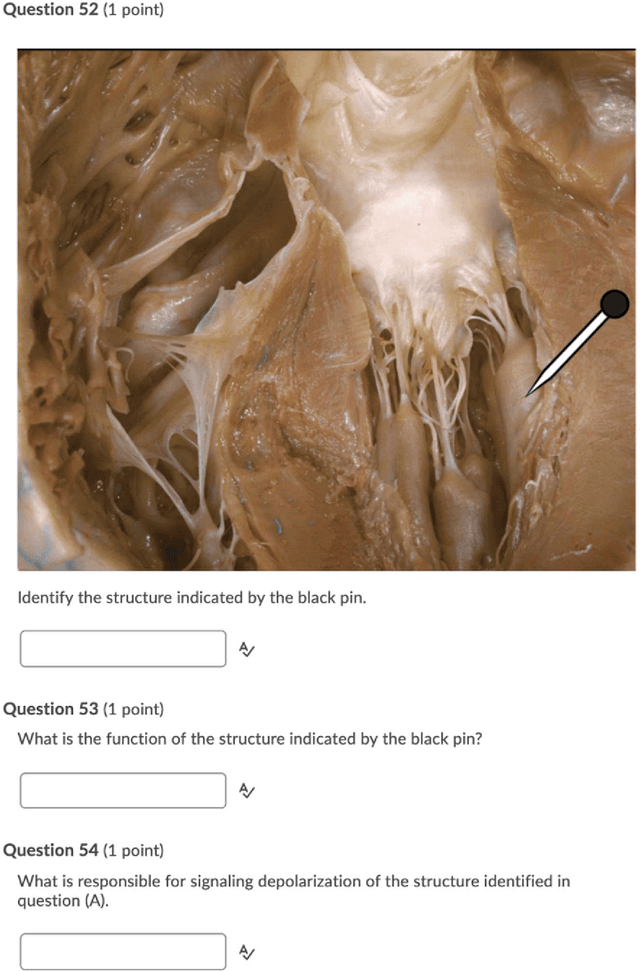

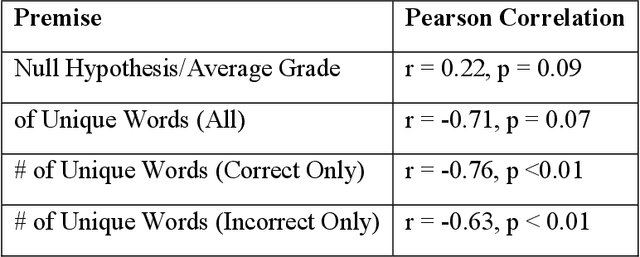

Abstract: An Objective Structured Practical Examination (OSPE) is an effective and robust, but resource-intensive, means of evaluating anatomical knowledge. Since most OSPEs employ short-answer or fill-in-the-blank questions, the format requires many people familiar with the content to mark the exams. However, the increasing prevalence of online delivery for anatomy and physiology courses could result in students losing the OSPE practice that they would receive in face-to-face learning sessions. The purpose of this study was to test the accuracy of Decision Trees (DTs) in marking OSPE questions as a potential first step toward creating an intelligent, online OSPE tutoring system. The study used the results of the winter 2020 semester final OSPE from McMaster University's anatomy and physiology course in the Faculty of Health Sciences (HTHSCI 2FF3/2LL3/1D06) as the data set. Ninety percent of the data set was used in a 10-fold validation algorithm to train a DT for each of the 54 questions. Each DT was composed of unique words that appeared in correct, student-written answers. The remaining 10% of the data set was marked by the generated DTs. When the answers marked by the DTs were compared to the answers marked by staff and faculty, the DTs achieved an average accuracy of 94.49% across all 54 questions. This suggests that machine learning algorithms such as DTs are a highly effective option for OSPE grading and are suitable for the development of an intelligent, online OSPE tutoring system.
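The marking idea described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation: the function names (`tokenize`, `build_tree`, `mark`, `accuracy`), the Gini-based split rule, and the toy answers for a single hypothetical question are all assumptions made for illustration, and the example data are invented rather than taken from the study. The sketch builds one tree for one question from the unique words found in correct answers, then compares its marks with staff marks on held-out answers.

```python
import re
from collections import Counter


def tokenize(answer):
    """Lowercase an answer and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", answer.lower()))


def gini(labels):
    """Gini impurity of a list of True/False marks."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build_tree(samples, vocabulary, max_depth=5):
    """Build a word-presence decision tree.

    samples: list of (word_set, staff_mark) pairs.
    vocabulary: candidate split words (here, words seen in correct answers).
    Returns a nested dict, or a bare True/False at a leaf.
    """
    labels = [label for _, label in samples]
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or max_depth == 0 or not vocabulary:
        return majority
    best_word, best_score = None, gini(labels)
    for word in sorted(vocabulary):  # sorted for deterministic tie-breaking
        present = [label for words, label in samples if word in words]
        absent = [label for words, label in samples if word not in words]
        if not present or not absent:
            continue
        score = (len(present) * gini(present)
                 + len(absent) * gini(absent)) / len(labels)
        if score < best_score:
            best_word, best_score = word, score
    if best_word is None:  # no word reduces impurity
        return majority
    remaining = vocabulary - {best_word}
    with_word = [s for s in samples if best_word in s[0]]
    without_word = [s for s in samples if best_word not in s[0]]
    return {
        "word": best_word,
        "present": build_tree(with_word, remaining, max_depth - 1),
        "absent": build_tree(without_word, remaining, max_depth - 1),
    }


def mark(tree, answer):
    """Walk the tree for one student answer; return True if marked correct."""
    words = tokenize(answer)
    while isinstance(tree, dict):
        tree = tree["present"] if tree["word"] in words else tree["absent"]
    return tree


def accuracy(tree, marked_answers):
    """Fraction of answers where the tree's mark matches the staff mark."""
    hits = sum(mark(tree, text) == staff for text, staff in marked_answers)
    return hits / len(marked_answers)


# Invented toy data for one hypothetical question whose answer is "femur".
train = [
    ("femur", True), ("the femur", True), ("left femur", True),
    ("femur bone", True), ("tibia", False), ("the tibia", False),
    ("humerus", False), ("fibula bone", False),
]
held_out = [("The femur", True), ("radius", False)]

samples = [(tokenize(text), staff) for text, staff in train]
vocabulary = set().union(*(words for words, staff in samples if staff))
tree = build_tree(samples, vocabulary)
```

Calling `accuracy(tree, held_out)` then gives the agreement rate between the tree and the staff marks for this one question. The study reports this kind of agreement averaged over 54 per-question trees, which this sketch does not attempt to reproduce.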
