Brian Gardner

Supervised Learning with First-to-Spike Decoding in Multilayer Spiking Neural Networks

Aug 16, 2020

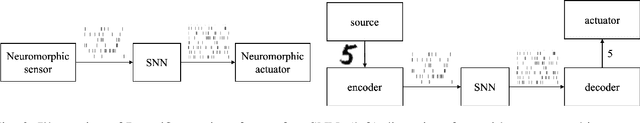

Abstract: Experimental studies support the notion of spike-based neuronal information processing in the brain, with neural circuits exhibiting a wide range of temporally-based coding strategies to rapidly and efficiently represent sensory stimuli. Accordingly, it would be desirable to apply spike-based computation to tackling real-world challenges, and in particular to transfer such theory to neuromorphic systems for low-power embedded applications. Motivated by this, we propose a new supervised learning method that can train multilayer spiking neural networks to solve classification problems based on a rapid, first-to-spike decoding strategy. The proposed learning rule supports multiple spikes fired by stochastic hidden neurons, and yet is stable by relying on first-spike responses generated by a deterministic output layer. In addition, we explore several distinct, spike-based encoding strategies in order to form compact representations of presented input data. We demonstrate the classification performance of the learning rule as applied to several benchmark datasets, including MNIST. The learning rule is capable of generalising from the data, and is successful even when used with constrained network architectures containing few input and hidden layer neurons. Furthermore, we highlight a novel encoding strategy, termed 'scanline encoding', that can transform image data into compact spatiotemporal patterns for subsequent network processing. Designing constrained, but optimised, network structures and performing input dimensionality reduction have strong implications for neuromorphic applications.
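As a rough illustration of the first-to-spike decoding idea mentioned in this abstract, the sketch below picks the class whose output neuron fires earliest. It is not the paper's implementation: the function name `first_to_spike_decode`, the list-of-lists data layout, and the tie-breaking choice (lowest index wins) are all assumptions made for the example.

```python
import math


def first_to_spike_decode(spike_times):
    """Return the index of the output neuron that fires first.

    spike_times: one list of spike times (e.g. in ms) per output neuron.
    This layout is a hypothetical choice for the sketch.
    Returns None if no output neuron fires at all.
    """
    best_class, best_time = None, math.inf
    for idx, times in enumerate(spike_times):
        # Only the earliest spike of each neuron matters for the decision.
        if times and min(times) < best_time:
            best_class, best_time = idx, min(times)
    return best_class


# Three output neurons: neuron 1 fires earliest, neuron 2 stays silent.
predicted = first_to_spike_decode([[12.0, 30.0], [8.5], []])
```

Because the decision depends only on the earliest output spike, a prediction is available as soon as any output neuron fires, which is what makes this style of decoding rapid.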

Supervised Learning in Temporally-Coded Spiking Neural Networks with Approximate Backpropagation

Jul 27, 2020

Abstract: In this work we propose a new supervised learning method for temporally-encoded multilayer spiking networks to perform classification. The method employs a reinforcement signal that mimics backpropagation but is far less computationally intensive. The weight update calculation at each layer requires only local data apart from this signal. We also employ a rule capable of producing specific output spike trains; by setting the target spike time equal to the actual spike time with a slight negative offset for key high-value neurons, the actual spike time becomes as early as possible. In simulated MNIST handwritten digit classification, two-layer networks trained with this rule matched the performance of a comparable backpropagation-based non-spiking network.

An Introduction to Probabilistic Spiking Neural Networks

Oct 02, 2019

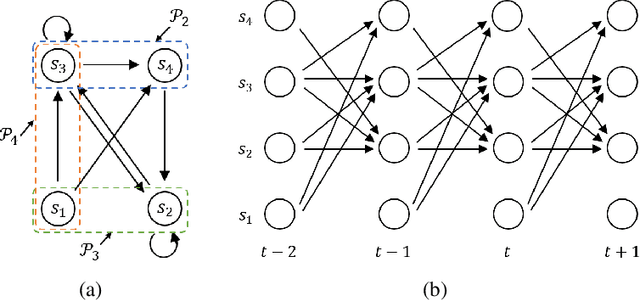

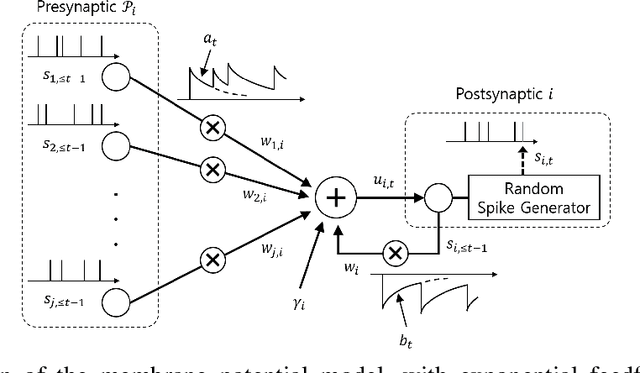

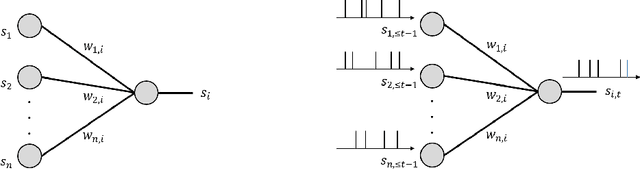

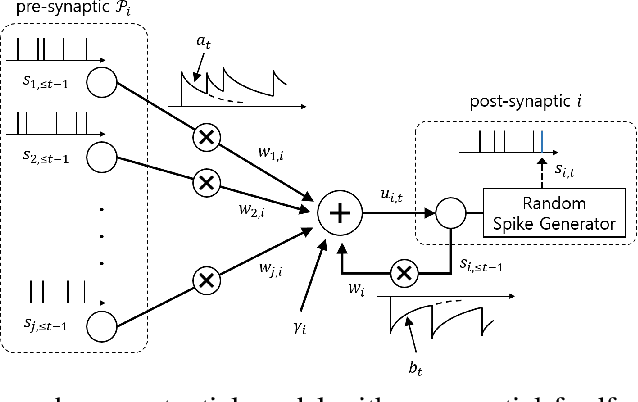

Abstract: Spiking neural networks (SNNs) are distributed trainable systems whose computing elements, or neurons, are characterized by internal analog dynamics and by digital and sparse synaptic communications. The sparsity of the synaptic spiking inputs and the corresponding event-driven nature of neural processing can be leveraged by energy-efficient hardware implementations, which can offer significant energy reductions as compared to conventional artificial neural networks (ANNs). The design of training algorithms lags behind the hardware implementations. Most existing training algorithms for SNNs have been designed either for biological plausibility or through conversion from pretrained ANNs via rate encoding. This article provides an introduction to SNNs by focusing on a probabilistic signal processing methodology that enables the direct derivation of learning rules by leveraging the unique time-encoding capabilities of SNNs. We adopt discrete-time probabilistic models for networked spiking neurons and derive supervised and unsupervised learning rules from first principles via variational inference. Examples and open research problems are also provided.

Spiking Neural Networks: A Stochastic Signal Processing Perspective

Dec 11, 2018

Abstract: Spiking Neural Networks (SNNs) are distributed systems whose computing elements, or neurons, are characterized by analog internal dynamics and by digital and sparse inter-neuron, or synaptic, communications. The sparsity of the synaptic spiking inputs and the corresponding event-driven nature of neural processing can be leveraged by hardware implementations to obtain significant energy reductions as compared to conventional Artificial Neural Networks (ANNs). SNNs can be used not only as coprocessors to carry out given computing tasks, such as classification, but also as learning machines that adapt their internal parameters, e.g., their synaptic weights, on the basis of data and of a learning criterion. This paper provides an overview of models, learning rules, and applications of SNNs from the viewpoint of stochastic signal processing.

Supervised Learning in Spiking Neural Networks for Precise Temporal Encoding

Oct 28, 2016

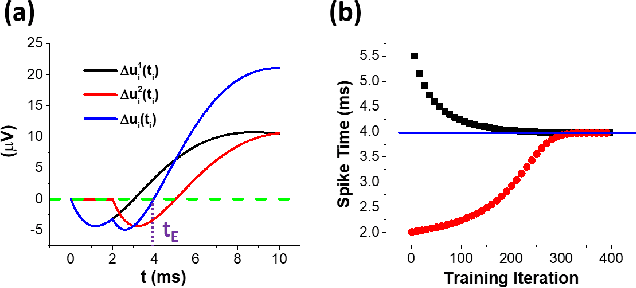

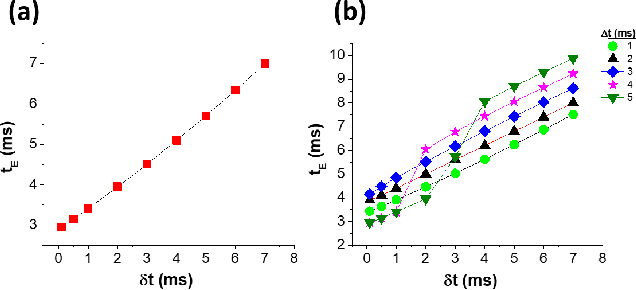

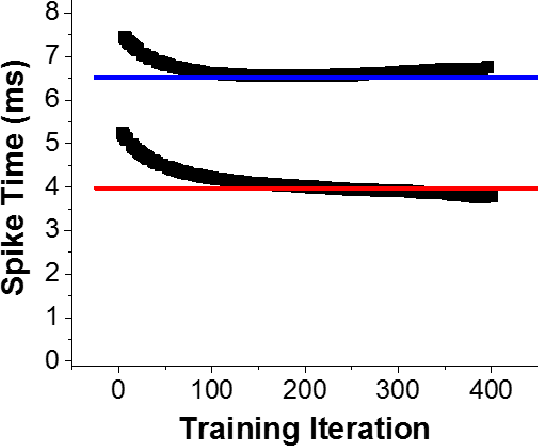

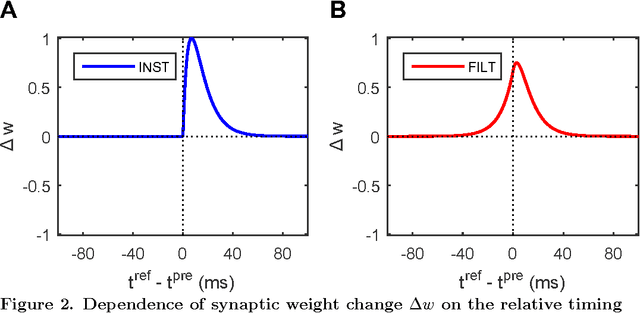

Abstract: Precise spike timing as a means to encode information in neural networks is biologically supported, and is advantageous over frequency-based codes by processing input features on a much shorter time-scale. For these reasons, much recent attention has been focused on the development of supervised learning rules for spiking neural networks that utilise a temporal coding scheme. However, despite significant progress in this area, there is still a lack of rules that have a theoretical basis and yet can be considered biologically relevant. Here we examine the general conditions under which synaptic plasticity most effectively takes place to support the supervised learning of a precise temporal code. As part of our analysis we examine two spike-based learning methods: one relies on an instantaneous error signal to modify synaptic weights in a network (INST rule), and the other on a filtered error signal for smoother synaptic weight modifications (FILT rule). We test the accuracy of the solutions provided by each rule with respect to their temporal encoding precision, and then measure the maximum number of input patterns they can learn to memorise using the precise timings of individual spikes as an indication of their storage capacity. Our results demonstrate the high performance of FILT in most cases, underpinned by the rule's error-filtering mechanism, which is predicted to provide smooth convergence towards a desired solution during learning. We also find FILT to be most efficient at performing input pattern memorisations, most noticeably when patterns are identified using spikes with sub-millisecond temporal precision. In comparison with existing work, we determine the performance of FILT to be consistent with that of the highly efficient E-learning Chronotron, but with the distinct advantage that FILT is also implementable as an online method for increased biological realism.
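To make the contrast between an instantaneous and a filtered error signal concrete, here is a small discrete-time sketch. The abstract does not specify the filter, so the exponential kernel, the time constant `tau`, the time step `dt`, and all function names below are assumptions for illustration, not the INST or FILT rules as published.

```python
import math


def spike_train(times, n_steps, dt):
    """Bin spike times into a discrete train (hypothetical helper)."""
    train = [0.0] * n_steps
    for t in times:
        k = int(round(t / dt))
        if 0 <= k < n_steps:
            train[k] += 1.0
    return train


def instantaneous_error(target, actual):
    """Error is non-zero only in bins where a target or actual spike occurs."""
    return [d - a for d, a in zip(target, actual)]


def filtered_error(target, actual, dt, tau):
    """Exponentially smoothed error (assumed kernel, time constant tau)."""
    decay = math.exp(-dt / tau)
    out, trace = [], 0.0
    for d, a in zip(target, actual):
        # The trace decays each step and is kicked by the raw mismatch.
        trace = trace * decay + (d - a)
        out.append(trace)
    return out


dt = 1.0
tau = dt / math.log(2.0)  # chosen so the trace halves every step
target = spike_train([2.0], n_steps=6, dt=dt)  # desired spike at t = 2
actual = spike_train([4.0], n_steps=6, dt=dt)  # actual spike at t = 4
inst = instantaneous_error(target, actual)
filt = filtered_error(target, actual, dt, tau)
```

In this toy setting the instantaneous error is a pair of isolated pulses, whereas the filtered error varies gradually between them, which gives an intuition for why weight updates driven by a filtered signal would be smoother.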

Encoding Spike Patterns in Multilayer Spiking Neural Networks

Mar 31, 2015

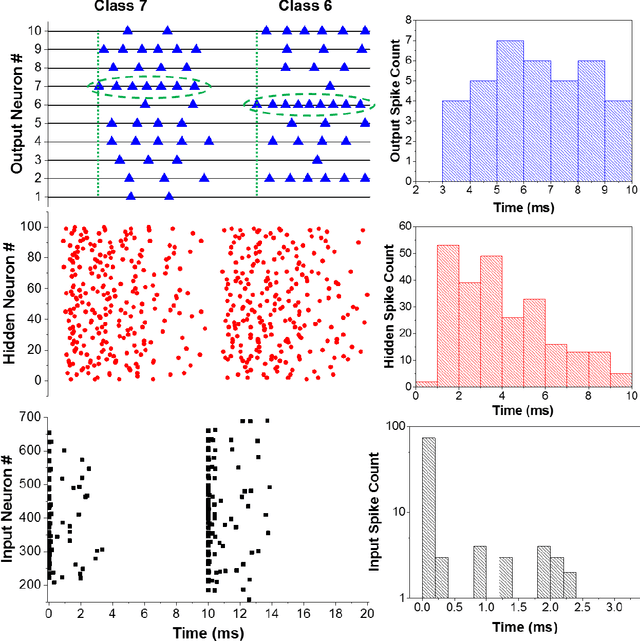

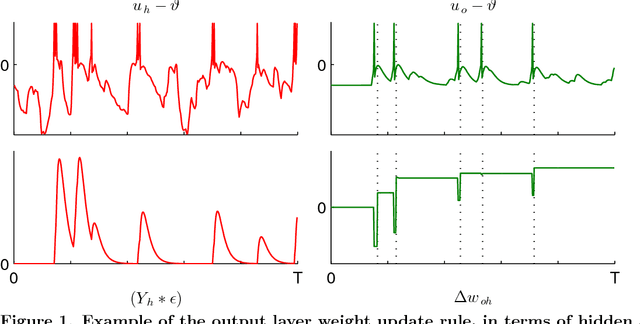

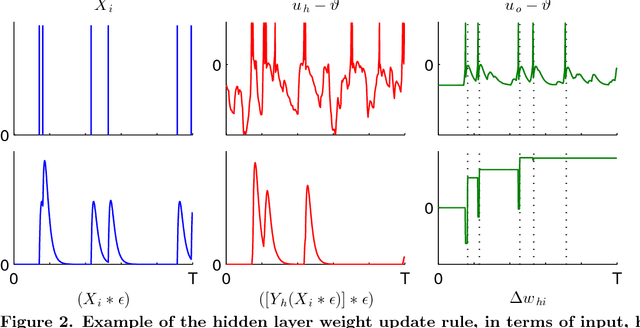

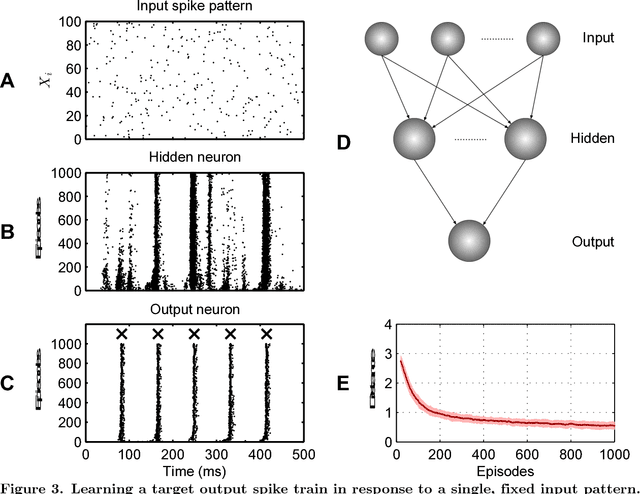

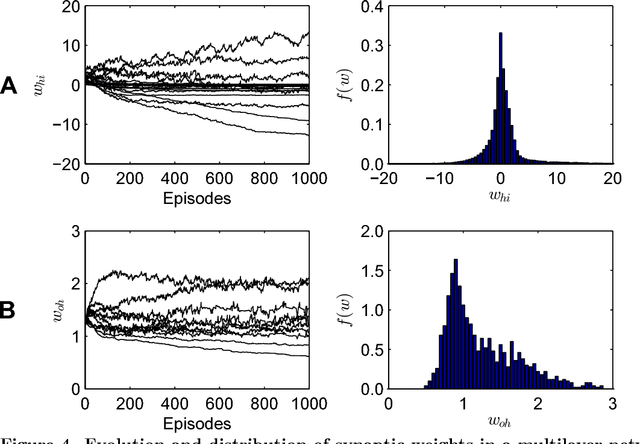

Abstract: Information encoding in the nervous system is supported through the precise spike timings of neurons; however, the underlying processes by which such representations are formed in the first place remain unclear. Here we examine how networks of spiking neurons can learn to encode input patterns using a fully temporal coding scheme. To this end, we introduce a learning rule for spiking networks containing hidden neurons which optimizes the likelihood of generating desired output spiking patterns. We show that the proposed learning rule allows a large number of accurate input-output spike pattern mappings to be learnt, outperforming other existing learning rules for spiking neural networks both in the number of mappings that can be learnt and in the complexity of spike train encodings that can be utilised. The learning rule is successful even in the presence of input noise, is demonstrated to solve the linearly non-separable XOR computation, and generalizes well on an example dataset. We further present a biologically plausible implementation of backpropagated learning in multilayer spiking networks, and discuss the neural mechanisms that might underlie its function. Our approach contributes both a systematic understanding of how pattern encodings might take place in the nervous system and a learning rule that displays strong technical capability.
