
Brandon Yam-Viramontes

Implementation of a Natural User Interface to Command a Drone

Mar 05, 2020

Figures and Tables:

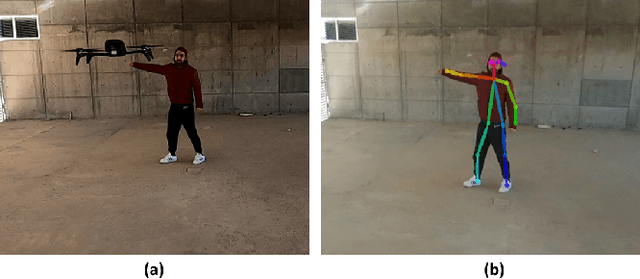

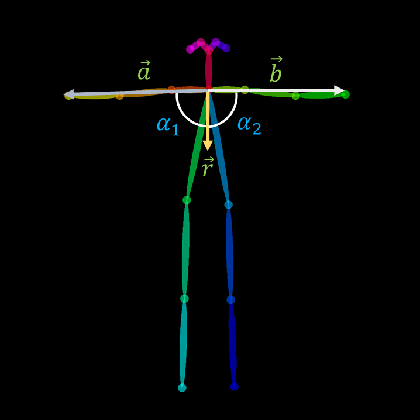

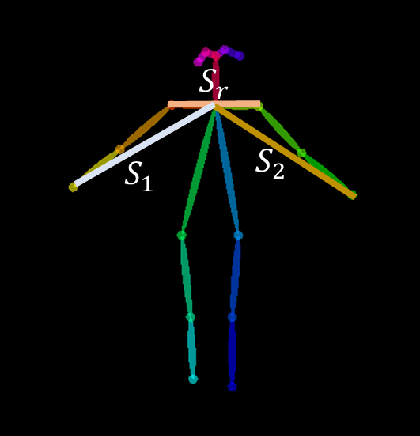

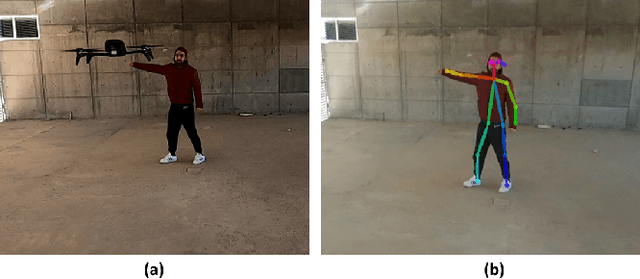

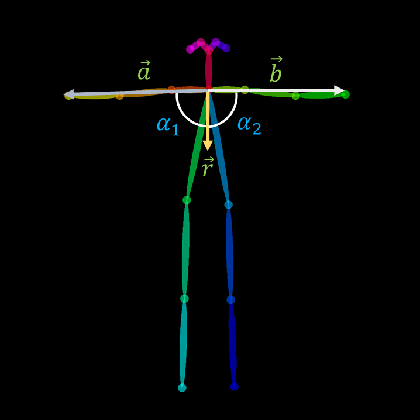

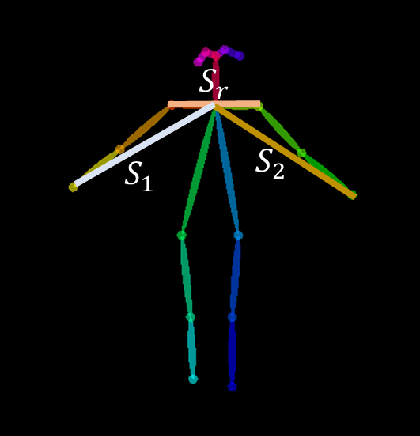

Abstract: In this work, we propose a Natural User Interface (NUI) based on body gestures, built on the open-source library OpenPose, as a more dynamic and intuitive way to control a drone. For the implementation, we use the Robot Operating System (ROS) to control and manage the different components of the project. Wrapped inside ROS, OpenPose (OP) processes the video streamed in real time by a commercial drone to estimate the user's pose. Finally, the OpenPose keypoints are translated, using geometric constraints, into high-level commands for the drone. Real-time experiments validate the full strategy.
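The abstract does not spell out the geometric constraints, so the following is only a minimal sketch of how 2D keypoints might be mapped to high-level commands. The keypoint names, the confidence threshold `MIN_CONF`, the gesture rules, and the command strings are all hypothetical and chosen for illustration; they are not the authors' rules. Keypoints are assumed to be `(x, y, confidence)` tuples in image coordinates, where `y` grows downward.

```python
from typing import Dict, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence), image coordinates

MIN_CONF = 0.3  # hypothetical threshold below which a keypoint is ignored


def classify_gesture(kp: Dict[str, Keypoint]) -> str:
    """Map a dict of named keypoints to a hypothetical high-level command."""
    needed = ("neck", "r_shoulder", "l_shoulder", "r_wrist", "l_wrist")
    # Fall back to a safe default if any required keypoint is missing or weak.
    if any(name not in kp or kp[name][2] < MIN_CONF for name in needed):
        return "hover"

    neck_y = kp["neck"][1]
    # Shoulder width gives a scale that does not depend on user distance.
    shoulder_width = abs(kp["l_shoulder"][0] - kp["r_shoulder"][0])

    # A wrist is "up" when it is above the neck (smaller y in image space).
    r_up = kp["r_wrist"][1] < neck_y
    l_up = kp["l_wrist"][1] < neck_y
    # An arm is "out" when the wrist is more than one shoulder width
    # away from its own shoulder horizontally.
    r_out = abs(kp["r_wrist"][0] - kp["r_shoulder"][0]) > shoulder_width
    l_out = abs(kp["l_wrist"][0] - kp["l_shoulder"][0]) > shoulder_width

    if r_up and l_up:
        return "ascend"
    if r_out and l_out:
        return "land"
    if r_out:
        return "move_right"
    if l_out:
        return "move_left"
    return "hover"


# Example pose: both wrists raised above the neck.
pose = {
    "neck": (100.0, 100.0, 0.9),
    "r_shoulder": (80.0, 100.0, 0.9),
    "l_shoulder": (120.0, 100.0, 0.9),
    "r_wrist": (70.0, 50.0, 0.9),
    "l_wrist": (130.0, 50.0, 0.9),
}
print(classify_gesture(pose))
```

In a ROS setup such as the one described, a function like this would sit between the node that publishes the pose estimate and the node that sends commands to the drone. Normalizing by shoulder width is one way to keep the rules independent of how far the user stands from the camera.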

* Submitted to the 2020 International Conference on Unmanned Aerial Vehicles, Athens, Greece
