Bowen Qiu

CompNet: A Designated Model to Handle Combinations of Images and Designed features

Sep 28, 2022

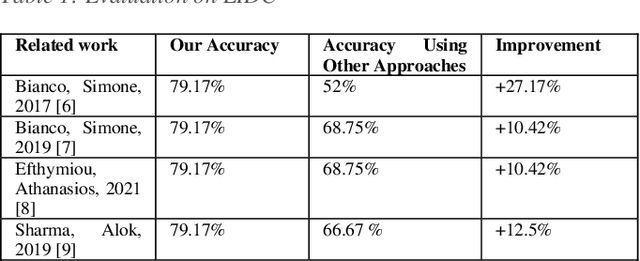

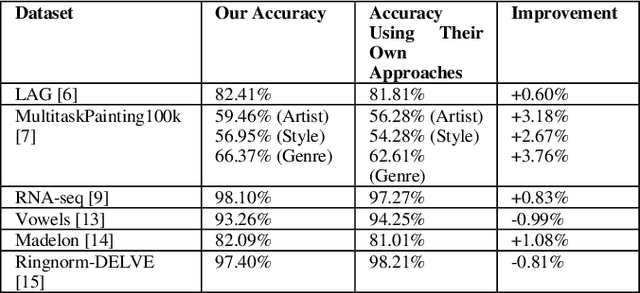

Abstract: Convolutional neural networks (CNNs) are among the most popular artificial neural network (ANN) models in computer vision (CV). Researchers have developed a variety of CNN-based architectures for problems such as image classification, object detection, and image similarity measurement. Although CNNs have proven their value in most cases, they have a downside: they overfit easily when the dataset contains too few samples, as is the case for most medical image datasets. In addition, many datasets contain both images and designed features, but CNNs can only process images directly, which is a missed opportunity to leverage the additional information. For this reason, we propose a new CNN-based model structure: CompNet, a composite convolutional neural network. It is a specially designed neural network that accepts combinations of images and designed features as input in order to leverage all available information. The novelty of this structure is that it uses features learned from the images to weight the designed features, so that information from both sources is exploited. Results on classification tasks indicate that this approach can significantly reduce overfitting. We also identified several similar approaches, proposed by other researchers, that combine images and designed features. For comparison, we first applied those approaches to the LIDC dataset and compared their results with CompNet's; we then applied CompNet to the datasets used in the original works and compared its results with those reported in the corresponding papers. In all of these comparisons, our model outperformed the similar approaches on classification tasks, both on the LIDC dataset and on the datasets those approaches were originally evaluated on.
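The central idea of the abstract, using features learned from an image to weight the designed features, can be illustrated with a small sketch. This is only one plausible reading under stated assumptions, not the CompNet architecture itself: the sigmoid gating, the projection `W`, the bias `b`, the function name `weight_designed_features`, and all dimensions are hypothetical, and the image features are random stand-ins for the output of a CNN backbone.

```python
import math
import random


def sigmoid(x):
    """Logistic function, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def weight_designed_features(image_features, designed_features, W, b):
    """Weight designed features with gates computed from image features.

    ASSUMPTION: the gating form below (linear projection + sigmoid) is an
    illustrative choice; the abstract does not specify the mechanism.

    image_features:    list of d_img floats (stand-in for CNN output)
    designed_features: list of d_feat floats (hand-crafted descriptors)
    W:                 hypothetical d_img x d_feat projection matrix
    b:                 hypothetical bias of length d_feat
    """
    gates = [
        sigmoid(sum(f * W[i][j] for i, f in enumerate(image_features)) + b[j])
        for j in range(len(designed_features))
    ]
    # Element-wise weighting: each designed feature is scaled by its gate.
    return [g * d for g, d in zip(gates, designed_features)]


random.seed(0)
d_img, d_feat = 16, 8
image_features = [random.gauss(0, 1) for _ in range(d_img)]
designed_features = [random.gauss(0, 1) for _ in range(d_feat)]
W = [[random.gauss(0, 0.1) for _ in range(d_feat)] for _ in range(d_img)]
b = [0.0] * d_feat

weighted = weight_designed_features(image_features, designed_features, W, b)
print(len(weighted))  # one weighted value per designed feature
```

In a trained model, `W` and `b` would be learned jointly with the CNN, and the weighted features would feed a classifier head; both of those parts are omitted here.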
