Boris Bogaerts

Enabling Humans to Plan Inspection Paths Using a Virtual Reality Interface

Sep 13, 2019

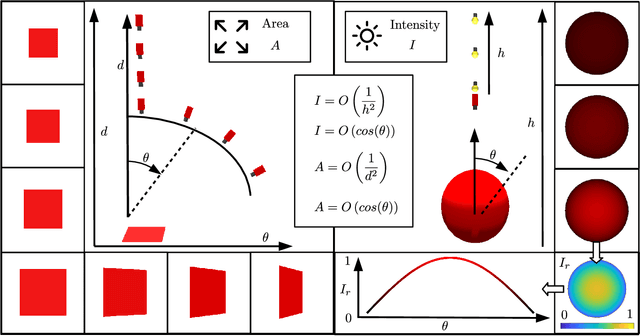

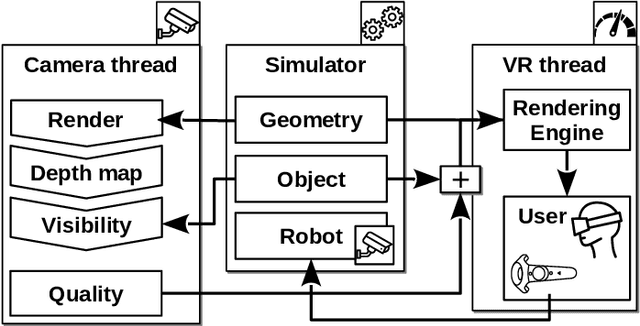

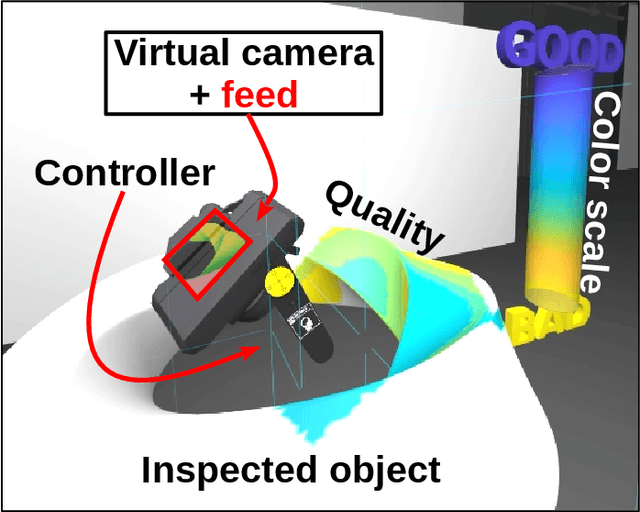

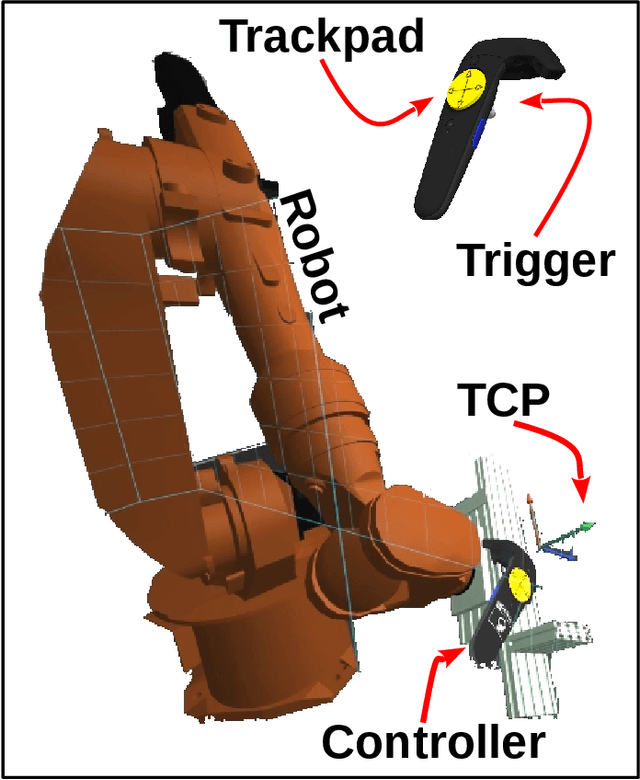

Abstract: In this work, we investigate whether humans can manually generate high-quality robot paths for optical inspections. Typically, automated algorithms are used to solve the inspection planning problem, but setting up such algorithms requires specialized knowledge from users. We aim to remove this need for specialized experience by entrusting a non-expert human user with the planning task, and we support this user with intuitive visualizations and interactions in virtual reality. To investigate whether humans can generate high-quality inspection paths, we perform a user study in which users from different experience categories generate inspection paths with the proposed virtual reality interface. Our study shows that users without experience can generate high-quality inspection paths: the median inspection quality of user-generated paths ranged between 66% and 81% of the quality of a state-of-the-art automated algorithm across various inspection planning scenarios. We noticed, however, a sizable variation in user performance, which results from some typical user behaviors. These behaviors are discussed, and possible solutions are provided.

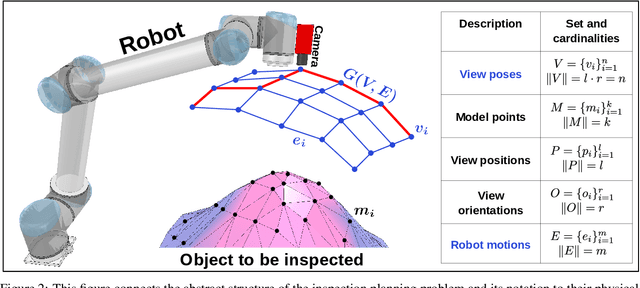

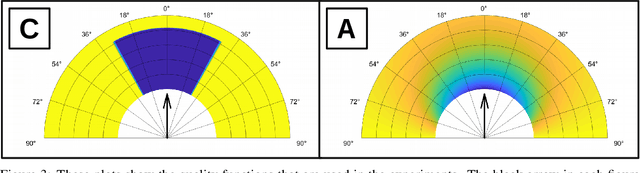

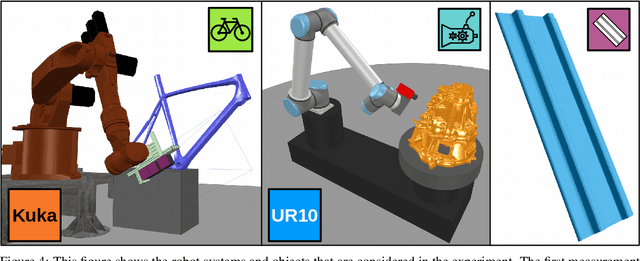

Near-Optimal Path Planning for Complex Robotic Inspection Tasks

May 14, 2019

Abstract: In this paper, we consider the problem of generating inspection paths for robots. These paths should allow an attached measurement device to perform high-quality measurements. We formally show that generating robot paths while maximizing the inspection quality naturally corresponds to the submodular orienteering problem. Traditional methods that can generate solutions with mathematical guarantees do not scale to real-world problems. In this work, we propose a method that can generate near-optimal solutions for complex real-world problems. We experimentally test this method on a wide variety of inspection problems and show that it nearly always outperforms traditional methods. We furthermore show that the near-optimality of our approach makes it more robust to changes in the inspection problem and thus more general.
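
To make the problem named in the abstract concrete, here is a minimal sketch of a submodular orienteering instance: pick a path through candidate viewpoints that maximizes a submodular coverage score while the path length stays within a budget. Everything in it is an assumption for illustration: the names `coverage` and `greedy_path`, the toy viewpoints, and the simple gain-per-distance greedy rule. It is a generic baseline heuristic with no optimality guarantee, not the method proposed in the paper.

```python
import math


def coverage(visited, covers):
    """Submodular objective: number of distinct surface elements seen."""
    seen = set()
    for v in visited:
        seen |= covers[v]
    return len(seen)


def greedy_path(positions, covers, start, budget):
    """Grow a path from `start`, always adding the viewpoint with the best
    marginal coverage gain per unit of extra travel that still fits the budget.
    Hypothetical baseline heuristic, not the paper's algorithm."""
    path = [start]
    length = 0.0
    while True:
        current = path[-1]
        base = coverage(path, covers)
        best, best_ratio, best_step = None, 0.0, 0.0
        for v in positions:
            if v in path:
                continue
            step = math.dist(positions[current], positions[v])
            if length + step > budget:
                continue  # moving to v would exceed the travel budget
            gain = coverage(path + [v], covers) - base
            ratio = gain / max(step, 1e-9)
            if ratio > best_ratio:
                best, best_ratio, best_step = v, ratio, step
        if best is None:
            return path, length  # no feasible viewpoint adds coverage
        path.append(best)
        length += best_step


# Toy instance (made-up data): 2D viewpoint positions and the surface
# elements each viewpoint can observe.
positions = {"a": (0, 0), "b": (1, 0), "c": (2, 0), "d": (0, 5)}
covers = {"a": {1, 2}, "b": {2, 3}, "c": {3, 4, 5}, "d": {6, 7, 8, 9}}

path, length = greedy_path(positions, covers, start="a", budget=3.0)
print(path, length, coverage(path, covers))
```

On this toy instance the greedy rule skips viewpoint `b` because `c` offers more new coverage per unit of distance, and it never reaches `d` because that move would exceed the budget. The diminishing marginal gain of overlapping viewpoints is what makes the objective submodular.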
