Bhuvni Shah

Fair Decision-Making for Food Inspections

Aug 12, 2021

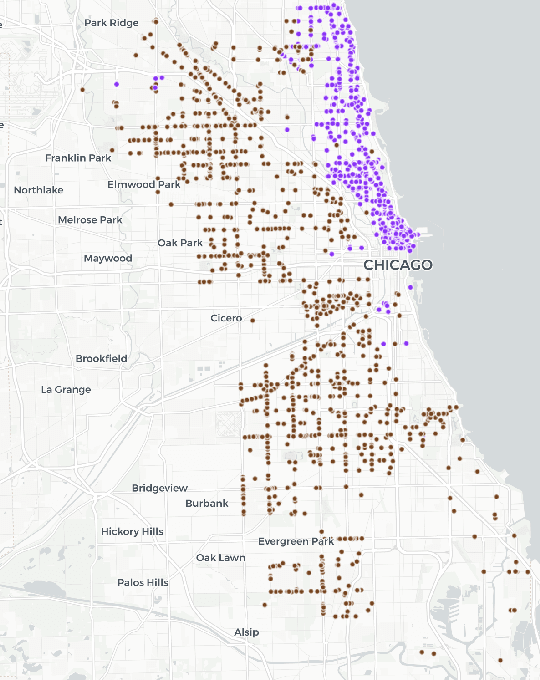

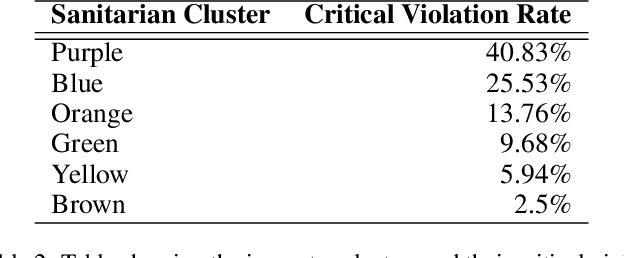

Abstract: We revisit the Chicago Department of Public Health's use of predictive models to schedule restaurant inspections and prioritize the detection of critical food code violations. In the first analysis from the perspective of fairness to the population served by the restaurants, we find that the model treats inspections unequally based on the sanitarian who conducted the inspection, and that this in turn produces both geographic and demographic disparities in the benefits of the model. We examine two classes of approaches: using the original model in a fairer way, and training the model to achieve fairness. We find more success with the former. The challenges in this application point to important directions for future work: fairness for collective entities rather than individuals, the use of critical violations as a proxy, and the disconnect between fair classification and fairness in the dynamic scheduling system.
