Benjamin James Lansdell

Learning to solve the credit assignment problem

Jun 05, 2019

Abstract: Backpropagation is driving today's artificial neural networks (ANNs). However, despite extensive research, it remains unclear whether the brain implements this algorithm. Among neuroscientists, reinforcement learning (RL) algorithms are often seen as a realistic alternative: neurons can randomly perturb their activity and use nonspecific feedback signals to observe the effect on the cost, and thus approximate its gradient. However, the convergence rate of such learning scales poorly with the number of neurons involved (e.g. O(N)). Here we propose a hybrid learning approach. Each neuron uses an RL-type strategy to learn how to approximate the gradients that backpropagation would provide -- in this way it learns to learn. We prove that our approach converges to the true gradient for certain classes of networks. In both feed-forward and recurrent networks, we empirically show that our approach learns to approximate the gradient and can match the performance of gradient-based learning. Learning to learn provides a biologically plausible mechanism for achieving good performance, without the need for precise, pre-specified learning rules.
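
The perturbation idea mentioned in the abstract (inject random changes, observe the effect on the cost, and approximate the gradient from that) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the quadratic cost, the function names `loss` and `perturbation_gradient`, and the values of `sigma` and `n_samples` are all assumptions chosen for illustration. It estimates the gradient of the cost with respect to unit activities by correlating injected noise with the resulting change in cost, then compares the estimate with the analytic gradient.

```python
import numpy as np

def loss(h, target):
    """Squared-error cost on unit activities h (an assumed toy cost)."""
    return 0.5 * np.sum((h - target) ** 2)

def perturbation_gradient(h, target, sigma=1e-3, n_samples=2000, seed=0):
    """Estimate dL/dh by correlating injected noise with the change in cost."""
    rng = np.random.default_rng(seed)
    base = loss(h, target)                 # cost without any perturbation
    estimate = np.zeros_like(h)
    for _ in range(n_samples):
        xi = rng.normal(size=h.shape)      # random perturbation of each unit
        delta = loss(h + sigma * xi, target) - base  # scalar feedback signal
        estimate += (delta / sigma) * xi   # noise weighted by its effect on cost
    return estimate / n_samples

h = np.array([0.5, -1.0, 2.0, 0.1])        # hypothetical unit activities
target = np.array([1.0, 0.0, 1.5, -0.3])   # hypothetical targets
true_grad = h - target                      # analytic gradient of the toy cost
est_grad = perturbation_gradient(h, target)
cosine = est_grad @ true_grad / (
    np.linalg.norm(est_grad) * np.linalg.norm(true_grad)
)
print("cosine similarity between estimate and true gradient:", cosine)
```

Each unit receives only the scalar change in cost, so the noise from all other units contaminates its estimate; the number of samples needed for a given accuracy therefore grows with the number of units, which is the poor scaling the abstract refers to.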

Towards learning-to-learn

Nov 01, 2018

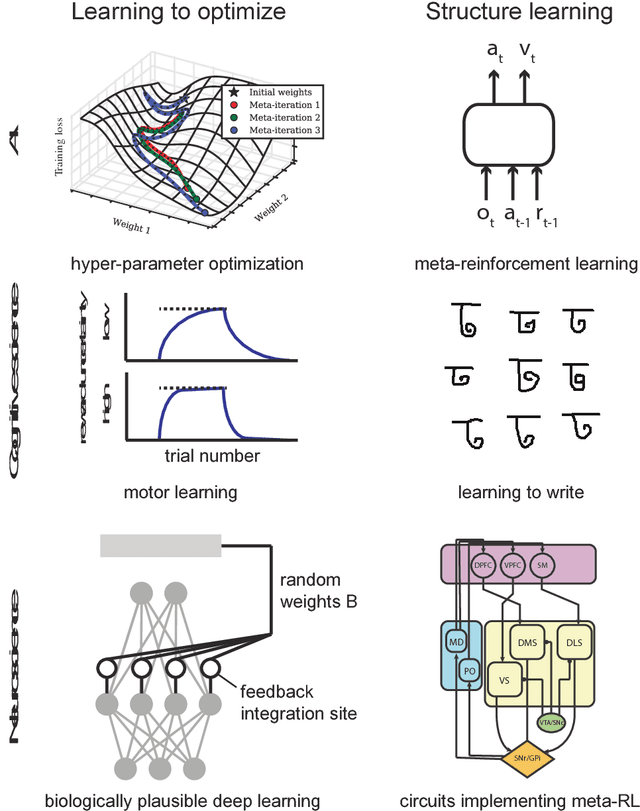

Abstract: In good old-fashioned artificial intelligence (GOFAI), humans specified systems that solved problems. Much of the recent progress in AI has come from replacing human insights with learning. However, learning itself is still usually designed by humans -- specifically, the choice that parameter updates should follow the gradient of a cost function. Yet, in analogy with GOFAI, there is no reason to believe that humans are particularly good at defining such learning systems: we may expect learning itself to be better if we learn it. Recent research in machine learning has started to realize the benefits of that strategy. We should thus expect this to be relevant for neuroscience: how could the correct learning rules be acquired? Indeed, behavioral science has long shown that humans learn to learn, which is potentially responsible for their impressive learning abilities. Here we discuss ideas across machine learning, neuroscience, and behavioral science that matter for the principle of learning-to-learn.
