Ben Hicks

Distinguishing artefacts: evaluating the saturation point of convolutional neural networks

May 21, 2021

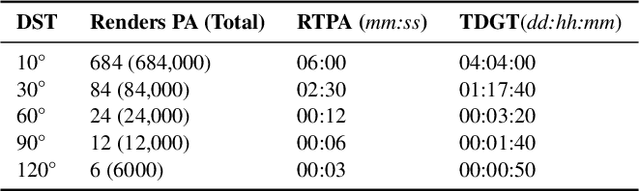

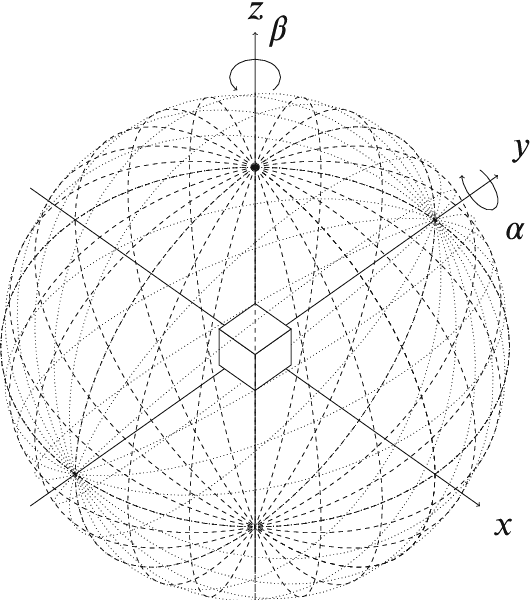

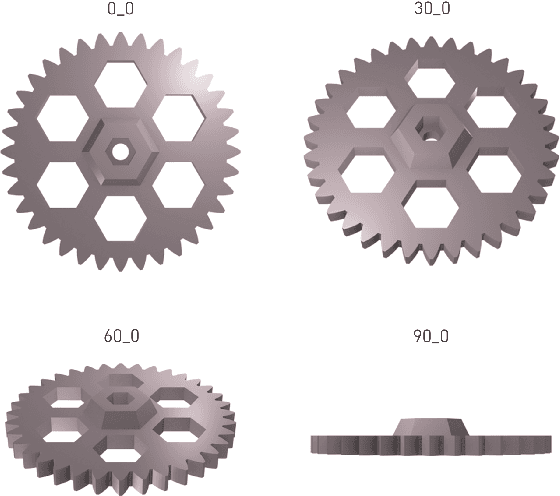

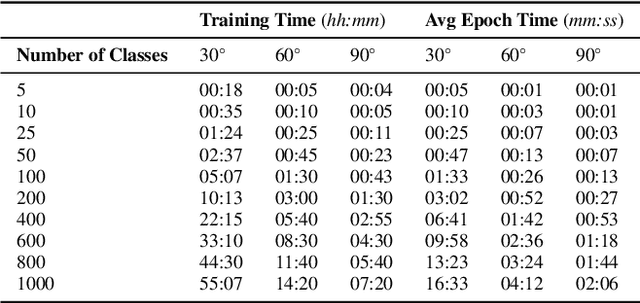

Abstract: Prior work has shown that Convolutional Neural Networks (CNNs) trained on surrogate Computer Aided Design (CAD) models can detect and classify real-world artefacts from photographs. Applications of this capability support the twinning of digital and physical assets in design, including rapid extraction of part geometry from model repositories, information search and retrieval, and identification of components in the field for maintenance, repair, and recording. The performance of CNNs in classification tasks has been shown to depend on training data set size and number of classes. Where prior works have used relatively small surrogate model data sets ($<100$ models), the question remains as to whether a CNN can differentiate between models in increasingly large model repositories. This paper presents a method for generating synthetic image data sets from online CAD model repositories, and investigates the capacity of an off-the-shelf CNN architecture trained on synthetic data to classify models as the number of classes increases. 1,000 CAD models were curated and processed to generate large-scale surrogate data sets, featuring model coverage at steps of 10$^{\circ}$, 30$^{\circ}$, 60$^{\circ}$, and 120$^{\circ}$. The findings demonstrate the capability of computer vision algorithms to classify artefacts in model repositories of up to 200 models. Beyond this point, the CNN's performance is observed to deteriorate significantly, limiting its present ability for automated twinning of physical to digital artefacts. However, a match is more often found in the top-5 results, showing potential for information search and retrieval on large repositories of surrogate models.
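The distinction the abstract draws between an exact match and a match within the top-5 results can be illustrated with a short sketch. The function name `top_k_accuracy` and the toy scores below are hypothetical and are not taken from the paper; the sketch only assumes that a classifier outputs one score per class for each image.

```python
from typing import Sequence


def top_k_accuracy(
    scores: Sequence[Sequence[float]],
    labels: Sequence[int],
    k: int = 5,
) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not scores:
        raise ValueError("at least one sample is required")
    hits = 0
    for row, label in zip(scores, labels):
        # Rank class indices by descending score and keep the first k.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Hypothetical toy data: 3 images, 4 classes (illustrative values only).
scores = [
    [0.1, 0.6, 0.2, 0.1],  # true class 1 is ranked first
    [0.4, 0.3, 0.2, 0.1],  # true class 1 is ranked second
    [0.1, 0.2, 0.3, 0.4],  # true class 0 is ranked last
]
labels = [1, 1, 0]

top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)
```

In this toy case the top-2 figure is higher than the top-1 figure because the second image's true class is ranked second. The same effect, at k=5, is what makes retrieval-style use plausible even when exact classification degrades.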
