Bei Lin

Multi-View Graph Representation Learning Beyond Homophily

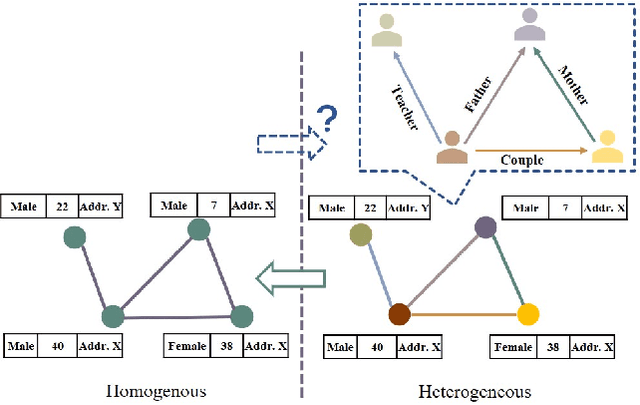

Apr 15, 2023

Abstract: Unsupervised graph representation learning (GRL) aims to distill diverse graph information into task-agnostic embeddings without label supervision. Lacking support from labels, recent representation learning methods usually adopt self-supervised learning, in which embeddings are learned by solving a handcrafted auxiliary task (a so-called pretext task). However, partly because of the irregular, non-Euclidean nature of graph data, pretext tasks are generally designed under homophily assumptions and confined to low-frequency signals, which results in a significant loss of other signals, especially the high-frequency signals widespread in graphs with heterophily. Motivated by this limitation, we propose a multi-view perspective and the use of diverse pretext tasks to capture different signals in graphs into embeddings. A novel framework, denoted Multi-view Graph Encoder (MVGE), is proposed, and a set of key designs is identified. More specifically, a set of new pretext tasks is designed to encode different types of signals, and a straightforward operation is proposed to maintain both commonality and personalization at both the attribute and structural levels. Extensive experiments on synthetic and real-world network datasets show that node representations learned with MVGE achieve significant performance improvements in three different downstream tasks, especially on graphs with heterophily. Source code is available at https://github.com/G-AILab/MVGE.
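
To make the low-frequency versus high-frequency distinction concrete, here is a minimal NumPy sketch. It is not the MVGE method. It assumes a common textbook reading in which the "low-frequency" part of a node signal is its average over the node and its neighbors, and the "high-frequency" part is what remains. The function name `split_frequencies` and the toy graph are hypothetical, chosen only for illustration.

```python
import numpy as np


def split_frequencies(adj, x):
    """Split node features into a smooth part and a residual part.

    Assumption (not from the paper): the low-frequency part is the mean
    over each node and its neighbors; the high-frequency part is the rest.

    adj: (n, n) symmetric 0/1 adjacency matrix without self-loops.
    x:   (n, d) node feature matrix.
    """
    a_hat = adj + np.eye(adj.shape[0])        # add self-loops
    deg_hat = a_hat.sum(axis=1, keepdims=True)
    low = a_hat @ x / deg_hat                 # neighborhood mean (smooth part)
    high = x - low                            # deviation from the neighborhood
    return low, high


# Path graph 0 - 1 - 2 - 3 whose features alternate in sign,
# a simple heterophilous pattern: neighbors always disagree.
adj = np.array(
    [
        [0, 1, 0, 0],
        [1, 0, 1, 0],
        [0, 1, 0, 1],
        [0, 0, 1, 0],
    ],
    dtype=float,
)
x = np.array([[1.0], [-1.0], [1.0], [-1.0]])

low, high = split_frequencies(adj, x)
print("low-frequency part: ", low.ravel())
print("high-frequency part:", high.ravel())
```

On this alternating signal, most of the magnitude ends up in the high-frequency part, which is the kind of information a purely smoothing-based pretext task would tend to discard.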

Graph Representation Learning Beyond Node and Homophily

Mar 03, 2022

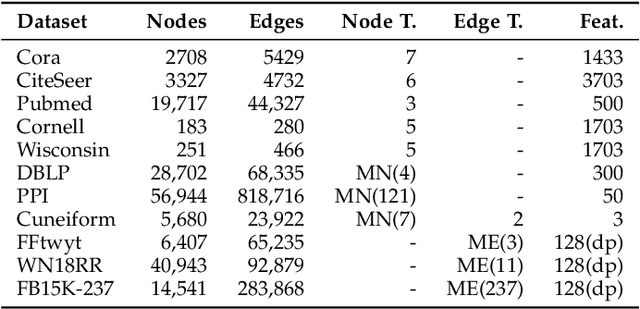

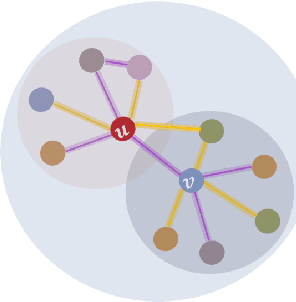

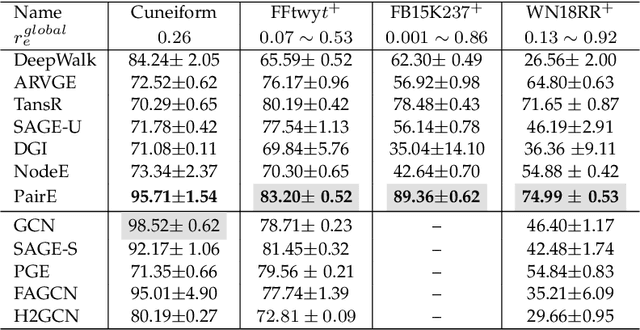

Abstract: Unsupervised graph representation learning aims to distill various graph information into a dense vector embedding that is agnostic to downstream tasks. However, existing graph representation learning approaches are designed mainly under the node homophily assumption (connected nodes tend to have similar labels) and are optimized for node-centric downstream tasks. This design runs against the task-agnostic principle and generally suffers from poor performance in tasks, e.g., edge classification, that demand feature signals beyond the node view and the homophily assumption. To condense different feature signals into the embeddings, this paper proposes PairE, a novel unsupervised graph embedding method that uses two paired nodes as the basic unit of embedding, retaining the high-frequency signals between nodes to support both node-related and edge-related tasks. Accordingly, a multi-self-supervised autoencoder is designed to fulfill two pretext tasks: one better retains the high-frequency signal, and the other enhances the representation of commonality. Extensive experiments on a variety of benchmark datasets show that PairE outperforms the unsupervised state-of-the-art baselines, with up to 101.1% relative improvement on edge classification tasks that rely on both the high- and low-frequency signals in the pair, and up to 82.5% relative performance gain on node classification tasks.
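
The node homophily assumption mentioned above ("connected nodes tend to have similar labels") can be quantified with a simple statistic. The sketch below computes the edge homophily ratio, i.e. the fraction of edges whose endpoints share a label. This is a widely used measure but it is an assumption here that it matches the authors' notion. The function name `edge_homophily` and the toy graph are hypothetical.

```python
def edge_homophily(edges, labels):
    """Return the fraction of edges whose two endpoints share a label.

    edges:  list of (u, v) node-index pairs, one per undirected edge.
    labels: sequence mapping node index -> class label.
    """
    if not edges:
        raise ValueError("edge list must not be empty")
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)


# A 4-cycle: 0 - 1 - 2 - 3 - 0
edges = [(0, 1), (1, 2), (2, 3), (3, 0)]

h_uniform = edge_homophily(edges, [0, 0, 0, 0])      # every edge agrees
h_mixed = edge_homophily(edges, [0, 0, 1, 1])        # half of the edges agree
h_alternating = edge_homophily(edges, [0, 1, 0, 1])  # no edge agrees

print(h_uniform, h_mixed, h_alternating)
```

A ratio near 1 indicates a homophilous graph, and a ratio near 0 indicates a heterophilous one, the regime in which both abstracts report that homophily-based designs lose information.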
