Behrouz Far

Deep Learning Approach for Aggressive Driving Behaviour Detection

Nov 08, 2021

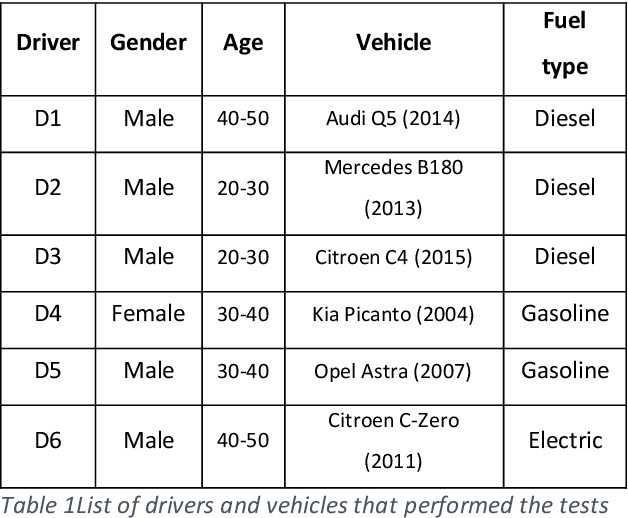

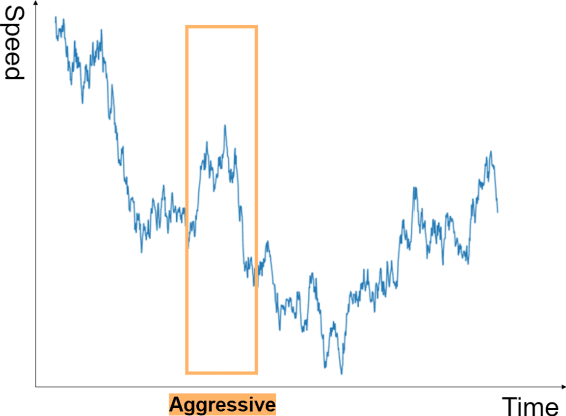

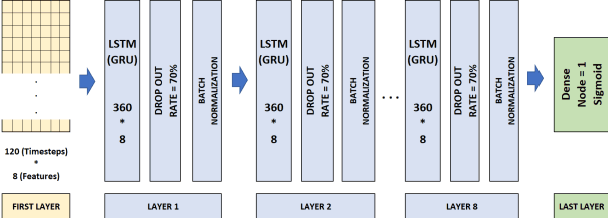

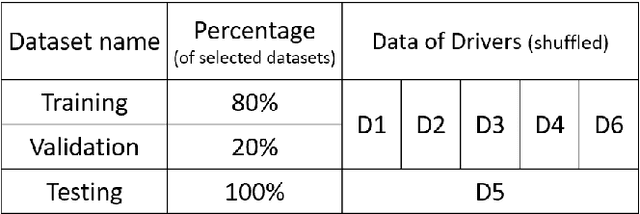

Abstract: Driving behaviour is one of the primary causes of road crashes and accidents, and these can be reduced by identifying and minimizing aggressive driving behaviour. This study identifies the timesteps at which a driver in different circumstances (rush, mental conflicts, reprisal) begins to drive aggressively. Discovering aggressive driving occasions normally requires an observer (real or virtual) to examine driving behaviour; we remove this requirement by using a smartphone's GPS sensor to detect locations and classify drivers' driving behaviour every three minutes. To detect time-series patterns in our dataset, we employ recurrent neural network (RNN) algorithms (GRU, LSTM) to identify patterns over the course of driving. The algorithm is independent of road, vehicle, position, or driver characteristics. We conclude that three minutes (or more) of driving (120 seconds of GPS data) is sufficient to identify driver behaviour. The results show high accuracy and a high F1 score.
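The abstract applies GRU and LSTM recurrent networks to windows of GPS data. As a hedged sketch of the GRU recurrence only (not the authors' model), the block below runs a forward pass of a single-layer GRU with a softmax head over one window. The per-timestep features (speed, acceleration, heading change), the assumed 1 Hz sampling that yields 120 timesteps, the hidden size, and the two class labels are all hypothetical, and the weights are random and untrained, so the output probabilities carry no meaning until the model is trained.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRUClassifier:
    """Minimal single-layer GRU + softmax head with random, untrained weights (illustration only)."""

    def __init__(self, n_features, n_hidden, n_classes, seed=0):
        rng = np.random.default_rng(seed)
        scale = 0.1
        # One input matrix, recurrent matrix, and bias per gate:
        # update gate (z), reset gate (r), candidate state (n).
        self.W = {g: rng.normal(0, scale, (n_hidden, n_features)) for g in "zrn"}
        self.U = {g: rng.normal(0, scale, (n_hidden, n_hidden)) for g in "zrn"}
        self.b = {g: np.zeros(n_hidden) for g in "zrn"}
        self.W_out = rng.normal(0, scale, (n_classes, n_hidden))
        self.b_out = np.zeros(n_classes)
        self.n_hidden = n_hidden

    def forward(self, window):
        """window: array of shape (timesteps, n_features); returns class probabilities."""
        h = np.zeros(self.n_hidden)
        for x in window:
            z = sigmoid(self.W["z"] @ x + self.U["z"] @ h + self.b["z"])  # update gate
            r = sigmoid(self.W["r"] @ x + self.U["r"] @ h + self.b["r"])  # reset gate
            # Candidate state: the reset gate scales the previous state before the recurrent matrix.
            n = np.tanh(self.W["n"] @ x + self.U["n"] @ (r * h) + self.b["n"])
            h = (1.0 - z) * n + z * h  # interpolate between candidate and previous state
        logits = self.W_out @ h + self.b_out
        exp = np.exp(logits - logits.max())  # numerically stable softmax
        return exp / exp.sum()


# Hypothetical window: 120 one-second samples of (speed, acceleration, heading change).
window = np.random.default_rng(1).normal(size=(120, 3))
model = GRUClassifier(n_features=3, n_hidden=16, n_classes=2)
probs = model.forward(window)  # hypothetical order: [P(normal), P(aggressive)]
print(probs)
```

Only the final hidden state feeds the classifier, which matches a many-to-one setup where each window receives one label; swapping the cell for an LSTM would change the gate equations but not this overall structure.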

A Load Balanced Recommendation Approach

May 20, 2021

Abstract: Recommender systems (RSs) are software tools and algorithms developed to alleviate the problem of information overload, which makes it difficult for a user to make the right decisions. The two main paradigms for the recommendation problem are collaborative filtering and content-based filtering, which try to recommend the best items using the available ratings and content. These methods typically face well-known problems including cold start, lack of diversity, poor scalability, and high computational cost. We argue that the uptake of deep learning and reinforcement learning methods is also questionable due to their computational complexity and lack of interpretability. In this paper, we approach the recommendation problem from a new perspective. We borrow ideas from cluster head selection algorithms in wireless sensor networks and adapt them to the recommendation problem. In particular, we propose the Load Balanced Recommender System (LBRS), which uses a probabilistic scheme for item recommendation. Furthermore, we factor in the importance of items in the recommendation process, which significantly improves recommendation accuracy. We also introduce a method that considers heterogeneity among items in order to balance the trade-off between similarity and diversity. Finally, we propose a new diversity metric that emphasizes diversity not only from an intra-list perspective but also from a between-list point of view. Through simulation experiments performed on RecSim, we show that LBRS is effective and can outperform baseline methods.
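LBRS borrows from cluster head selection in wireless sensor networks. A well-known example of such a scheme is the LEACH threshold, T = p / (1 - p (r mod 1/p)), applied only to nodes that have not yet been cluster heads in the current epoch of 1/p rounds. The sketch below is a hypothetical adaptation that treats items as nodes and "being recommended" as "becoming a cluster head"; it is not the LBRS algorithm itself, and it leaves out item importance and heterogeneity. The names `leach_threshold` and `recommend_round`, the catalogue, and the epoch length are illustrative assumptions.

```python
import random


def leach_threshold(round_idx, epoch_len):
    # LEACH-style threshold T = p / (1 - p * (r mod 1/p)) with p = 1 / epoch_len,
    # which simplifies exactly to 1 / (epoch_len - r mod epoch_len).
    return 1.0 / (epoch_len - round_idx % epoch_len)


def recommend_round(items, eligible, round_idx, epoch_len, rng):
    # At the start of each epoch, every item becomes eligible again.
    if round_idx % epoch_len == 0:
        eligible.clear()
        eligible.update(items)
    t = leach_threshold(round_idx, epoch_len)
    # Each still-eligible item is recommended independently with probability t.
    chosen = [item for item in items if item in eligible and rng.random() < t]
    # Items recommended in this epoch rest until the next epoch (load balancing).
    eligible.difference_update(chosen)
    return chosen


rng = random.Random(0)
catalogue = [f"item{i}" for i in range(8)]  # hypothetical catalogue
eligible = set()
for r in range(4):  # one epoch of 4 rounds, i.e. p = 0.25
    print(r, recommend_round(catalogue, eligible, r, epoch_len=4, rng=rng))
```

Because the threshold rises to 1 in the last round of an epoch, every item is recommended exactly once per epoch, and the expected number of recommendations per round stays at p times the catalogue size. This is the load-balancing property that makes cluster head selection a plausible analogy for spreading exposure across items.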

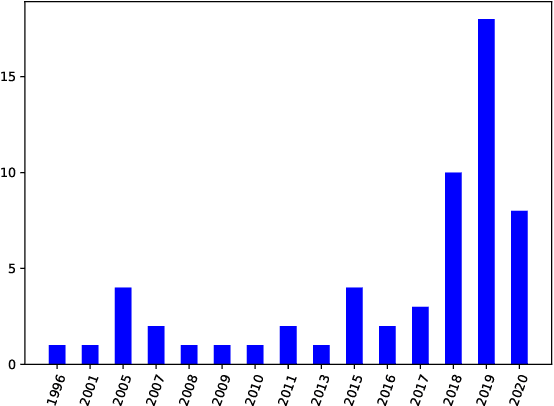

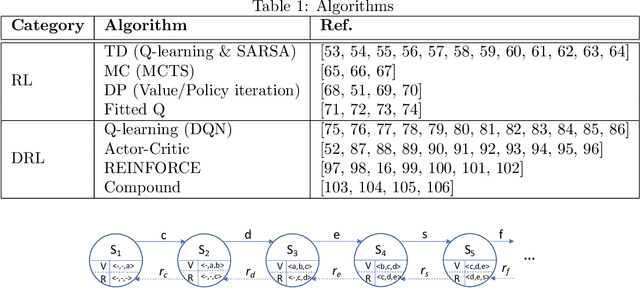

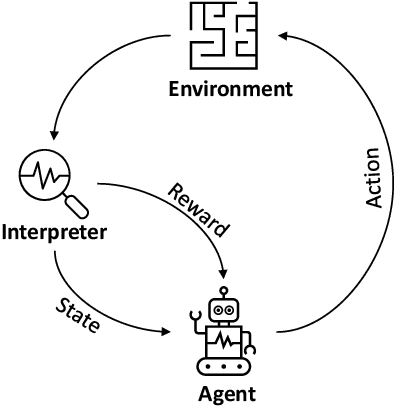

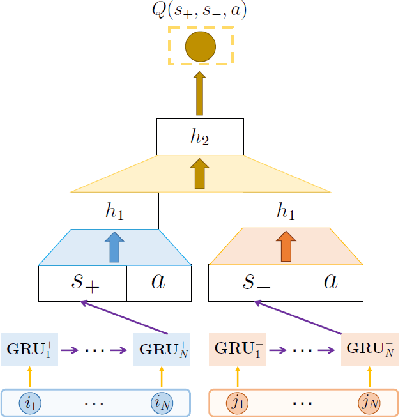

Reinforcement learning based recommender systems: A survey

Jan 15, 2021

Abstract: Recommender systems (RSs) are becoming an inseparable part of our everyday lives. They help us find our favorite items to purchase, our friends on social networks, and our favorite movies to watch. Traditionally, the recommendation problem was considered a simple classification or prediction problem; however, its sequential nature has since been demonstrated. Accordingly, it can be formulated as a Markov decision process (MDP), and reinforcement learning (RL) methods can be employed to solve it. In fact, recent advances in combining deep learning with traditional RL methods, i.e., deep reinforcement learning (DRL), have made it possible to apply RL to recommendation problems with massive state and action spaces. In this paper, we present a survey of reinforcement learning based recommender systems (RLRSs). We first observe that algorithms developed for RLRSs can generally be classified into RL- and DRL-based methods. We then present these methods in a classified manner according to the specific RL algorithm used to optimize the recommendation policy, e.g., Q-learning, SARSA, and REINFORCE. Furthermore, we provide tables containing detailed information about the MDP formulation of these methods and their evaluation schemes. Finally, we discuss important aspects and challenges that can be addressed in future work.
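The abstract frames recommendation as an MDP solvable with RL algorithms such as Q-learning. As a hedged toy illustration, not taken from any surveyed system, the sketch below runs tabular Q-learning on a tiny MDP: the state is the item the user consumed last, the action is the recommended item, and the reward is 1 for a click. The simulated user `simulate_user`, the catalogue size `N_ITEMS`, and all hyperparameters are hypothetical.

```python
import random

N_ITEMS = 4  # hypothetical catalogue size


def simulate_user(state, action):
    # Hypothetical user: clicks only the item that follows the last one consumed.
    if action == (state + 1) % N_ITEMS:
        return 1.0, action  # click: reward 1, recommended item becomes the new state
    return 0.0, state  # skip: reward 0, state unchanged


def q_learning(episodes=2000, steps=10, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * N_ITEMS for _ in range(N_ITEMS)]  # Q[state][action]
    for _ in range(episodes):
        s = rng.randrange(N_ITEMS)  # random starting state
        for _ in range(steps):
            if rng.random() < epsilon:
                a = rng.randrange(N_ITEMS)  # explore
            else:
                a = max(range(N_ITEMS), key=lambda x: q[s][x])  # exploit
            r, s_next = simulate_user(s, a)
            # Off-policy TD target: reward plus discounted greedy value of the next state.
            td_target = r + gamma * max(q[s_next])
            q[s][a] += alpha * (td_target - q[s][a])
            s = s_next
    return q


def greedy_policy(q):
    # Recommendation policy: the highest-valued item for each state.
    return [max(range(N_ITEMS), key=lambda a: q[s][a]) for s in range(N_ITEMS)]


q_table = q_learning()
print(greedy_policy(q_table))  # expected to recover the rule s -> (s + 1) mod N_ITEMS
```

With these settings, the value of the "correct" recommendation converges toward 1 / (1 - gamma) = 10 and every other action toward gamma times that, so the greedy policy should recommend item s + 1 after item s. Swapping the max in the TD target for the value of the action actually taken next would turn this into SARSA, another algorithm the survey uses as a category.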