Behdad Dashtbozorg

Automatic Prostate Volume Estimation in Transabdominal Ultrasound Images

Feb 11, 2025

Abstract: Prostate cancer is a leading health concern among men, requiring accurate and accessible methods for early detection and risk stratification. Prostate volume (PV) is a key parameter in multivariate risk stratification for early prostate cancer detection and is commonly estimated using transrectal ultrasound (TRUS). While TRUS provides precise PV measurements, its invasive nature often compromises patient comfort. Transabdominal ultrasound (TAUS) provides a non-invasive alternative but faces challenges such as lower image quality, complex interpretation, and reliance on operator expertise. This study introduces a new deep-learning-based framework for automatic PV estimation using TAUS, emphasizing its potential to enable accurate and non-invasive prostate cancer risk stratification. A dataset of TAUS videos from 100 individual patients was curated, with prostate boundaries manually delineated and diameters calculated by an expert clinician as ground truth. The framework integrates deep-learning models for prostate segmentation in both the axial and sagittal planes, automatic prostate diameter estimation, and PV calculation. Segmentation performance was evaluated using the Dice similarity coefficient (%) and the Hausdorff distance (mm), and volume estimation was evaluated using the volumetric error (mL). The framework estimates PV from TAUS videos with a mean volumetric error of -5.5 mL, corresponding to an average relative error between 5% and 15%. These results show that the framework effectively segments the prostate and estimates its volume, offering potential for reliable, non-invasive risk stratification for early prostate cancer detection.
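The evaluation quantities named in this abstract can be made concrete with a small sketch. The abstract does not say which volume formula is used; the code below assumes the ellipsoid formula V = π/6 · L · W · H that is common in clinical prostate ultrasound, with the three diameters in millimetres. The helper names (`dice_coefficient`, `hausdorff_distance`, `ellipsoid_volume_ml`, `volume_errors`) are hypothetical, and the error sign convention (estimate minus reference, so negative means underestimation) is also an assumption.

```python
import numpy as np

def dice_coefficient(pred: np.ndarray, truth: np.ndarray) -> float:
    """Dice similarity coefficient (%) between two binary segmentation masks."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 100.0  # both masks empty: treat as perfect agreement
    return float(200.0 * np.logical_and(pred, truth).sum() / denom)

def hausdorff_distance(points_a, points_b, spacing_mm: float = 1.0) -> float:
    """Symmetric Hausdorff distance (mm) between two sets of boundary pixel coordinates.

    Assumes isotropic pixel spacing; uses a dense pairwise distance matrix,
    which is fine for contour-sized point sets.
    """
    a = np.asarray(points_a, dtype=float) * spacing_mm
    b = np.asarray(points_b, dtype=float) * spacing_mm
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    # Largest nearest-neighbour distance in either direction.
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))

def ellipsoid_volume_ml(length_mm: float, width_mm: float, height_mm: float) -> float:
    """Assumed ellipsoid formula V = pi/6 * L * W * H, converted from mm^3 to mL."""
    return float(np.pi / 6.0 * length_mm * width_mm * height_mm / 1000.0)

def volume_errors(estimated_ml: float, reference_ml: float):
    """Volumetric error (mL) and relative error (%) of an estimate vs. the reference."""
    error = estimated_ml - reference_ml
    return error, 100.0 * error / reference_ml
```

For example, assumed diameters of 40 × 45 × 35 mm give roughly 33.0 mL. Taking the width from the axial plane and the length and height from the sagittal plane is one plausible way to combine the two segmented views, not necessarily what the framework does.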

Deformable multi-modal image registration for the correlation between optical measurements and histology images

Nov 24, 2023

Abstract: The correlation of optical measurements with a correct pathology label is often hampered by imprecise registration caused by deformations in histology images. This study explores an automated multi-modal image registration technique based on deep learning to align snapshot images of breast specimens with the corresponding histology images. Because the input images are acquired with different modalities, they differ in intensities and in which structures are visible, making linear assumptions inappropriate. Unsupervised and supervised learning approaches based on the VoxelMorph model were explored, using a dataset of manually registered images as ground truth. Evaluation metrics, including Dice scores and mutual information, reveal that the unsupervised model significantly outperforms both the supervised model and the manual approach, achieving superior image alignment. This automated registration approach holds promise for improving the validation of optical technologies by minimizing the human errors and inconsistencies associated with manual registration.
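Mutual information suits this multi-modal setting because it rewards a consistent statistical relationship between intensities rather than equal intensities. A minimal sketch, assuming two equally sized grayscale images and a joint-histogram estimate with 32 bins (the bin count and the function name `mutual_information` are illustrative choices, not taken from the study):

```python
import numpy as np

def mutual_information(img_a: np.ndarray, img_b: np.ndarray, bins: int = 32) -> float:
    """Histogram-based mutual information (in nats) between two aligned images."""
    joint, _, _ = np.histogram2d(img_a.ravel(), img_b.ravel(), bins=bins)
    pxy = joint / joint.sum()          # joint intensity distribution
    px = pxy.sum(axis=1)               # marginal of image A
    py = pxy.sum(axis=0)               # marginal of image B
    px_py = px[:, None] * py[None, :]  # product of marginals (independence model)
    nz = pxy > 0                       # skip empty bins to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / px_py[nz])))
```

An image and its intensity-inverted copy score about as high as the image with itself, while an unrelated image scores near zero. This is why the metric is preferred over intensity differences when contrast differs between modalities.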

Point Projection Mapping System for Tracking, Registering, Labeling and Validating Optical Tissue Measurements

Nov 22, 2023

Abstract: Validating newly developed optical tissue sensing techniques for tumor detection during cancer surgery requires an accurate correlation with histological results. Such accurate correlation also enables precise data labeling for developing high-performance machine-learning tissue classification models. In this paper, a newly developed Point Projection Mapping system is introduced, which allows non-destructive tracking of the measurement locations on tissue specimens. In addition, a framework for accurate registration, validation, and labeling with histopathology results is proposed and validated on a case study. The proposed framework provides a more robust and accurate method for tracking and validating optical tissue sensing techniques, saving time and resources compared with available conventional techniques.
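The abstract does not describe how tracked measurement locations are transferred to the histology image. One generic way to do it is a least-squares 2D affine fit on corresponding landmarks, which is then applied to the measurement points. The sketch below uses that swapped-in technique. The landmark correspondences and the helper names `fit_affine_2d`, `apply_affine_2d`, and `registration_residual` are hypothetical.

```python
import numpy as np

def fit_affine_2d(src, dst) -> np.ndarray:
    """Least-squares 2D affine transform mapping src (N, 2) onto dst (N, 2); needs N >= 3."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    design = np.hstack([src, np.ones((len(src), 1))])  # rows [x, y, 1]
    params, *_ = np.linalg.lstsq(design, dst, rcond=None)
    return params  # shape (3, 2): [x', y'] = [x, y, 1] @ params

def apply_affine_2d(params: np.ndarray, points) -> np.ndarray:
    """Map (N, 2) points, e.g. optical measurement locations, with a fitted transform."""
    pts = np.asarray(points, dtype=float)
    return np.hstack([pts, np.ones((len(pts), 1))]) @ params

def registration_residual(params: np.ndarray, src, dst) -> float:
    """Root-mean-square landmark distance after mapping, as a simple validation check."""
    mapped = apply_affine_2d(params, src)
    diff = mapped - np.asarray(dst, dtype=float)
    return float(np.sqrt(np.mean(np.sum(diff ** 2, axis=1))))
```

An affine model cannot capture local tissue deformation. Where histology processing distorts the specimen, a deformable method would be needed instead.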

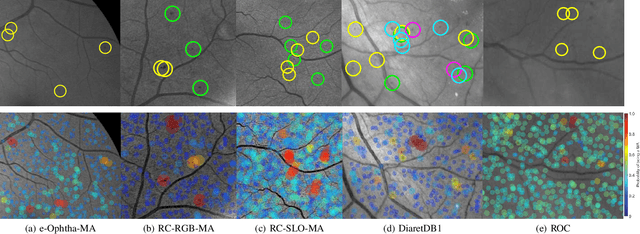

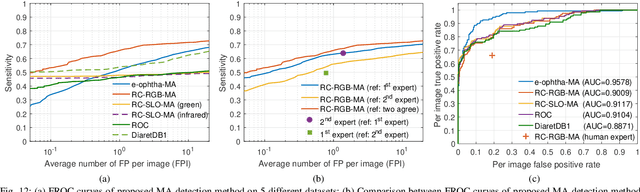

Retinal Microaneurysms Detection using Local Convergence Index Features

Jul 21, 2017

Abstract: Retinal microaneurysms (MAs) are the earliest clinical sign of diabetic retinopathy. Detecting microaneurysms is crucial for the early diagnosis of diabetic retinopathy and the prevention of blindness. In this paper, a novel and reliable method for the automatic detection of microaneurysms in retinal images is proposed. In the first stage, preliminary microaneurysm candidates are extracted using a gradient weighting technique and an iterative thresholding approach. In the next stage, in addition to intensity and shape descriptors, a new set of features based on local convergence index filters is extracted for each candidate. Finally, the combined feature set is fed to a hybrid sampling/boosting classifier to discriminate MAs from non-MA candidates. The method is evaluated on images of different resolutions and modalities (RGB and SLO) using five publicly available datasets, including the Retinopathy Online Challenge (ROC) dataset. The proposed method achieves an average sensitivity score of 0.471 on the ROC dataset, outperforming state-of-the-art approaches in an extensive comparison. The experimental results on the other four datasets demonstrate the effectiveness and robustness of the proposed method across image resolutions and modalities.
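The ROC "average sensitivity" score is the FROC-style average used by the Retinopathy Online Challenge. This sketch assumes that average is taken at seven false-positive-per-image rates (1/8, 1/4, 1/2, 1, 2, 4, 8). It also assumes each true-positive candidate hits a distinct lesion. The function name and inputs are hypothetical.

```python
import numpy as np

# Assumed false-positive-per-image operating points of the ROC competition score.
FPI_LEVELS = (1 / 8, 1 / 4, 1 / 2, 1, 2, 4, 8)

def froc_average_sensitivity(scores, is_true_lesion, n_images, n_lesions,
                             levels=FPI_LEVELS) -> float:
    """Mean sensitivity at fixed false-positive-per-image rates.

    scores: classifier confidence per candidate (pooled over all images).
    is_true_lesion: whether each candidate matches a distinct reference lesion.
    Ties in scores are treated as if ranked individually (a simplification).
    """
    scores = np.asarray(scores, dtype=float)
    hits = np.asarray(is_true_lesion, dtype=bool)
    order = np.argsort(-scores, kind="stable")  # lower the threshold step by step
    tp = np.cumsum(hits[order])
    fp = np.cumsum(~hits[order])
    sensitivity = tp / n_lesions
    fp_per_image = fp / n_images
    per_level = []
    for level in levels:
        ok = fp_per_image <= level  # operating points within the FP budget
        per_level.append(sensitivity[ok].max() if ok.any() else 0.0)
    return float(np.mean(per_level))
```

For example, four candidates on one image, ranked hit, miss, hit, miss, against two reference lesions reach 50% sensitivity below one false positive per image and 100% from one onward, averaging 5.5 / 7 ≈ 0.786.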
