Ayesha Gurnani

ViS-HuD: Using Visual Saliency to Improve Human Detection with Convolutional Neural Networks

Apr 18, 2018

Abstract: The paper presents a technique to improve human detection in still images using deep learning. Our novel method, ViS-HuD, computes a visual saliency map from the image. The input image is then multiplied by the map, and the product is fed to a Convolutional Neural Network (CNN) that detects humans in the image. The visual saliency map is generated using ML-Net, and human detection is carried out using DetectNet. ML-Net is pre-trained on SALICON for visual saliency detection, while DetectNet is pre-trained on the ImageNet database for image classification. The CNNs of ViS-HuD were trained on two challenging databases: Penn Fudan and the TUD-Brussels benchmark. Experimental results demonstrate that the proposed method achieves state-of-the-art performance on the Penn Fudan dataset with 91.4% human detection accuracy, and an average miss rate of 53% on the TUD-Brussels benchmark.
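The multiplication step described in the abstract can be illustrated with a minimal NumPy sketch. The helper name `apply_saliency`, the rescaling of the map to [0, 1], and the toy arrays are assumptions made for illustration; the abstract does not say how the map is normalized, and this is not the authors' ML-Net or DetectNet code.

```python
import numpy as np

def apply_saliency(image: np.ndarray, saliency: np.ndarray) -> np.ndarray:
    """Weight an H x W x C image by an H x W saliency map (hypothetical helper)."""
    if image.shape[:2] != saliency.shape:
        raise ValueError("saliency map must match the image height and width")
    sal = saliency.astype(np.float32)
    # Assumption: rescale the map to [0, 1] so the product stays in the image's range.
    span = sal.max() - sal.min()
    sal = (sal - sal.min()) / span if span > 0 else np.ones_like(sal)
    # Broadcast the single-channel map across the colour channels.
    return image.astype(np.float32) * sal[..., None]

# Toy input: a uniform 2 x 2 RGB image and a made-up saliency map.
image = np.full((2, 2, 3), 200, dtype=np.uint8)
saliency = np.array([[0.0, 0.5], [1.0, 1.0]])
weighted = apply_saliency(image, saliency)
```

In a pipeline of this kind, `weighted` would be the array passed on to the detector, so regions with low saliency are dimmed before detection.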

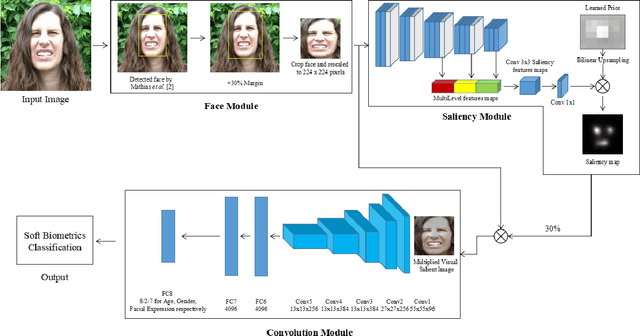

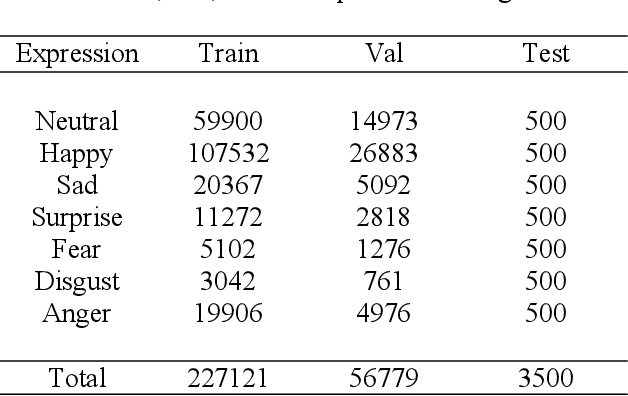

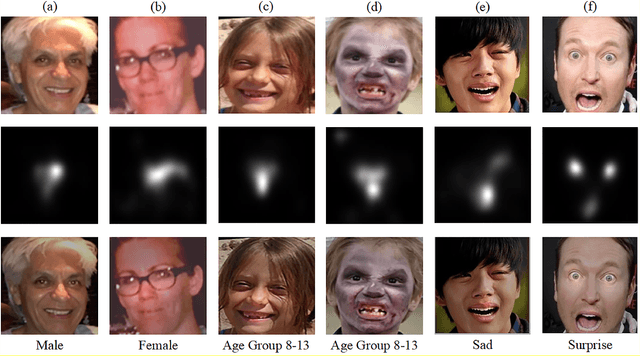

VEGAC: Visual Saliency-based Age, Gender, and Facial Expression Classification Using Convolutional Neural Networks

Mar 13, 2018

Abstract: This paper explores the use of visual saliency to classify age, gender, and facial expression in facial images. For multi-task classification, we propose our method VEGAC, which is based on visual saliency. Using the Deep Multi-level Network [1] and an off-the-shelf face detector [2], our proposed method first detects the face in the test image and extracts the CNN predictions on the cropped face. The CNN of VEGAC was fine-tuned on a dataset collected from different benchmarks. Our convolutional neural network (CNN) uses the VGG-16 architecture [3] and is pre-trained on ImageNet for image classification. We demonstrate the usefulness of our method for age estimation, gender classification, and facial expression classification. We show that our method obtains competitive results on selected benchmarks. All our models and code will be publicly available.

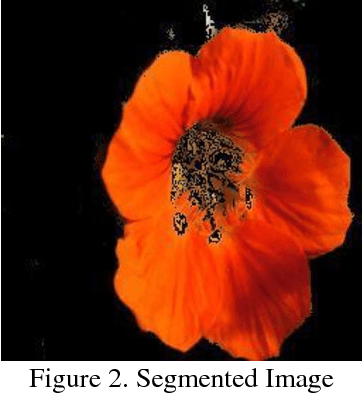

Flower Categorization using Deep Convolutional Neural Networks

Dec 08, 2017

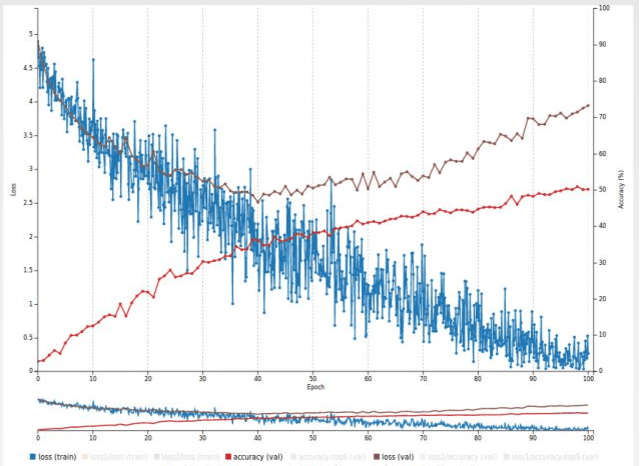

Abstract: We have developed a deep learning network for the classification of different flowers. For this, we have used the Visual Geometry Group's 102-category flower dataset from the University of Oxford, which has 8189 images of 102 different flowers. The method is divided into two parts: image segmentation and classification. We have compared the performance of two Convolutional Neural Network architectures, GoogLeNet and AlexNet, for classification. Keeping the hyperparameters the same for both architectures, we have found that the top-1 and top-5 accuracies of GoogLeNet are 47.15% and 69.17% respectively, whereas the top-1 and top-5 accuracies of AlexNet are 43.39% and 68.68% respectively. These results are far above the random classification accuracy of 0.98%. This method for classification of flowers can be implemented in real-time applications and can be used to help botanists in their research as well as camping enthusiasts.
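For readers unfamiliar with the metrics quoted above, the following sketch shows one common way to compute top-k accuracy and the random baseline for 102 classes. The function name `top_k_accuracy` and the toy scores are illustrative assumptions, not the authors' evaluation code.

```python
import numpy as np

def top_k_accuracy(scores: np.ndarray, labels: np.ndarray, k: int) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    # Indices of the k largest scores in each row (order within the k is irrelevant).
    top_k = np.argsort(scores, axis=1)[:, -k:]
    hits = (top_k == labels[:, None]).any(axis=1)
    return float(hits.mean())

# Toy example: 3 samples, 4 classes (made-up scores).
scores = np.array([
    [0.10, 0.60, 0.20, 0.10],
    [0.50, 0.10, 0.30, 0.10],
    [0.30, 0.15, 0.05, 0.50],
])
labels = np.array([1, 2, 1])
top1 = top_k_accuracy(scores, labels, k=1)
top2 = top_k_accuracy(scores, labels, k=2)

# Random-guess top-1 baseline for the 102-category dataset, in percent.
random_top1_percent = 100.0 / 102
```

With 102 equally likely classes, a uniform random guess is correct once in 102 tries, which is where the 0.98% figure in the abstract comes from.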

A Novel Approach for Image Segmentation based on Histograms computed from Hue-data

Jul 30, 2017

Abstract: Computer vision is growing day by day in terms of user-specific applications. The first step of any such application is segmenting an image. In this paper, we propose a novel, grass-roots-level image segmentation algorithm for cases in which the background has a uniform color distribution. This algorithm can be used for images of flowers, birds, insects, and many other subjects where such background conditions occur. With image segmentation, a computer's ability to interpret an image improves manifold, and it can even attain near-human accuracy during classification.

* 4 pages
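The abstract above does not spell out the algorithm, so the following is only a rough sketch of one way a hue histogram can separate a subject from a uniformly colored background: take the most populated hue bin as the background and mark pixels whose hue lies far from it as foreground. The function names, the bin count, and the tolerance are all assumptions, and this should not be read as the paper's method.

```python
import colorsys
import numpy as np

def hue_channel(rgb: np.ndarray) -> np.ndarray:
    """Per-pixel hue in [0, 1) for an H x W x 3 uint8 RGB image."""
    flat = rgb.reshape(-1, 3) / 255.0
    hues = np.array([colorsys.rgb_to_hsv(r, g, b)[0] for r, g, b in flat])
    return hues.reshape(rgb.shape[:2])

def segment_by_dominant_hue(rgb: np.ndarray, bins: int = 36, tolerance: int = 1) -> np.ndarray:
    """Return a boolean mask that is True where a pixel is treated as foreground."""
    hue = hue_channel(rgb)
    pixel_bin = np.minimum((hue * bins).astype(int), bins - 1)
    # Histogram of hue bins; the fullest bin is assumed to be the background.
    hist = np.bincount(pixel_bin.ravel(), minlength=bins)
    background_bin = int(np.argmax(hist))
    # Hue is circular, so measure bin distance around the colour wheel.
    diff = np.abs(pixel_bin - background_bin)
    circular = np.minimum(diff, bins - diff)
    return circular > tolerance

# Toy image: green background with a single red pixel as the "subject".
img = np.zeros((4, 4, 3), dtype=np.uint8)
img[..., 1] = 200
img[1, 2] = (200, 0, 0)
mask = segment_by_dominant_hue(img)
```

A sketch like this ignores saturation and brightness, so gray or very dark pixels (whose hue is poorly defined) would need separate handling in practice.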
