Attila Szabo

Federated Learning with Taskonomy for Non-IID Data

Mar 29, 2021

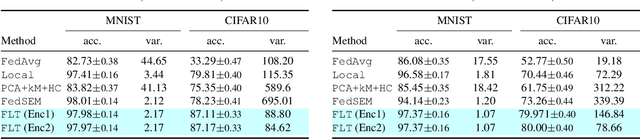

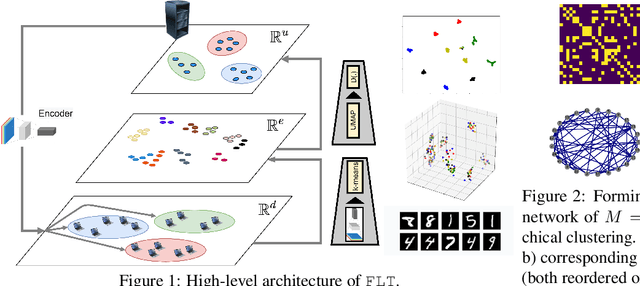

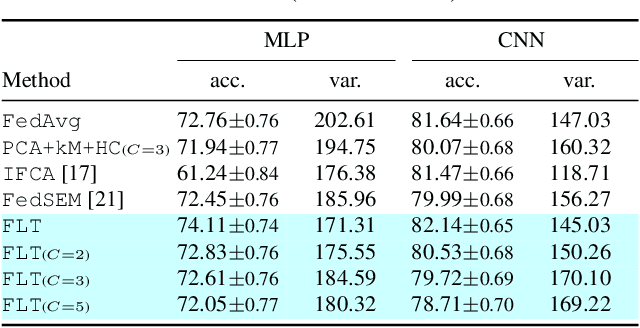

Abstract: Classical federated learning approaches incur significant performance degradation in the presence of non-IID client data. A possible direction to address this issue is forming clusters of clients with roughly IID data. Most solutions following this direction are iterative, relatively slow, and prone to convergence issues when discovering the underlying cluster formations. We introduce federated learning with taskonomy (FLT), which generalizes this direction by learning the task-relatedness between clients for more efficient federated aggregation of heterogeneous data. In a one-off process, the server provides the clients with a pretrained (and fine-tunable) encoder; the clients use it to compress their data into a latent representation and transmit the signature of their data back to the server. The server then learns the task-relatedness among clients via manifold learning and performs a generalization of federated averaging. FLT can flexibly handle a generic client relatedness graph when there are no explicit clusters of clients, and can also efficiently decompose it into (disjoint) clusters for clustered federated learning. We demonstrate that FLT not only outperforms the existing state-of-the-art baselines in non-IID scenarios but also offers improved fairness across clients.
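The aggregation idea in the abstract can be illustrated with a minimal sketch. The abstract does not specify the signature format, the manifold-learning step, or the exact aggregation rule, so everything below is an assumption made for illustration: signatures are taken to be nonzero vectors, relatedness is approximated by a softmax over cosine similarities (a stand-in for manifold learning, not the paper's method), and the hypothetical helpers `relatedness_weights` and `aggregate` are not from the paper. The only point shown is that a row-stochastic relatedness matrix gives each client its own weighted average, and that uniform weights recover plain federated averaging with equally weighted clients.

```python
import numpy as np

def relatedness_weights(signatures, temperature=0.1):
    """Hypothetical helper: turn client signatures into a row-stochastic
    relatedness matrix via a softmax over cosine similarities.
    Assumes every signature is a nonzero vector."""
    s = np.asarray(signatures, dtype=float)
    s = s / np.linalg.norm(s, axis=1, keepdims=True)  # unit-normalize rows
    logits = (s @ s.T) / temperature                  # scaled cosine similarity
    logits -= logits.max(axis=1, keepdims=True)       # numerical stability
    w = np.exp(logits)
    return w / w.sum(axis=1, keepdims=True)           # each row sums to 1

def aggregate(client_params, weights):
    """Row i of the result is the weighted average of all client
    parameter vectors, using row i of `weights`."""
    return weights @ np.asarray(client_params, dtype=float)

# Toy data (made up): clients 0 and 1 have similar signatures, client 2 differs.
signatures = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
client_params = [[1.0, 1.0], [1.2, 0.8], [-1.0, 3.0]]

W = relatedness_weights(signatures)
personalized = aggregate(client_params, W)

# Uniform weights reduce to equally weighted federated averaging.
uniform = np.full((3, 3), 1.0 / 3.0)
fedavg = aggregate(client_params, uniform)
```

In this toy setup, clients 0 and 1 mostly average with each other, while client 2 keeps parameters close to its own; thresholding or clustering `W` would be one way to obtain disjoint client groups.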

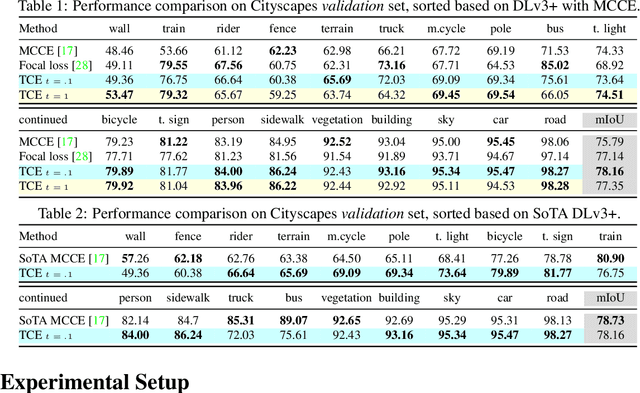

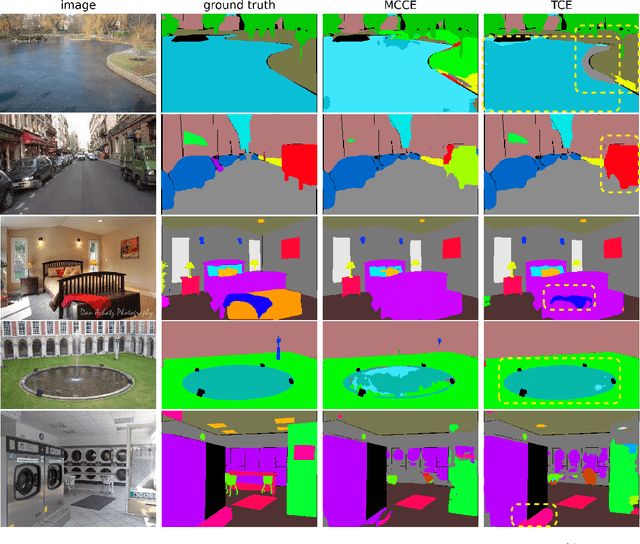

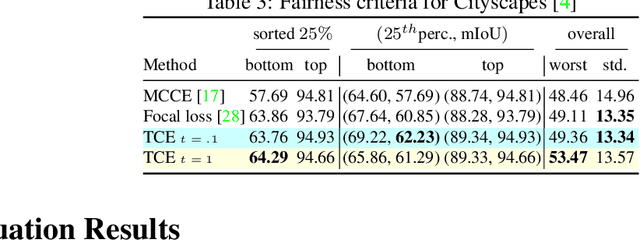

Tilted Cross Entropy (TCE): Promoting Fairness in Semantic Segmentation

Mar 25, 2021

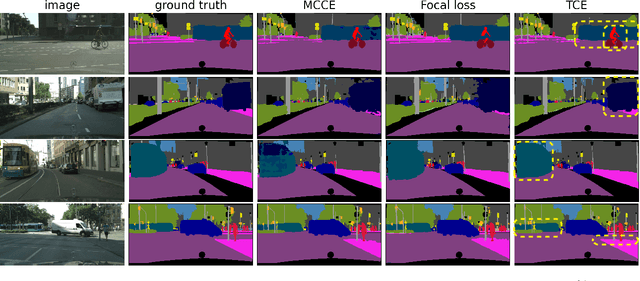

Abstract: Traditional empirical risk minimization (ERM) for semantic segmentation can disproportionately advantage or disadvantage certain target classes in favor of an (unfair but) improved overall performance. Inspired by the recently introduced tilted ERM (TERM), we propose a tilted cross-entropy (TCE) loss and adapt it to the semantic segmentation setting to minimize performance disparity among target classes and promote fairness. Through quantitative and qualitative performance analyses, we demonstrate that the proposed Stochastic TCE for semantic segmentation can efficiently improve the low-performing classes of the Cityscapes and ADE20k datasets trained with multi-class cross-entropy (MCCE), and also results in improved overall fairness.
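For intuition, the tilting idea from TERM replaces the plain average of losses with a log-mean-exp controlled by a tilt parameter t: (1/t) log((1/N) Σ exp(t · loss_i)). The sketch below applies that formula to a handful of made-up per-class losses. It is not the paper's TCE or Stochastic TCE formulation, which the abstract does not spell out; the function name `tilted_aggregate` and the example values are assumptions for illustration.

```python
import numpy as np

def tilted_aggregate(losses, t):
    """Tilted average of losses: (1/t) * log(mean(exp(t * loss))).
    t = 0 is treated as the plain mean (the limit as t -> 0);
    larger positive t puts more weight on the largest losses."""
    losses = np.asarray(losses, dtype=float)
    if t == 0:
        return float(losses.mean())
    z = t * losses
    m = z.max()  # subtract the max before exponentiating for stability
    return float((m + np.log(np.mean(np.exp(z - m)))) / t)

# Made-up per-class losses: the third class performs much worse.
per_class_losses = [0.1, 0.2, 2.0]

plain_mean = tilted_aggregate(per_class_losses, 0.0)
tilted = tilted_aggregate(per_class_losses, 5.0)
```

With a positive tilt, the aggregate moves from the mean toward the worst class loss, so minimizing it pushes harder on low-performing classes than the plain average does.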

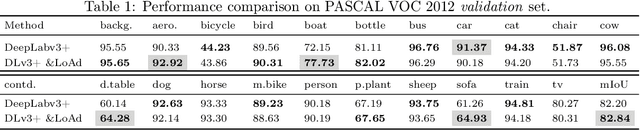

Lookahead Adversarial Semantic Segmentation

Jun 19, 2020

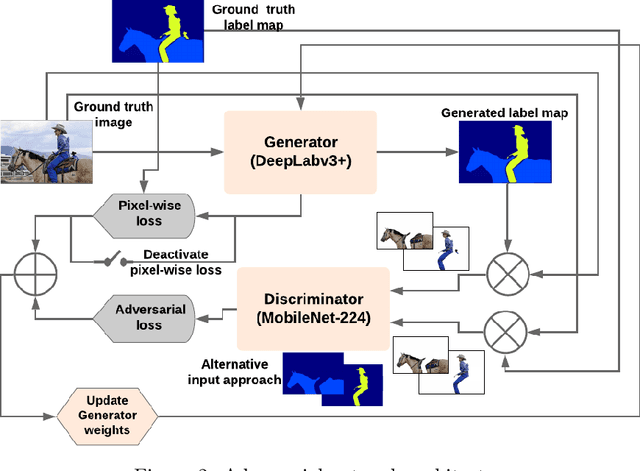

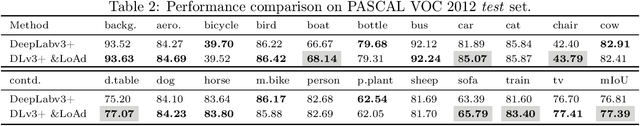

Abstract: Semantic segmentation is one of the most fundamental problems in computer vision, with significant impact on a wide variety of applications. Adversarial learning has been shown to be an effective approach for improving semantic segmentation quality by enforcing higher-level pixel correlations and structural information. However, state-of-the-art semantic segmentation models cannot be easily plugged into an adversarial setting because they are not designed to accommodate convergence and stability issues in adversarial networks. We bridge this gap by building a conditional adversarial network with a state-of-the-art segmentation model (DeepLabv3+) at its core. To battle the stability issues, we introduce a novel lookahead adversarial learning approach (LoAd) with an embedded label map aggregation module. We demonstrate that the proposed solution can alleviate divergence issues in an adversarial semantic segmentation setting and yields considerable performance improvements (up to 5% in some classes) over the baseline on two standard datasets.
