Athanasios Oikonomou

Mechanical Engineering, University of Patras, Patras, Greece; National Centre for Scientific Research Demokritos, Agia Paraskevi, Attica, Greece; Superlabs AMKE, Marousi, Attica, Greece

FairyLandAI: Personalized Fairy Tales utilizing ChatGPT and DALLE-3

Jul 12, 2024

Abstract: In the diverse world of AI-driven storytelling, there is a unique opportunity to engage young audiences with customized and personalized narratives. This paper introduces FairyLandAI, an innovative Large Language Model (LLM) developed through OpenAI's API, specifically crafted to create personalized fairytales for children. The distinctive feature of FairyLandAI is its dual capability: it not only generates stories that are engaging, age-appropriate, and reflective of various traditions but also autonomously produces imaginative prompts suitable for advanced image generation tools like GenAI and DALLE-3, thereby enriching the storytelling experience. FairyLandAI is expertly tailored to resonate with the imaginative worlds of children, providing narratives that are both educational and entertaining and in alignment with the moral values inherent in different ages. Its unique strength lies in customizing stories to match individual children's preferences and cultural backgrounds, heralding a new era in personalized storytelling. Further, its integration with image generation technology offers a comprehensive narrative experience that stimulates both verbal and visual creativity. Empirical evaluations of FairyLandAI demonstrate its effectiveness in crafting captivating stories for children, which not only entertain but also embody the values and teachings of diverse traditions. This model serves as an invaluable tool for parents and educators, supporting them in imparting meaningful moral lessons through engaging narratives. FairyLandAI represents a pioneering step in using LLMs, particularly through OpenAI's API, for educational and cultural enrichment, making complex moral narratives accessible and enjoyable for young, imaginative minds.

Physics-Informed Bayesian Learning of Electrohydrodynamic Polymer Jet Printing Dynamics

Apr 16, 2022

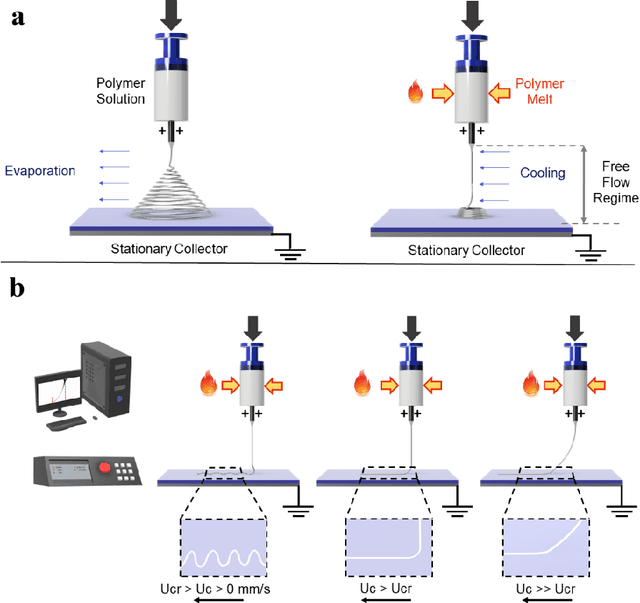

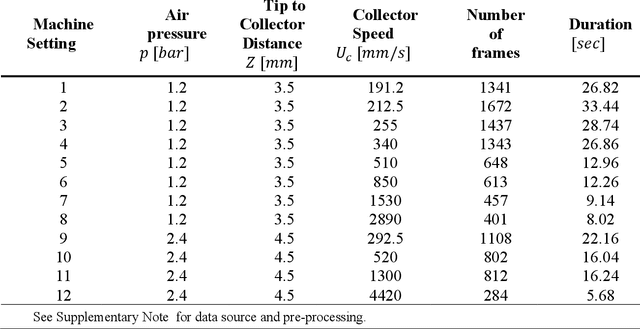

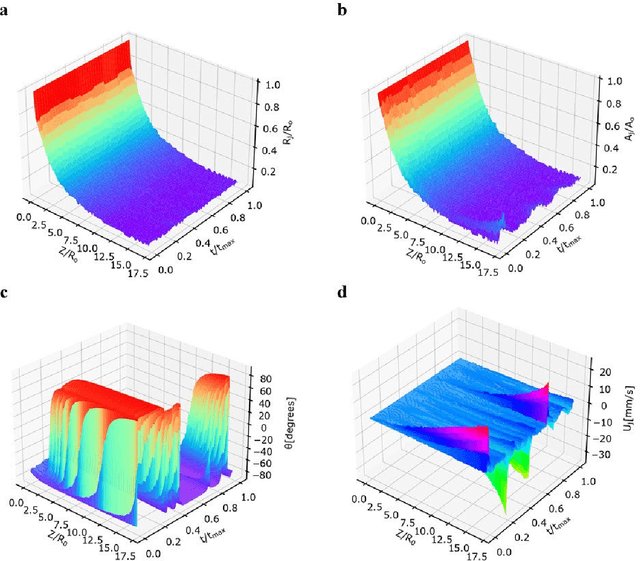

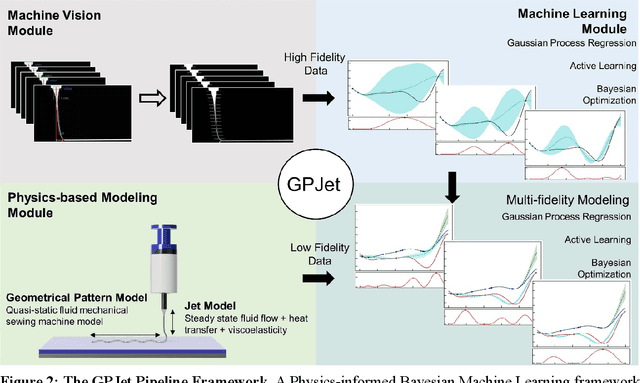

Abstract: Calibration of highly dynamic multi-physics manufacturing processes such as electrohydrodynamics-based additive manufacturing (AM) technologies (E-jet printing) is still performed by labor-intensive trial-and-error practices. These practices have hindered the broad adoption of these technologies, demanding a new paradigm of self-calibrating E-jet printing machines. To address this need, we developed GPJet, an end-to-end physics-informed Bayesian learning framework, and tested it on a virtual E-jet printing machine with in-process jet monitoring capabilities. GPJet consists of three modules: a) the Machine Vision module, b) the Physics-Based Modeling module, and c) the Machine Learning (ML) module. We demonstrate that the Machine Vision module can extract high-fidelity jet features in real time from video data using an automated parallelized computer vision workflow. In addition, we show that the Machine Vision module, combined with the Physics-Based Modeling module, can act as closed-loop sensory feedback, supplying the Machine Learning module with high- and low-fidelity data. Powered by our data-centric approach, we demonstrate that the online ML planner can actively learn the jet process dynamics using video and physics with minimum experimental cost. GPJet brings us one step closer to realizing the vision of intelligent AM machines that can efficiently search complex process-structure-property landscapes and create optimized material solutions for a wide range of applications at a fraction of the cost and time.
