Atay Ozgovde

DeepAir: A Multi-Agent Deep Reinforcement Learning Based Scheme for an Unknown User Location Problem

Aug 11, 2024

Abstract: The deployment of unmanned aerial vehicles (UAVs) in many different settings has provided various solutions and strategies for networking paradigms. UAVs thereby reduce the complexity of solving existing problems that would otherwise require more sophisticated approaches. One such problem is that of unknown user locations in an infrastructure-less environment, where users cannot connect to any communication device or computation-providing server, even though such connectivity is essential for task offloading that meets the required quality of service (QoS). In this study, we investigate this problem thoroughly and propose a novel deep reinforcement learning (DRL) based scheme, DeepAir. DeepAir considers all of the necessary steps, including sensing, localization, resource allocation, and multi-access edge computing (MEC), to achieve the QoS requirements of the offloaded tasks without violating the maximum tolerable delay. To this end, we use two types of UAVs: detector UAVs and serving UAVs. We utilize detector UAVs as DRL agents that handle sensing, localization, and resource allocation, while serving UAVs provide MEC features. Our experiments show that DeepAir achieves a high task success rate while deploying fewer detector UAVs than benchmark methods, across environments with different numbers of users and user attraction points.

Air Computing: A Survey on a New Generation Computation Paradigm in 6G Wireless Networks

Sep 10, 2022

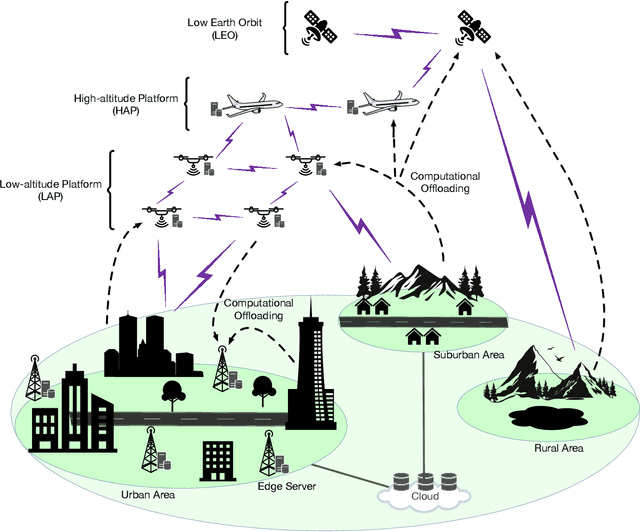

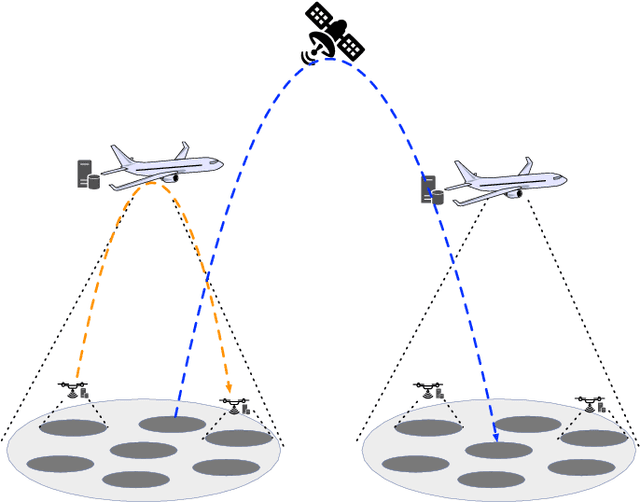

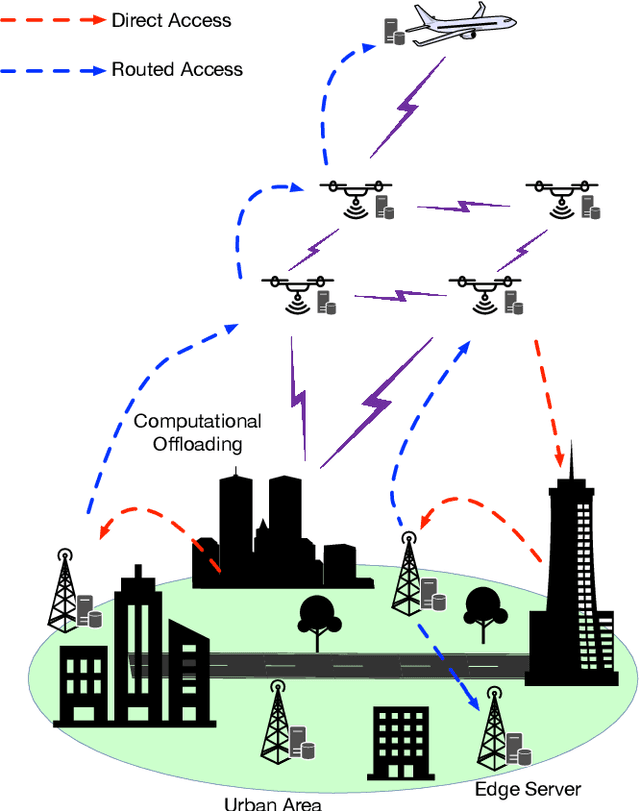

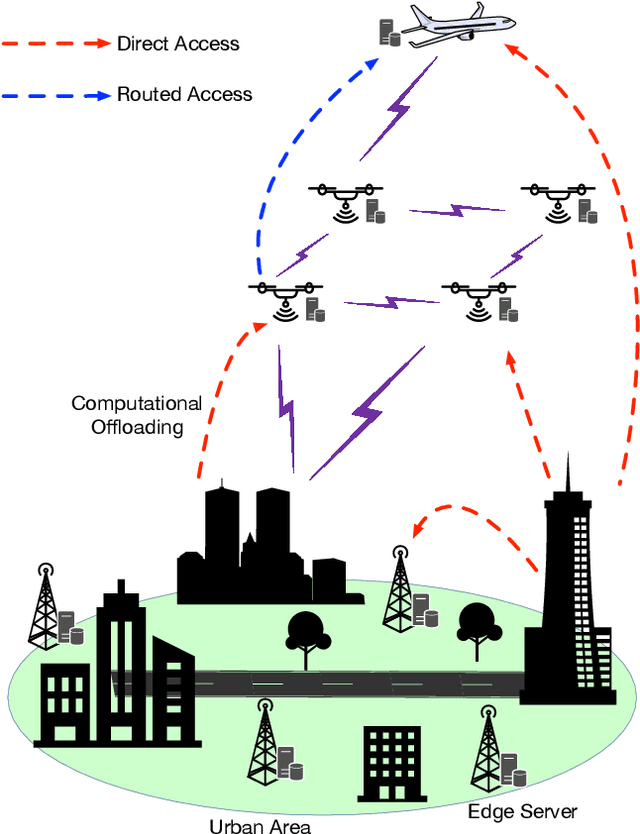

Abstract: There is an ever-growing race between what novel applications demand from the infrastructure and what continuous technological breakthroughs bring in. Especially after the proliferation of smart devices and diverse IoT requirements, we observe the dominance of cutting-edge applications with ever-increasing user expectations in terms of mobility, pervasiveness, and real-time response. Over the years, to meet the requirements of those applications, cloud computing has provided the necessary capacity for computation, while edge computing has ensured low latency. However, these two essential solutions will be insufficient for next-generation applications, since computational and communication bottlenecks are inevitable under highly dynamic load. Therefore, a 3D networking structure that uses different air layers, including Low Altitude Platforms, High Altitude Platforms, and Low Earth Orbits, in a harmonized manner for both urban and rural areas should be applied to satisfy the requirements of the dynamic environment. From this perspective, we put forward a novel, next-generation paradigm called Air Computing that presents a dynamic, responsive, and high-resolution computation and communication environment for the full spectrum of applications, using 6G Wireless Networks as the fundamental communication system. In this survey, we define the components of air computing, investigate its architecture in detail, and discuss its essential use cases and the advantages it brings for next-generation application scenarios. We provide a detailed technical overview of the benefits and challenges of air computing as a novel paradigm and identify important future research directions.

DeepEdge: A Deep Reinforcement Learning based Task Orchestrator for Edge Computing

Oct 05, 2021

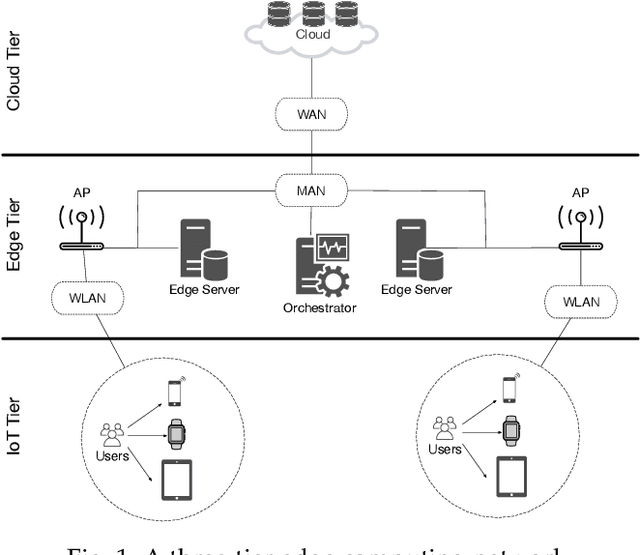

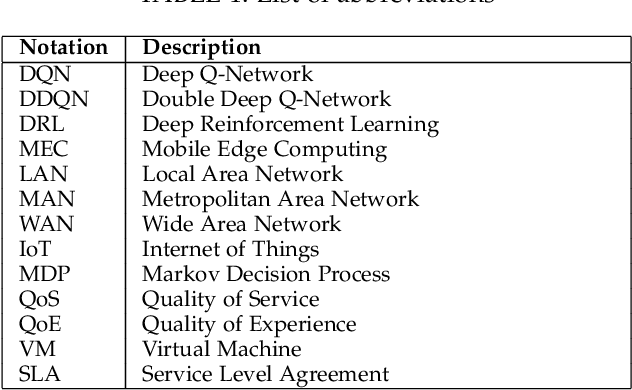

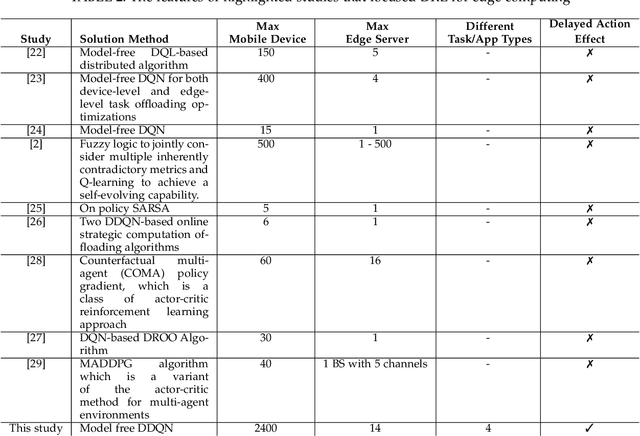

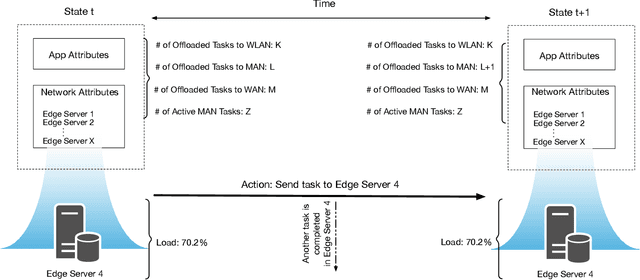

Abstract: Improvements in edge computing technology pave the way for diversified applications that demand real-time interaction. However, due to the mobility of end users and the dynamic edge environment, it becomes challenging to handle task offloading with high performance. Moreover, since each application on a mobile device has different characteristics, a task orchestrator must be adaptive and able to learn the dynamics of the environment. For this purpose, we develop a deep reinforcement learning based task orchestrator, DeepEdge, which learns to meet different task requirements without human interaction, even under heavily loaded stochastic network conditions in terms of mobile users and applications. Given the dynamic offloading requests and time-varying communication conditions, we model the problem as a Markov process and then apply the Double Deep Q-Network (DDQN) algorithm to implement DeepEdge. To evaluate the robustness of DeepEdge, we experiment with four different applications, namely image rendering, infotainment, pervasive health, and augmented reality, in the network under various loads. Furthermore, we compare the performance of our agent with four different task offloading approaches from the literature. Our results show that DeepEdge outperforms its competitors in terms of the percentage of satisfactorily completed tasks.
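For readers unfamiliar with the Double Deep Q-Network (DDQN) algorithm named in the abstract, the following is a minimal sketch of the generic Double DQN learning target, not the DeepEdge implementation. The function name, the plain-list representation of Q-values, and the example numbers are assumptions made for illustration only. The idea it shows is that the online network selects the next action while the target network evaluates it.

```python
from typing import Sequence


def double_dqn_target(
    reward: float,
    done: bool,
    q_online_next: Sequence[float],
    q_target_next: Sequence[float],
    gamma: float = 0.99,
) -> float:
    """Generic Double DQN target for one transition (illustrative sketch).

    q_online_next: Q-values of the next state from the online network.
    q_target_next: Q-values of the next state from the target network.
    """
    if done:
        # Terminal transition: no bootstrapped future value.
        return reward
    # Action selection uses the online network ...
    best_action = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ... while action evaluation uses the target network.
    return reward + gamma * q_target_next[best_action]


# Hypothetical numbers: the online network prefers action 1,
# so the target network's value for action 1 (0.3) is bootstrapped.
target = double_dqn_target(1.0, False, [0.2, 0.9, 0.1], [0.5, 0.3, 0.8], gamma=0.5)
```

Decoupling selection from evaluation in this way is what distinguishes Double DQN from standard DQN, which would take the maximum over the target network's own values (0.8 in the example above) and tends to overestimate action values.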
