Ata Kaboudi

DeepProv: Behavioral Characterization and Repair of Neural Networks via Inference Provenance Graph Analysis

Sep 30, 2025

Abstract: Deep neural networks (DNNs) are increasingly being deployed in high-stakes applications, from self-driving cars to biometric authentication. However, their unpredictable and unreliable behaviors in real-world settings require new approaches to characterize and ensure their reliability. This paper introduces DeepProv, a novel and customizable system designed to capture and characterize the runtime behavior of DNNs during inference by using their underlying graph structure. Inspired by system audit provenance graphs, DeepProv models the computational information flow of a DNN's inference process through Inference Provenance Graphs (IPGs). These graphs provide a detailed structural representation of a DNN's behavior, allowing both empirical and structural analysis. DeepProv uses these insights to systematically repair DNNs for specific objectives, such as improving robustness, privacy, or fairness. We instantiate DeepProv with adversarial robustness as the goal of model repair and conduct extensive case studies to evaluate its effectiveness. Our results demonstrate its effectiveness and scalability across diverse classification tasks, attack scenarios, and model complexities. DeepProv automatically identifies repair actions at the node and edge level within IPGs, significantly enhancing the robustness of the model. In particular, applying DeepProv repair strategies to just a single layer of a DNN yields an average 55% improvement in adversarial accuracy. Moreover, DeepProv complements existing defenses, achieving substantial gains in adversarial robustness. Beyond robustness, we demonstrate the broader potential of DeepProv as an adaptable system to characterize DNN behavior in other critical areas, such as privacy auditing and fairness analysis.
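To make the idea of an inference-time graph concrete, the following is a minimal sketch of one plausible reading of an IPG, not DeepProv's actual construction. It assumes a tiny bias-free ReLU network given as nested lists, treats each neuron as a node, and records an edge whenever a source neuron contributes a non-zero value (weight times activation) to a neuron that fires. The function name `build_ipg`, the `threshold` parameter, and the edge-weight definition are all hypothetical choices made for illustration.

```python
from typing import Dict, List, Sequence, Tuple

# A node is identified by (layer index, neuron index); layer 0 is the input.
Node = Tuple[int, int]
Edge = Tuple[Node, Node]


def build_ipg(
    weights: Sequence[Sequence[Sequence[float]]],
    x: Sequence[float],
    threshold: float = 0.0,
) -> Tuple[Dict[Edge, float], List[float]]:
    """Run one forward pass and record which connections carried signal.

    weights[l][j][i] is the weight from neuron i in layer l to neuron j in
    layer l + 1. Biases are omitted and ReLU is applied at every layer,
    which is a simplification made for this sketch.
    """
    edges: Dict[Edge, float] = {}
    activations = list(x)
    for layer, matrix in enumerate(weights):
        next_activations = []
        for j, row in enumerate(matrix):
            # Per-connection contribution for this specific input.
            contributions = [w * a for w, a in zip(row, activations)]
            output = max(0.0, sum(contributions))  # ReLU
            next_activations.append(output)
            if output > 0.0:
                # Only active target neurons get incoming edges.
                for i, c in enumerate(contributions):
                    if abs(c) > threshold:
                        edges[((layer, i), (layer + 1, j))] = c
        activations = next_activations
    return edges, activations


if __name__ == "__main__":
    toy_weights = [[[1.0, -1.0], [0.0, 2.0]], [[1.0, 1.0]]]
    graph, output = build_ipg(toy_weights, [1.0, 0.5])
    for (src, dst), value in sorted(graph.items()):
        print(src, "->", dst, value)
    print("output:", output)
```

Under these assumptions the graph differs from input to input, which is what would let one compare the structure induced by benign and adversarial samples. How DeepProv actually defines nodes, edges, and repair actions is specified in the paper, not here.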

Morphence-2.0: Evasion-Resilient Moving Target Defense Powered by Out-of-Distribution Detection

Jun 15, 2022

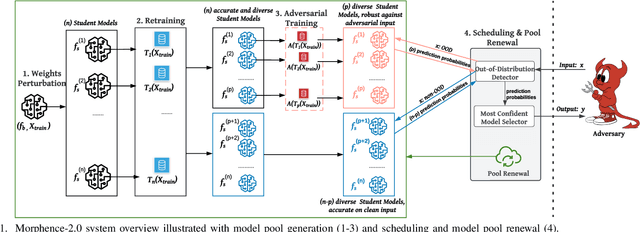

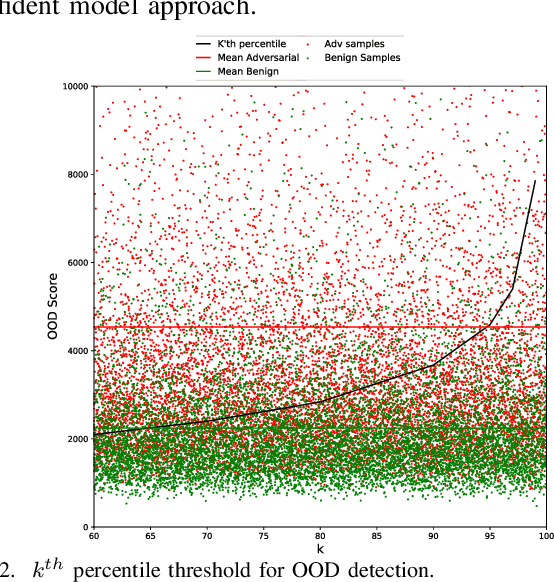

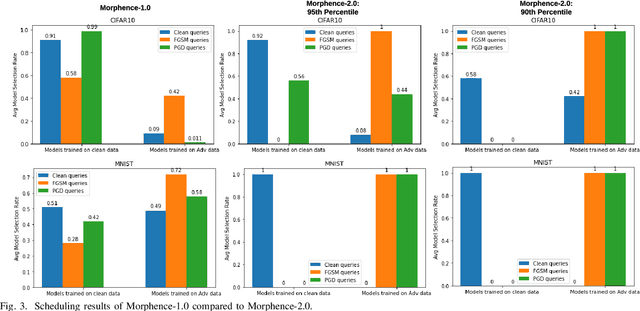

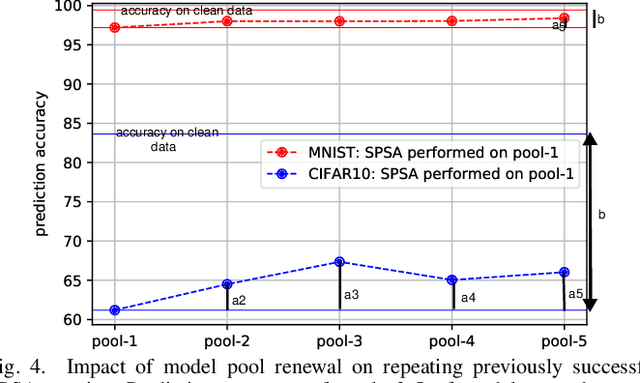

Abstract: Evasion attacks against machine learning models often succeed via iterative probing of a fixed target model, whereby an attack that succeeds once will succeed repeatedly. One promising approach to counter this threat is making a model a moving target against adversarial inputs. To this end, we introduce Morphence-2.0, a scalable moving target defense (MTD) powered by out-of-distribution (OOD) detection to defend against adversarial examples. By regularly moving the decision function of a model, Morphence-2.0 makes it significantly harder for repeated or correlated attacks to succeed. Morphence-2.0 deploys a pool of models generated from a base model in a manner that introduces sufficient randomness when it responds to prediction queries. Via OOD detection, Morphence-2.0 is equipped with a scheduling approach that assigns adversarial examples to robust decision functions and benign samples to undefended, accurate models. To ensure repeated or correlated attacks fail, the deployed pool of models automatically expires after a query budget is reached and the model pool is seamlessly replaced by a new model pool generated in advance. We evaluate Morphence-2.0 on two benchmark image classification datasets (MNIST and CIFAR10) against 4 reference attacks (3 white-box and 1 black-box). Morphence-2.0 consistently outperforms prior defenses while preserving accuracy on clean data and reducing attack transferability. We also show that, when powered by OOD detection, Morphence-2.0 is able to precisely make an input-based movement of the model's decision function that leads to higher prediction accuracy on both adversarial and benign queries.
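The scheduling behavior described in the abstract can be sketched roughly as follows. This is an illustrative sketch under stated assumptions, not the Morphence-2.0 implementation: `ModelPool`, `MovingTargetScheduler`, the `is_ood` callable, and the random choice within a pool are hypothetical names and choices, and cycling through a fixed list of pools stands in for replacing an expired pool with one generated in advance.

```python
import random
from typing import Callable, List, Sequence

# A model is any callable mapping an input vector to a class label.
Model = Callable[[Sequence[float]], int]


class ModelPool:
    """One deployable pool: undefended accurate models plus robust ones."""

    def __init__(self, accurate: List[Model], robust: List[Model]) -> None:
        if not accurate or not robust:
            raise ValueError("each pool needs at least one model of each kind")
        self.accurate = list(accurate)
        self.robust = list(robust)


class MovingTargetScheduler:
    """Routes queries by OOD verdict and rotates pools on a query budget."""

    def __init__(
        self,
        pools: List[ModelPool],
        is_ood: Callable[[Sequence[float]], bool],
        query_budget: int,
        seed: int = 0,
    ) -> None:
        if not pools:
            raise ValueError("at least one pool is required")
        if query_budget < 1:
            raise ValueError("query_budget must be positive")
        self._pools = list(pools)
        self._is_ood = is_ood
        self._budget = query_budget
        self._rng = random.Random(seed)
        self.active_index = 0
        self.queries_used = 0

    def predict(self, x: Sequence[float]) -> int:
        # Expire the active pool once its budget is spent, then switch to
        # the next pre-generated pool (cycling is a simplification).
        if self.queries_used >= self._budget:
            self.active_index = (self.active_index + 1) % len(self._pools)
            self.queries_used = 0
        self.queries_used += 1

        pool = self._pools[self.active_index]
        # Inputs flagged as out-of-distribution go to robust models;
        # everything else goes to an undefended accurate model.
        candidates = pool.robust if self._is_ood(x) else pool.accurate
        return self._rng.choice(candidates)(x)
```

In this sketch the OOD detector is a plain predicate supplied by the caller; in practice it would be a trained detector, and the robust and accurate models would be derived from a common base model as the abstract describes.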
